Apps & Software

Latest about Apps & Software

-

-

Safeguards

SafeguardsGoogle and Amazon unite to tackle cloud outages with innovative networking solution

By Nickolas Diaz Published

-

Revamp

RevampGemini's web redesign introduces 'My Stuff' folder for a streamlined user experience

By Nandika Ravi Published

-

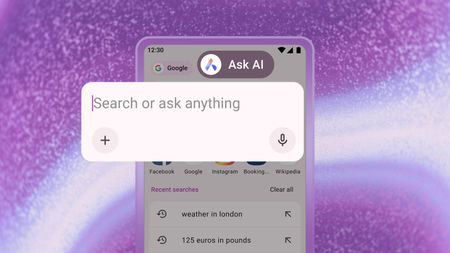

No more chaos

No more chaosOpera wants to save you from the chaos of switching between AI apps

By Sanuj Bhatia Published

-

A little QoL

A little QoLGoogle Messages leak suggests a world with better SIM switching for RCS is coming

By Nickolas Diaz Published

-

Year in Search 2025

Year in Search 2025Google's 2025 search data reveals a world gripped by Gemini and chaos

By Jay Bonggolto Published

-

The safety paradox

The safety paradoxYouTube cut off: Australian teens are losing logins under new age law

By Jay Bonggolto Published

-

gone for good

gone for goodGoogle just killed a key Home app feature, and parents are going to miss it

By Jay Bonggolto Published

-

Explore Apps & Software

AI

-

-

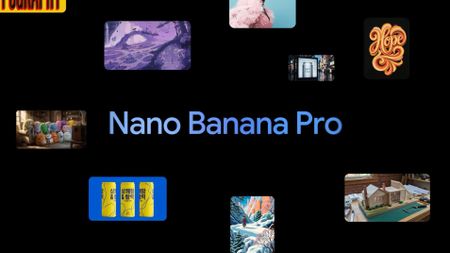

Tech Talk

Tech TalkWhat is Nano Banana? Here's a breakdown of the Gemini image editor and how to use it

By Jerry Hildenbrand Published

-

Google AI

Google AIGoogle Gemini AI: Gemini 3, Nano Banana, Live, best features, linked apps, and more

By Michael L Hicks Last updated

-

AI Mode upgrades

AI Mode upgradesGoogle expands Gemini 3 and Nano Banana Pro availability for AI Mode in Search globally

By Brady Snyder Published

-

Shifting tides

Shifting tidesChatGPT for Android is about to be low-key flooded with ads

By Nickolas Diaz Published

-

Early preview

Early previewThis Gemini for Home hack lets you hear the new assistant early, with a catch

By Brady Snyder Published

-

First Draft

First DraftGoogle tipped to bring an annotation feature to Gemini's images

By Nickolas Diaz Published

-

Group project?

Group project?Google could be secretly training Gemini to focus on user 'Projects'

By Nickolas Diaz Published

-

Tech Talk

Tech TalkHere's why you shouldn’t trust AI when doing your Black Friday shopping

By Jerry Hildenbrand Published

-

Gemini for Home

Gemini for HomeThis Google Home update improves Gemini's voice assistant and simplifies AI descriptions

By Brady Snyder Published

-

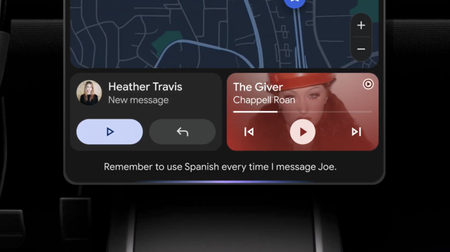

Android Auto

Gemini-fied

Gemini transforms Android Auto with new AI features for a smarter drive

By Nandika Ravi Published

Bye, Assistant!

Gemini for Android Auto is starting to replace Google Assistant

By Brady Snyder Published

Eyes on the Road

Google starts quietly rolling out an essential button on Android Auto

By Nickolas Diaz Published

game over

Google may be pulling the plug on Android Auto’s in-car mini-games

By Jay Bonggolto Published

Alternative?

Samsung's alleged 'Auto DeX' leak is an Android Auto variant you might see

By Nickolas Diaz Published

Share songs n ride

Android users can now "Jam" together with this new Spotify feature

By Vishnu Sarangapurkar Published

Coming soon

I tried Android Auto with Gemini at Google I/O, here's how it went

By Brady Snyder Last updated

It's getting hot

Android Auto's UI for climate control support might look like this

By Nickolas Diaz Published

Might not happen

Latest Android Auto v14.2 seemingly dashes hopes of smart glasses navigation

By Nickolas Diaz Published

Android OS

-

-

Revamp

RevampGemini's web redesign introduces 'My Stuff' folder for a streamlined user experience

By Nandika Ravi Published

-

In-call safety arrives

In-call safety arrivesAndroid now warns before you open banking apps during risky calls

By Sanuj Bhatia Published

-

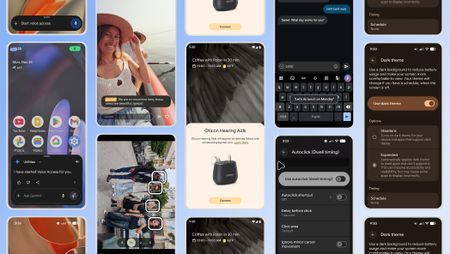

More inviting

More invitingFrom Expressive to Accessible, Google's winter Android update makes the OS easy for all

By Nickolas Diaz Published

-

The big shift

The big shiftAndroid 16 finally fights notification overload and lets you kill ugly icons

By Jay Bonggolto Published

-

Unwrap the December drop: Google brings fresh updates for Android users

By Nandika Ravi Published

-

Perfect personalization

Perfect personalizationOne UI 8.5 set to enhance Spotify and YouTube recommendations in Now Brief

By Brady Snyder Published

-

Bugg-y

Bugg-yQuick Share integration disrupts Pixel 10 Wi-Fi: Users seek temporary solutions

By Nandika Ravi Published

-

Bugg-y

Bugg-yPixel 10's Quick Share update creates Wi-Fi connection nightmare

By Nandika Ravi Published

-

Search away!

Search away!Google's testing the bottom search bar in Circle to Search for effortless queries

By Nandika Ravi Published

-

Gmail

Get a preview

Gmail gives Android users a window into email attachments with this update

By Nickolas Diaz Published

Sleigh Bells ring...

Google brings a unified 'Purchases' tab to Gmail ahead of the holiday rush

By Nickolas Diaz Published

new UX style

Gmail's new Material 3 Expressive design is secretly hitting some inboxes

By Jay Bonggolto Published

Quick reply

Gmail will now let you react to emails with emojis

By Brady Snyder Published

Mail upgrades

Gmail's new search results prioritize relevant emails over recent ones

By Brady Snyder Published

Gone phishing

Gmail could start rejecting suspicious emails even before they reach your inbox

By Steven Shaw Published

How to recover lost Google contacts for Android

By Mick Symons Last updated

7 best Gmail for Android tips and tricks

By Harish Jonnalagadda Published

How to set up out of office replies in Gmail

By Jeramy Johnson Published

Google Assistant

Bye, Assistant

Google Assistant could shut down for Android Auto in March 2026

By Brady Snyder Published

New look!

Google's song search evolves with a modern Gemini-inspired UI on Android

By Nandika Ravi Published

New look!

Google's voice and song search gets a major overhaul on Android after years

By Nandika Ravi Published

Stay in the know

Google introduces new tools to help users fight against evolving phishing scams effectively

By Nandika Ravi Published

Google Outage

Google, Gmail, and Meet hit by widespread outage, causing login issues

By Nandika Ravi Published

New voices

Google is spicing up its voice list on Search, according to a new leak

By Nandika Ravi Published

New Google AI plans

New Google AI Pro and $249/month Ultra subscriptions announced at I/O

By Vishnu Sarangapurkar Published

Easy-peasy

Google app on iOS gets a new feature that will 'Simplify' text online

By Nandika Ravi Published

Bye

Google officially killed Driving Mode after stripping most of its features in 2024

By Brady Snyder Published

Google Maps

Let's go there

Google Maps gets a major upgrade with Gemini for smooth navigation on Android and iOS

By Nickolas Diaz Published

Let's go there

Google Maps gets a Gemini boost to help you navigate the roads like a pro

By Nickolas Diaz Published

Double Rainbow

Here's what the redesigned Google Photos and Maps icons look like

By Nickolas Diaz Published

Real-time lane intelligence

Google Maps and Polestar fix the worst part of highway driving

By Jay Bonggolto Published

ETA at a glance

Google Maps is adding a nifty chip to show how long it'll take you to get home

By Sanuj Bhatia Published

Assistant is out

Your next Google Maps navigation could be planned by Gemini

By Jay Bonggolto Published

Smoother EV driving

Rivian partners with Google Maps for enhanced EV navigation experience

By Vishnu Sarangapurkar Published

Save from Screenshots

Google Maps for iOS simplifies saving locations from screenshots

By Vishnu Sarangapurkar Published

Fake reviews

Google Maps doubles down on preventing fake reviews

By Nandika Ravi Published

Google Pay

No more drain

Android’s next update is finally addressing your phone’s biggest battery hogs

By Jay Bonggolto Published

On Time

Google Wallet is helping Android users effortlessly catch their plane or train

By Nickolas Diaz Published

Quick Taps

Google Pay's fresh updates will unlock better shopping rewards for Chrome users

By Nickolas Diaz Published

Pay Your Way

Android users get another option to pay later with Klarna on Google Pay

By Nickolas Diaz Published

Easier access

Google Wallet brings digital ID support to UK, more US states

By Nandika Ravi Published

Now arriving at...

Google Wallet brings real-time train status alerts to Android, and teases I/O 2025

By Nickolas Diaz Published

Next stop is...

Londoners can join the Google Pay 'Tube Challenge' for badges and city lore

By Nickolas Diaz Published

How to add vaccine cards and medical info to Google Wallet

By Michael L Hicks Published

How to send and request money using GPay

By Jerry Hildenbrand Published

Google Play Store

You win!

Focus Friend and Pokémon TCG Pocket shine in Google Play's Best of 2025 awards

By Nickolas Diaz Published

Find it faster

Google Play enhances search with new 'Where to watch' streaming feature

By Sanuj Bhatia Published

No more sifting

Google's upcoming review search feature might soon help you save time on the Play Store

By Jay Bonggolto Published

Gift cards go green

You can now send Starbucks and Disney gift cards straight from Google Play

By Jay Bonggolto Published

Epic v. Google

Google and Epic's settlement proposal could finally end the multi-year Play Store dispute

By Brady Snyder Published

Ditch the scroll

Play Store’s new AI summaries could help you spot the best apps faster

By Jay Bonggolto Published

Age checks go live

Google Play users must now verify their age to keep downloading certain apps

By Sanuj Bhatia Published

The Order Stands

US Supreme Court upholds Google Play Store changes amid ongoing Epic Games lawsuit

By Nickolas Diaz Published

More games, more prizes

Android users can unlock new rewards with Google's revamped Play Points

By Nickolas Diaz Published

Meta

Early deals

Meta reveals its Black Friday offers early with Ray-Ban Meta and Meta Quest 3S deals

By Brady Snyder Published

Trade-in deals

Meta is piloting a trade-in program for Ray-Ban and Oakley smart glasses — here's how it works

By Brady Snyder Published

Shakeup

Meta's chief AI scientist is leaving the company after 12 years to create a startup

By Brady Snyder Published

Ruled in favor

Meta cleared of monopoly claims as judge highlights competition with TikTok

By Nickolas Diaz Published

Not so fast

Got a $100 off code from Meta that didn't work? You're not alone — here's what happened

By Brady Snyder Published

One inbox for all

You might soon message non-WhatsApp users — here’s how Meta plans to pull it off

By Jay Bonggolto Published

Finally!

Meta boosts older smart glasses with new Garmin and Strava features for fitness enthusiasts

By Brady Snyder Published

Trolls-away

Meta's Threads ups its game with new controls to keep trolls at bay

By Nandika Ravi Published

AI splurging

Zuckerberg explained when AI glasses will become 'profitable' during earnings call

By Michael L Hicks Published

Spotify

Wrap it up!

Explore Spotify Wrapped 2025: A year in music, top albums, and your unique listening age

By Nandika Ravi Published

Pricey!

Spotify might raise prices again for US users in early 2026

By Nandika Ravi Published

Easy switching

Spotify is making it easier to switch with in-app TuneMyMusic playlist transfers

By Brady Snyder Published

Pay up

Spotify lossless lands in India, and there's the inevitable price hike: it's 40% costlier than Apple Music, and I'm now paying 3x as much as my previous plan

By Harish Jonnalagadda Published

Spotify sharing

Android users can now share their favorite Spotify songs through WhatsApp Status

By Brady Snyder Published

Put you on repeat...

Spotify can now tell which songs Android users are addicted to

By Nickolas Diaz Published

Rocky Music

Spotify's having major issues on Samsung and Google phones—this is why

By Nickolas Diaz Published

Got a request?

Spotify's AI DJ takes requests in a new way on Android with prompts to get you started

By Nickolas Diaz Published

Step into lossless

How to enable Spotify Lossless audio on your smartphone

By Tshaka Armstrong Published

Partial outage

Facing trouble logging into X? You're not alone — here’s the scoop!

By Nandika Ravi Published

Twitter is down

It wasn't just you — X (Twitter) resolved a major outage today

By Brady Snyder Last updated

Whistleblower calls out Twitter for spambots and mishandling user data

By Derrek Lee Published

What is free speech?

By Jerry Hildenbrand Published

Twitter makes it easier to search for Communities on the web

By Derrek Lee Published

Massive Twitter outage ends after about 90 minutes

By Michael L Hicks Published

House committee summons Meta, Alphabet, Twitter and Reddit over Capitol riot

By Jay Bonggolto Published

Twitter wants to turn the Explore page into yet another TikTok clone

By Michael L Hicks Published

How to remove a follower on Twitter without blocking them

By Keegan Prosser Published

Wear OS

Watch 5 + Watch 5 Pro

One UI 8 Watch update expected soon for Galaxy Watch 5 and 5 Pro users

By Brady Snyder Published

News Weekly

News Weekly: Early look at the OnePlus 15R, Wear OS 6 lands on older Galaxy watches, Android Auto gets Gemini's tricks, and more

By Nandika Ravi Published

Wear this

Best Wear OS watch 2025

By Michael L Hicks Last updated

Wear OS Weekly

Wear OS 6 is one of the best parts of the Pixel Watch 4

By Michael L Hicks Published

Pixel Weather takes over

Google is phasing out the Wear OS Weather app, but for something better

By Sanuj Bhatia Published

Future of Wear OS

Wear OS 6: One UI 8 Watch, Material 3 Expressive, Gemini, & more

By Michael L Hicks Last updated

Wear OS Weekly

Custom watch faces for the Galaxy Watch 8 and Pixel Watch 4 are fantastic — here's where to find them

By Michael L Hicks Published

Fun faces

Facer officially returns to the Samsung Galaxy Watch 8 in massive update

By Michael L Hicks Published

Gemini on your Watch

Google brings the might of Gemini AI to your Pixel Watch

By Vishnu Sarangapurkar Published

YouTube

The safety paradox

YouTube cut off: Australian teens are losing logins under new age law

By Jay Bonggolto Published

AI everywhere

YouTube might soon let you tweak your suggested content with AI prompts

By Sanuj Bhatia Published

DM feature is back

YouTube revives in-app direct messaging in surprise test

By Jay Bonggolto Published

Targeted tracks

YouTube Music might finally fix its biggest playlist headache for a long time

By Jay Bonggolto Published

Piling on

Why YouTube is not working for users of Opera GX with ad blockers

By Nickolas Diaz Published

Piling on

YouTube's not working for users with ad blockers, at least for this one browser

By Nickolas Diaz Published

AI gets it wrong

YouTube's age verification system is back to calling adults kids

By Sanuj Bhatia Published

Old videos, new life

YouTube will soon use AI to make low-resolution videos look sharper

By Sanuj Bhatia Published

A Pop of Fun

YouTube's new 'Like' button animations bring a playful twist to your viewing experience

By Nickolas Diaz Published
