GPT-5 vs. Gemini 2.5 Pro: OpenAI's bold move in the growing AI landscape

ChatGPT's real-time router is a game-changer for user experience.

AI Byte is a weekly column covering all things artificial intelligence, including AI models, apps, features, and how they all impact your favorite devices.

OpenAI has the clear brand advantage in the AI race, and that's something that may prove impossible for competitors to overcome. ChatGPT has more weekly users than Meta or Google has on a monthly basis. Being first comes with benefits, which OpenAI is certainly reaping.

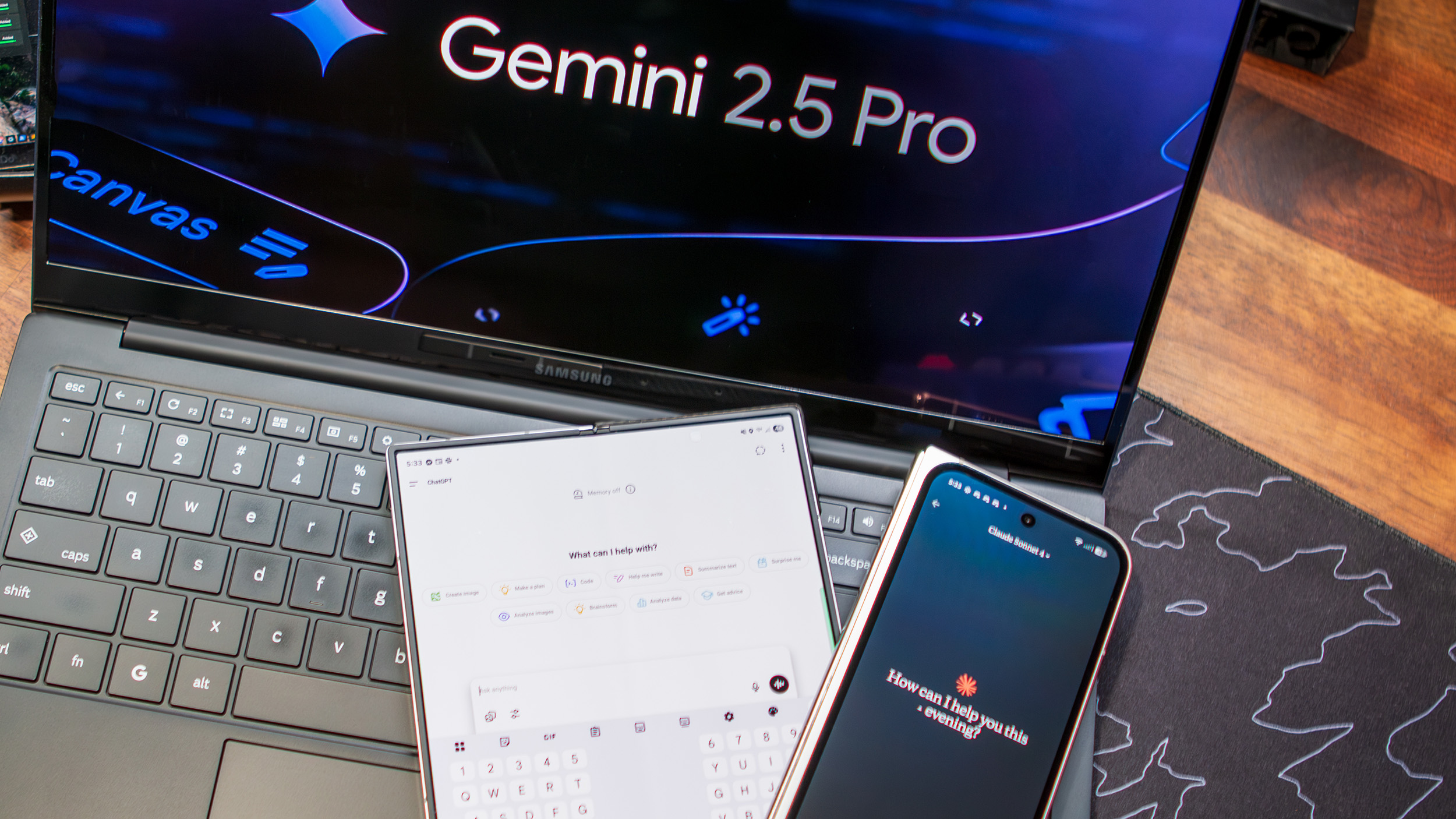

In the time since, though, the competition has caught up, at least on a functional level. AI models from Google, DeepSeek, Anthropic, and xAI have all held top-five spots on LMArena's leaderboard, signaling that the race from basic large language models (LLMs) to artificial general intelligence (AGI) is far from decided. For months, I've been convinced that Gemini 2.5 Pro is a better and more versatile thinking model than anything coming out of OpenAI.

Well, OpenAI is hoping to change that narrative with the release of GPT-5, which launched for everyone last week (Aug. 7). It's actually a collection of models that can intelligently pick which one is best for a given prompt on the fly. And at least for now, it's at the top of both the LMArena and WebDev Arena leaderboards — two crucial LLM benchmarks.

GPT-5 doesn't solve all of ChatGPT's hallucination problems, and it's definitely not AGI. There are some areas where Google models still outperform comparable ones from OpenAI. Regardless, GPT-5 looks impressive and is free to use, and that might be all OpenAI needs to maintain its hefty lead over the competition.

Where OpenAI's GPT-5 beats Google's Gemini 2.5 Pro

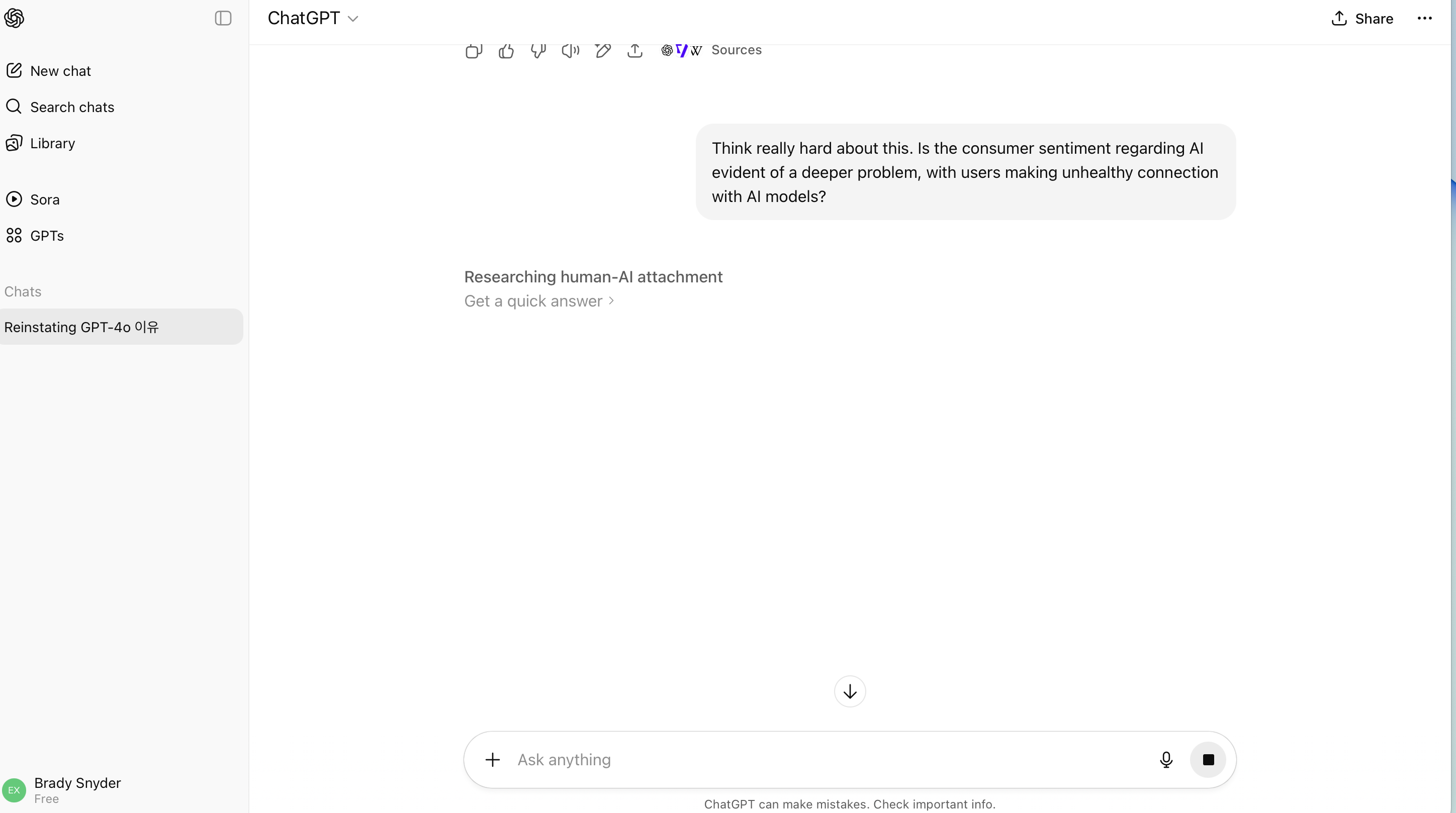

GPT-5’s smartest feature has nothing to do with compute or its knowledge base. It’s called real-time routing, and it picks the right model for your task without any additional user input. Until now, AI chatbots like ChatGPT and Gemini have been littered with a variety of new, old, and experimental models, each best suited to a specific kind of prompt. That’s great, but the onus has been on the user to decide which one is best. With names like o3 and GPT-4o, or Gemini Flash Thinking Experimental, making that decision wasn’t always easy.

Instead, GPT-5 is a simple name for a collection of OpenAI models. It includes a lightweight model for quick, easy prompts and a more thoughtful model called GPT-5 Thinking for complex queries. OpenAI’s real-time router means that all of these models function as one, at least to the user. After inputting a prompt to ChatGPT, the GPT-5 router will decide which one to use, streamlining the UX.

There are still ways to manually control which GPT-5 model responds to your prompt. You can use phrases like “think really hard about this” in your prompt to trigger GPT-5 Thinking. If ChatGPT mistakenly opts for the thinking model, users can tap “Get a quick answer” to pivot to the lightweight model instead. Both models appear to be very reliable in early testing, and both clearly cite their sources, which helps curb hallucinations.
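To make the routing idea concrete, here is a minimal sketch of how a router could choose between a lightweight model and a thinking model. OpenAI hasn't published how its router works, so everything here is an assumption for illustration: the model labels, the hint phrases, the length threshold, and the `route_prompt` function are all hypothetical. It only mirrors the behavior described above, where certain phrases nudge the prompt toward the thinking model and a "quick answer" request overrides that.

```python
from dataclasses import dataclass

# Hypothetical labels for illustration; not OpenAI's internal identifiers.
FAST_MODEL = "lightweight"
THINKING_MODEL = "thinking"

# Assumed phrases that signal the user wants deeper reasoning.
THINK_HINTS = ("think really hard", "think hard", "step by step")


@dataclass
class Route:
    model: str   # which model label was chosen
    reason: str  # short explanation of the choice


def route_prompt(prompt: str, force_quick: bool = False,
                 length_threshold: int = 400) -> Route:
    """Pick a model for a prompt using simple, made-up heuristics."""
    text = prompt.lower()
    # A quick-answer request from the user wins over every other signal.
    if force_quick:
        return Route(FAST_MODEL, "user asked for a quick answer")
    # An explicit hint in the prompt sends it to the thinking model.
    if any(hint in text for hint in THINK_HINTS):
        return Route(THINKING_MODEL, "prompt explicitly asks for more thinking")
    # Assumption: very long prompts are treated as complex.
    if len(prompt) > length_threshold:
        return Route(THINKING_MODEL, "long prompt treated as complex")
    return Route(FAST_MODEL, "short prompt treated as simple")


if __name__ == "__main__":
    prompts = (
        "What's the capital of France?",
        "Think really hard about this: plan a database migration.",
    )
    for p in prompts:
        r = route_prompt(p)
        print(f"{r.model}: {r.reason}")
```

A real router would almost certainly rely on learned signals rather than keyword matching, but the user-facing effect is the same: one entry point, with the model choice handled behind the scenes.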


These online sources, which include sites like Android Central, are a key reason why hallucinations are down with GPT-5. OpenAI says that with web search enabled, GPT-5’s responses are about 45% less likely to contain a factual error than GPT-4o’s. Hallucinations are by no means extinct, but they are less prevalent when using GPT-5 in ChatGPT.
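It's worth remembering that 45% is a relative figure, not an absolute one. The short sketch below shows what that means using a made-up baseline: the 10% error rate is purely hypothetical and is not a number OpenAI has reported, and `reduced_rate` is just a helper name chosen for this example.

```python
def reduced_rate(baseline_rate: float, relative_reduction: float) -> float:
    """Apply a relative reduction (e.g. 0.45 for 45%) to a baseline rate."""
    return baseline_rate * (1.0 - relative_reduction)


if __name__ == "__main__":
    baseline = 0.10  # hypothetical error rate, for illustration only
    new_rate = reduced_rate(baseline, 0.45)
    print(f"{baseline:.1%} -> {new_rate:.1%}")
```

In other words, a 45% relative drop shrinks whatever error rate you started with by a little under half; it doesn't mean errors occur 45 percentage points less often.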

Independent tests and benchmarks seem to align with OpenAI’s claims that GPT-5 is better at writing, coding, and health-related tasks. It finally overtakes Gemini 2.5 Pro on both the LMArena and WebDev Arena leaderboards, claiming the top spot overall. Specifically, GPT-5 seems to have the edge in text-based and coding prompts. I used a sample prompt from OpenAI to test GPT-5’s coding capabilities in ChatGPT, and came away seriously impressed.

Where Gemini still beats ChatGPT

GPT-5 isn’t better than Gemini 2.5 Pro in every area, and OpenAI still doesn’t seem to be able to match Google’s image and video generation. That’s reflected in LMArena’s text-to-image, text-to-video, and image-to-video benchmarks — Google’s Imagen 4 and Veo 3 tools sweep the graphical suite of generative AI tests.

I wanted to test that outcome in the real world, so I fed ChatGPT and Gemini the same prompt: "Generate an image of Johnny Thunderbird holding up a Big East Tournament trophy at Madison Square Garden." Here’s how the images turned out:

Anecdotally, the generated images align with the benchmark results — Google’s video- and image-generation chops are much stronger than OpenAI’s. For starters, Google gave me a generated image in about 10 seconds, and I had to wait nearly two minutes for an image to come back from ChatGPT.

From an accuracy standpoint, Gemini won handily. It knew I was talking about my alma mater’s basketball program from the mascot reference, and correctly recreated Madison Square Garden with a ton of detail and flair. Meanwhile, ChatGPT produced an image with the wrong team, and I’m not convinced the generic background is really MSG; it looks like it could be any basketball court.

So, if you’re the kind of person who likes to switch between LLMs based on your task, perhaps choose GPT-5 for writing or coding and Gemini for image or video generation.

GPT-5 is great, but GPT-4o just won't go away

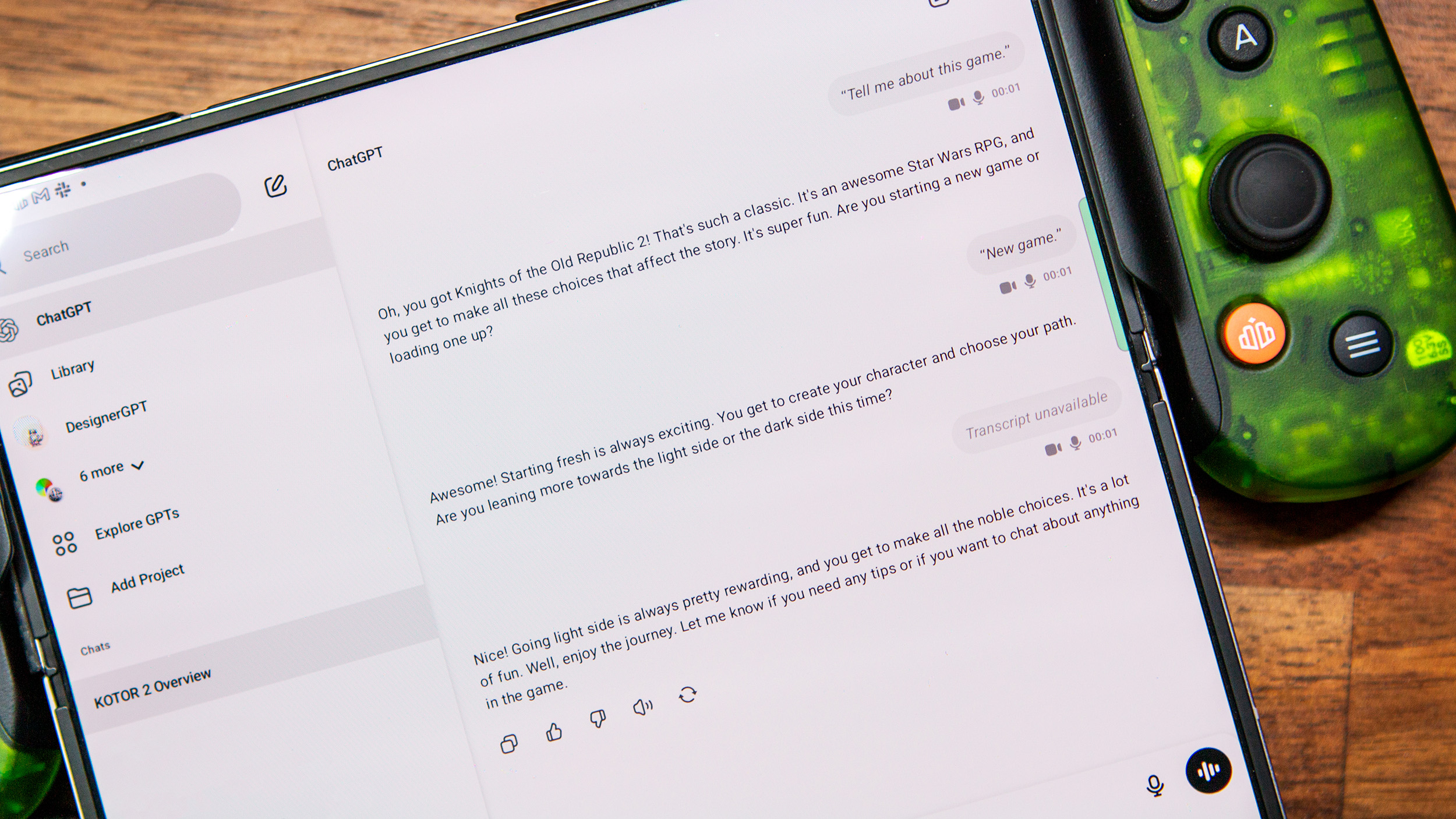

A wrinkle in OpenAI’s GPT-5 rollout is user attachment to older models. GPT-5 was more than two years in the making, as OpenAI reportedly struggled to make an AI model worthy of the title. As it turns out, users didn’t want one. OpenAI is bringing back GPT-4o — a model that was supposed to be sunset in favor of GPT-5 — because of user backlash (via Tom’s Guide).

It might seem silly for those of us who touch grass, but ChatGPT users have seemingly created parasocial relationships with OpenAI models. They’d rather use the AI model they “know” over GPT-5, even if the newer one is better in every way.

I think you can extrapolate this premise to the broader AI race. In some ways, it really doesn’t matter whether Google, OpenAI, Anthropic, or DeepSeek has the best-performing model. People are going to stick to the models they like and are familiar with, and if that’s the case, OpenAI’s lead in this space may be insurmountable for the competition.

Brady is a tech journalist for Android Central, with a focus on news, phones, tablets, audio, wearables, and software. He has spent the last three years reporting and commenting on all things related to consumer technology for various publications. Brady graduated from St. John's University with a bachelor's degree in journalism. His work has been published in XDA, Android Police, Tech Advisor, iMore, Screen Rant, and Android Headlines. When he isn't experimenting with the latest tech, you can find Brady running or watching Big East basketball.
