The Google-Samsung AI glasses and Meta Ray-Bans sound remarkably similar, but Android XR could have three key advantages that will make it worth waiting for

Meta AI glasses have a huge lead in hardware and consumer awareness, but Gemini, photography, and wearables could give Google and Samsung an edge.

Last week, I tested Google's Android XR prototypes, the Gemini-powered foundation for Samsung's 2026 AI glasses. And while Google director Juston Payne said these AI glasses will "help bring this category into existence," Meta brought smart glasses and XR into the mainstream first. So it's fair to ask how Google and Samsung will break Meta's stranglehold on AI glasses.

We don't know how Warby Parker and Gentle Monster will design these glasses, nor do we have camera or battery specs yet. But the Android XR UI and apps felt polished and consumer-ready in my hour-long demo, so I already have a good sense of what it'll be like to use these glasses in daily life.

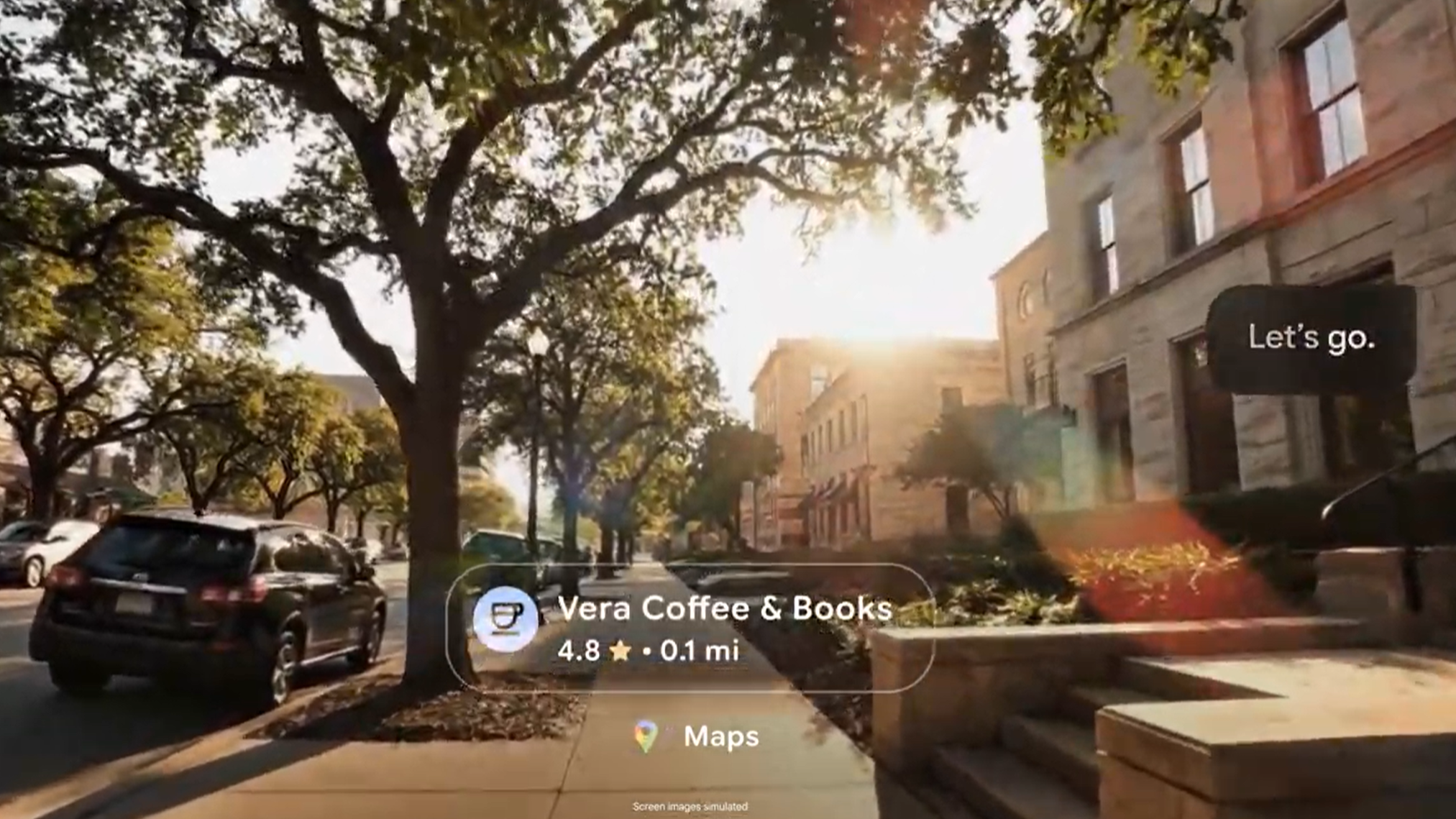

As a result, I expect the display-free Samsung glasses and Ray-Ban Meta glasses to have remarkably similar software, including "live" AI advice and translation. The mono-HUD glasses will, likewise, resemble the Meta Ray-Ban Display glasses, with their petite app widgets, turn-by-turn navigation, and other shared apps.

They're similar enough that people may end up choosing their smart glasses based on Warby Parker, Gentle Monster, or Ray-Ban/Oakley styles, not the AI behind them.

That said, I've already noticed three differences that could help the Android XR smart glasses stand out and give them an advantage over what Meta offers. This doesn't mean these glasses will be better, as Meta has three generations of hardware experience that have paid off so far. But it does mean that long-time Meta glasses wearers should be paying close attention.

Gemini has one key advantage over Meta AI: Apps

I'm not going to get into the Gemini vs. Llama debate. One, we're comparing conversational recognition and multimodal accuracy, which is harder to quantify than judging how well they code or generate images from prompts. Two, both frequently launch new AI models, and either could have improved significantly by 2026.

We've used multimodal Gemini Live on phones to keep plants alive and give home repair advice, so I expect that to carry over well to Samsung's glasses with an always-ready Gemini — though Meta has its own Live AI and handles image recognition well.


What I've specifically noticed about Gemini, while testing it on Wear OS, is how Google has integrated commands into its app library, connecting them so you can pull data from one app and seamlessly apply it to another. For example, you can pull info from Messages or Maps and then create a Keep note from the result.

This interconnectivity will let the HUD display a preview of your Nest Doorbell feed, Google TV controls, a pop-up with a Gmail subject line, and more. And it's not limited to Google apps: thanks to the deep Android integration, any app that works with Gemini should work with the glasses, too. Meta supports the essential phone, messaging, music, and social apps, but Google could offer a full "ecosystem," at least on Android; iOS users could have a more specialized experience.

Google's photography expertise will be vital

When I reviewed the Ray-Ban Meta Gen 2s and Oakley Meta Vanguards, I appreciated the high-res action photos they captured with such petite sensors, as well as how well they stabilized videos, so I appeared to glide forward rather than rock and bounce with every step. A lot of post-processing goes into making this work.

My expectation is that Google and Samsung will handle computational photography at least as well as Meta. They both make some of the best camera phones, specializing in areas such as motion capture, AI-powered zoom, edge detection with targeted blurring, and more.

Of course, Samsung can't fit Galaxy Ultra-quality sensors with zoom into glasses frames; "physics will get in the way," as Payne explained, particularly for low-light photos. But he thinks they can "push up to that level of quality," and I've seen how, for example, the Pixel 9a produced better photos than other mid-range phones despite its smaller, flatter sensors.

Taking photos on Ray-Ban Meta glasses is also challenging without a viewfinder, since you can't tell how close to stand. But during my Android XR glasses demo, Google showed a viewfinder function on a synced Pixel Watch, so you can check whether your photo angle looks right in the moment, then either retake the shot or use Gemini to edit it.

The Android XR-Wear OS connection will be key

The Meta Ray-Ban Display really benefits from having an sEMG band that reads your hand's neural inputs, recognizing gestures without needing to see them on camera. I assumed it would be tough for Google and Samsung to match this feature.

It turns out that Android smartwatches will be compatible with Android XR, and gestures like pinches detected by your watch will trigger actions on your glasses. It may be one reason why Google added gesture support to Pixel Watches this month, so they'd be ready to handle this feature in 2026.

Gestures and the viewfinder are confirmed features, but I expect there will be an Android XR app that can add shortcuts, or an option to display Gemini answers as text on your wrist for the audio-only glasses. I'd also expect an option to hear your live workout stats over the glasses, similar to how the Oakley Vanguards read out Garmin stats.

Basically, because the watch is a full-fledged device rather than a specialized input band, there's more potential for interplay between Android glasses and watches. Until that long-rumored Meta Watch shows up, Android XR will have the wearable edge.

Competition is always better

I'm eagerly anticipating the new Samsung glasses, whatever they're called, next year. I actually wear (non-smart) Warby Parker glasses, and I'm curious whether they'll be able to create designs that look more natural for daily wear — though I don't know how well they'll match Ray-Ban and Oakley for sporty looks.

Ray-Ban and Oakley Meta fans may still stick with the ecosystem and styles they know. What matters is that Meta hasn't faced much smart glasses competition yet. Google and Samsung pushing in to challenge its supremacy gives Meta more impetus to innovate and keep its sales lead.

Michael is Android Central's resident expert on wearables and fitness. Before joining Android Central, he freelanced for years at TechRadar, Wareable, Windows Central, and Digital Trends. Channeling his love of running, he established himself as an expert on fitness watches, testing and reviewing models from Garmin, Fitbit, Samsung, Apple, COROS, Polar, Amazfit, Suunto, and more.
