I tried both of Google's AI glasses and spoke to Android XR's senior product director about the different types of smart glasses we can expect and the challenges developing them — Here's everything I learned

I also spoke with Juston Payne, AR and XR Product Management team leader, about what to expect from Google and Samsung's display-free and HUD glasses.

Before the Android Show: XR Edition showcased Android XR's future, I enjoyed an hour-long private demo, testing three pairs of Android XR glasses: The monocular AI glasses first introduced at I/O 2025, a new binocular HUD prototype, and the XREAL Project Aura XR glasses.

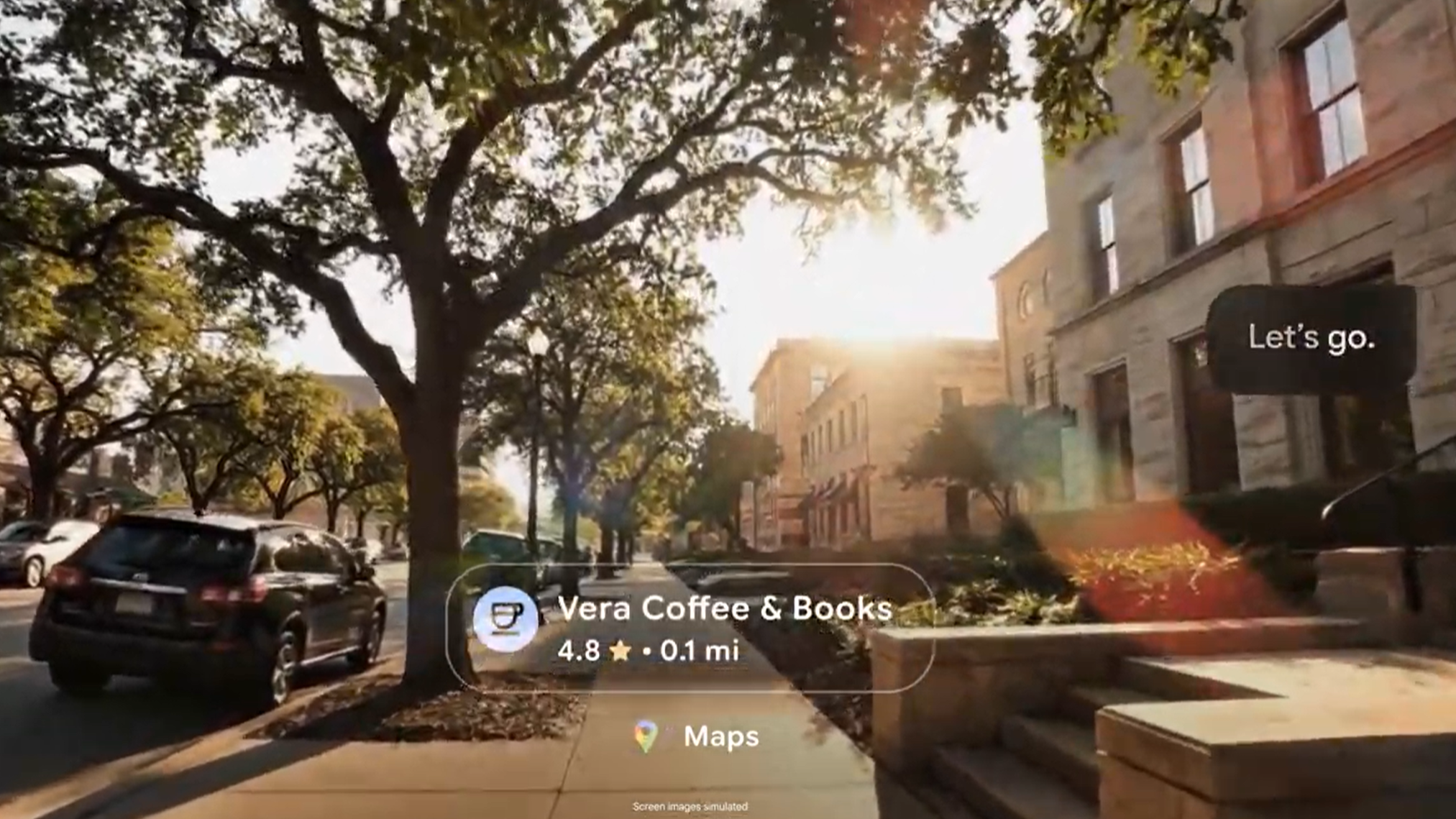

My first Android XR glasses demo at I/O only lasted five minutes, scratching the surface with a few apps. This time, I used these single-HUD glasses for over 30 minutes, testing Gemini, Google Maps and Translate, YouTube Music, Uber, and other glasses-based apps.

Next, Google had me try a new binocular glasses prototype — meaning they use dual displays with a wider FoV and 3D depth capabilities. Then I tested XREAL Project Aura, photographed above, which uses the same UI and pinch gestures as Galaxy XR but with a lighter form factor and 70-degree FoV.

What to expect from Android XR glasses

Google's monocular prototype and Android XR software will serve as the foundation for Samsung's glasses hardware, which will launch next year with designs from Warby Parker and Gentle Monster. "Screen-free" AI glasses will also launch in 2026, relying on speakers, mics, cameras, and Gemini smarts for an audio-led experience. Those will be a serious competitor to Ray-Ban Meta AI glasses.

The two-display version, by contrast, won't arrive until 2027 at the earliest. Juston Payne, Android XR's senior product director, explained that they still "weigh a little bit too much" for consumers, so Google will "wait until it's ready." I certainly felt the pinch on my nose, about on par with Meta Ray-Ban Display glasses.

The audio-only glasses will probably be the most affordable, the thinnest, and the most popular. Payne said that Android XR pulls from years of audio-only tools created for Pixel Buds and Pixel accessibility tools like TalkBack, "leveraging what was already there."

I turned off the glasses HUD with a button tap and could still rely on the Gemini smarts that I'd normally get on my Pixel Watch 4. Payne expects that "much of the usage" of these HUD glasses will be "with the display off," so using an audio-only version "isn't like a painful cut," he reassured me.

I didn't get a chance to photograph the prototypes, but the final versions won't look the same, anyway. What matters more is what I learned, both during the demo and behind the scenes, about what to expect from these new Samsung-made, Google-powered glasses, including how they'll sync with other Google devices and take advantage of Pixel camera expertise.

These smart glasses will work with iOS, but the Android integration is key

During my glasses prototype demo, they surprised me with the news that the eventual Samsung release will work on both Android and iOS. I assumed they'd make the same mistake as with the Galaxy Ring and make it Android-exclusive, but Google's team stressed that iPhone owners will want these enhanced Google app experiences, too.

Most of the features I demoed on Google's AI glasses — image recognition and recipe advice via cameras, automatic live translation, shared camera view during calls, local restaurant recs, turn-by-turn navigation with a minimap, or beamforming to differentiate between my voice and others — are also available on Meta's AI or HUD glasses.

Meta achieved this by combining its own apps and smarts with major partners, enabling its AI commands to trigger basic tools such as starting a playlist or calling a contact. While Google has direct Android system access, it clearly realized it can deliver a similar piecemeal experience on iPhones and vastly expand its potential audience.

Still, the Android experience should be the definitive one, with direct access to all Play Store apps rather than access limited to the Google Workspace suite, Gemini, and partner apps. The glasses will also sync with other Android accessories.

When I took a photo with the glasses, a preview appeared on a Google rep's Pixel Watch. He said that other Wear OS watches, like Galaxy Watches, will integrate with Android XR so that gestures trigger certain actions — similar to how Meta uses its sEMG band for gesture controls.

Plus, on Android, the developer tools built for phones will carry over to the glasses. For example, if an app supports Live Update notifications, devs won't have to "do anything else" for glasses to show the same rich notifications, Payne says.

It's up to developers to decide whether to take advantage of the Android XR SDK and APIs to do more. Uber, for example, was "excited" by the Google Maps demo this May and decided to create a similar experience. During my demo, Google simulated a pick-up event; I could see an airport map to the pick-up zone by looking down, with a normal widget of my driver's details when looking ahead.

The main challenges that Google had to solve

I don't know what the Warby Parker and Gentle Monster designs will look like, but Payne promises that these Samsung Glasses will be "eyewear first," with both prescriptions and "sun tints" available, as well as "stylistic variety" so anyone can find a pair that matches their "preferences."

But their primary strategy to keep these glasses comfortable and stylish, according to Payne, is to "offload" as much computation as possible to the phone, enabling smaller batteries and thinner frames.

That's why they're not selling the two-display glasses in 2026; while they're "nowhere near as heavy as 70 or 80 grams," they're still above the "comfort threshold" that consumers will accept. But Payne seems to think they'll solve this hardware issue soon. It'll be exciting when they do, as weight aside, this prototype was more pleasurable to use, with a wider, centered field and depth added to certain apps — like 3D buildings in Google Maps.

Another smart glasses challenge that Google and Samsung will have to solve is camera quality. Meta glasses can capture high-resolution photos and stabilize videos, but they have limitations, especially in low-light photography, framing, and zoom.

Payne says Samsung is responsible for the cameras in the eventual consumer release, but it's a "tight partnership" with Google handling the post-processing and AI image-editing side of things. So I asked Payne what to expect.

Payne acknowledged readily that "physics will get in the way" of giving these glasses the same camera quality as a Pixel 10, because frames can't fit large sensors, but he's confident that Google's "computational photography investments" will help the glasses "push up to that level of quality." I also assume that tools like Magic Editor will help you remove the excess details around your subject.

On that note, when I told Payne that my current AI glasses don't always know which object in front of me I'm asking about, he promised that Gemini is good at "inferring" the subject, but suggested people can "point at" or hold something, and Gemini will use that context when answering.

XREAL Project Aura matters as much as Galaxy XR for Android XR's future

Despite a decade of Google Glass, VR headsets, and AR prototypes, Payne says that it's still "early days for XR overall," with 2026 as a "big year" because the upcoming AI and HUD glasses will be the first smart glasses with an "app ecosystem."

But if Android XR is to become the 'next large computing platform,' it can't just be the Samsung & Google show. Google will need it to be a proper open system, with more partners. And that starts with XREAL Project Aura becoming a success story.

When I tested XREAL Project Aura, it had the same onboarding experience as Galaxy XR, with an identical pinching tutorial and the same apps and Gemini integration. The performance was seamless, the FoV was much wider than on other smart glasses, and it still has the upside of syncing with a Switch or Steam Deck for gaming.

These glasses certainly have the potential to find an audience, but what happens after launch? Will Project Aura receive Android support and software updates at the same cadence as Galaxy XR? Or will it lag behind as Google prioritizes its biggest partner? We've seen this in recent years with Wear OS, as long-term partners like Fossil and Mobvoi have fallen behind and given up on smartwatches.

Hopefully, Android XR sticks to a more collaborative and supportive path, growing the smart glasses category even further.

Michael is Android Central's resident expert on wearables and fitness. Before joining Android Central, he freelanced for years at Techradar, Wareable, Windows Central, and Digital Trends. Channeling his love of running, he established himself as an expert on fitness watches, testing and reviewing models from Garmin, Fitbit, Samsung, Apple, COROS, Polar, Amazfit, Suunto, and more.
