Ray-Ban Meta tips and tricks: Key AI features for Ray-Ban, Oakley, and Meta Display glasses

Meta smart glasses add new features, connected apps, and settings regularly; we've broken down every cool trick new owners should know.

Meta's Ray-Ban and Oakley glasses are on version 18 of the operating system; Meta regularly updates them with new features, making it challenging to keep track of every tool they offer. This Ray-Ban Meta glasses guide will run through the key features, settings, tips, and tricks you should know!

While we'll focus mainly on the Ray-Ban Meta Gen 2 and Oakley Meta HSTN glasses, we'll also touch on the new Meta Ray-Ban Display glasses with their exclusive tricks. Plus, we'll talk about the features that'll launch alongside or after the Oakley Meta Vanguard glasses in late October, such as Garmin integration and saved shortcuts to skip "Hey Meta" commands.

Let's dive into every key Meta smart glasses feature and how to use them, from Live Translation and Live AI to activating connected apps!

Meta AI features and settings

Meta has a convenient FAQ page listing some sample Meta AI commands, including calling or messaging a contact, asking how much battery is left, or going to the "Next" song in your playlist — all fairly standard commands for any AI assistant.

What's unique to these smart glasses is that you can ask Meta AI to "tell me about" something in view, such as a plant or landmark. You can also ask it to summarize an article in front of you, translate a sign into your language, or remember your hotel room number.

When it comes to Meta AI reminders, you can tell Meta to remember where you parked or that you have an appointment on Thursday; any dated or timed reminders will send a push notification to your phone. You can also simply ask Meta AI, "What are my upcoming reminders?" If you enable location tracking, you'll be able to see exactly where you created a reminder, which may help you locate what you're looking for.

You can also set timers using Meta AI, which will appear in the Meta AI app. You can ask the AI for your time remaining and edit it if necessary.

In the Devices tab of the Meta AI app, tap the cog icon next to your glasses, then the Meta AI settings. Under Language and voice, you'll find low, medium, or high-pitched voice options, as well as AI voices for Awkwafina, John Cena, Keegan-Michael Key, and Kristen Bell. You may find these cool or uncanny; I find it more useful to switch the Speaking rate to 1.25X so that I'm not waiting as long to get a response to my questions.

By default, your glasses are always listening for a "Hey Meta" command. If you want to conserve battery life, you can go into your device settings > Meta AI > "Hey Meta" preferences to turn this feature off. You can then summon Meta AI by tapping and holding the touchpad instead.

If you leave "Hey Meta" on, double-check that Respond without "Hey Meta" is toggled on as well. You'll still have to say the wake word once, but after Meta AI answers, it'll listen for a reply for about five seconds. So if you asked about the Lincoln Memorial in front of you, you could follow up with "When did he die?" and have Meta understand who "he" is from context.

Live AI

Say "Hey Meta, start Live AI," and the assistant will activate your mic and camera continuously until you end the session; you can then ask as many questions as you'd like without the "Hey Meta" wake word. It drains your battery quickly — the new Oakley HSTNs and Ray-Ban Gen 2s might last an hour in this mode — but it's very convenient for in-depth conversations.

Meta recommends using it for tasks such as meal preparation, fashion advice, or contextual information about a landmark or museum exhibit, particularly when discussing a single topic in depth or seeking context for multiple visual subjects.

You can say "Pause Live AI" or tap the touchpad to temporarily disable the mic and camera, such as when someone sees the notification light and asks you to stop. Then say, "Hey Meta, resume Live AI" or tap the touchpad again to restart. Remember to say "Stop Live AI" at the end to preserve your battery life.
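If it helps to picture the start, pause, resume, and stop commands as a whole, here's a rough sketch of them as a tiny state machine. This is purely illustrative and not Meta's software: the state names, the `next_state` helper, and the assumption that "Stop Live AI" also works while paused are ours.

```python
from enum import Enum


class LiveAIState(Enum):
    OFF = "off"
    ACTIVE = "active"
    PAUSED = "paused"


# (current state, command) -> next state, following the commands described above.
# Hypothetical model: stopping from the paused state is an assumption.
TRANSITIONS = {
    (LiveAIState.OFF, "start live ai"): LiveAIState.ACTIVE,
    (LiveAIState.ACTIVE, "pause live ai"): LiveAIState.PAUSED,
    (LiveAIState.ACTIVE, "tap"): LiveAIState.PAUSED,
    (LiveAIState.PAUSED, "resume live ai"): LiveAIState.ACTIVE,
    (LiveAIState.PAUSED, "tap"): LiveAIState.ACTIVE,
    (LiveAIState.ACTIVE, "stop live ai"): LiveAIState.OFF,
    (LiveAIState.PAUSED, "stop live ai"): LiveAIState.OFF,
}


def next_state(state, command):
    """Return the next state; unrecognized commands leave the state unchanged."""
    return TRANSITIONS.get((state, command.strip().lower()), state)


if __name__ == "__main__":
    state = LiveAIState.OFF
    for command in ["Start Live AI", "tap", "tap", "Stop Live AI"]:
        state = next_state(state, command)
        print(command, "->", state.value)
```

The main takeaway is that a tap only toggles between active and paused; it's the "Stop Live AI" command described above that ends the session and spares your battery.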

Be My Eyes

One of the coolest spin-offs of the Live AI feature is Be My Eyes. Blind or low-vision owners of Ray-Ban Meta glasses can contact a volunteer and share their camera and mic feed; the volunteer can then essentially see for them and guide them through their environment.

Even if you can't or don't want to rely on someone else's help, you can always ask Meta AI, "What am I looking at?" If you expect to repeat that question often, Live AI can provide continuous support instead.

Meta doesn't want people using these glasses as a dedicated mobility aid, due to the potential for inaccurate information. However, if you activate the Detailed responses feature, Meta AI responses will include more information on the "placement of doorways, people, and objects, as well as quantity of objects," providing low-vision users with the context they need.

Live Translation

Meta glasses can live-translate between English, French, German, Italian, Portuguese, and Spanish, but you have to do some setup first. In the Devices tab, tap the Translate button under your glasses. Then select which language you expect the other person to be speaking, as well as what "You speak." Meta will then download the necessary language pack(s).

Once that's set up, face the person you'll be speaking with and place the phone between the two of you. Then say, "Hey Meta, start live translation." Meta recommends that you speak clearly and at a "modest" pace so that the AI has no trouble translating your words. Whatever you say will be transcribed on your phone, while what the other person says will be spoken aloud over your glasses' speakers.

Connected apps

Once you grant permissions, your Ray-Ban or Oakley Meta glasses can sync with:

- Phone apps (iOS and Android)

- Messaging apps (Apple Messages, Google Messages, Messenger, WhatsApp)

- Social apps (Facebook, Instagram)

- Music/podcast apps (Amazon Music, Apple Music, Audible, Calm, iHeart, Shazam, Spotify)

- Calendar apps (Google Calendar, Outlook)

When using music apps or answering calls, it's a good idea to memorize the touchpad shortcuts. A single tap will pause or restart a song; a double-tap will skip to the next song or answer a phone call; a triple-tap will return to the previous song; a tap-and-hold will reject a call or trigger your music app to start playing if you assign that shortcut to it instead of Meta AI. Swiping up or down will increase or decrease the volume.
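For quick reference, the touchpad shortcuts above can be laid out as a simple lookup table. This is only a sketch for memorizing them, not anything from Meta's software: the gesture and context labels (`"music"`, `"incoming_call"`, `"idle"`, `"any"`) and the `describe` helper are made-up names, and filing the volume swipes under `"any"` is our assumption.

```python
# (gesture, context) -> action, taken from the shortcuts described above.
# All identifiers here are hypothetical labels chosen for illustration.
TOUCHPAD_ACTIONS = {
    ("tap", "music"): "pause or restart the song",
    ("double_tap", "music"): "skip to the next song",
    ("double_tap", "incoming_call"): "answer the call",
    ("triple_tap", "music"): "return to the previous song",
    ("tap_and_hold", "incoming_call"): "reject the call",
    ("tap_and_hold", "idle"): "summon Meta AI, or start your music app if reassigned",
    ("swipe_up", "any"): "increase the volume",
    ("swipe_down", "any"): "decrease the volume",
}


def describe(gesture, context):
    """Look up a gesture in a given context, falling back to context-free entries."""
    for key in ((gesture, context), (gesture, "any")):
        if key in TOUCHPAD_ACTIONS:
            return TOUCHPAD_ACTIONS[key]
    return "no shortcut listed"


if __name__ == "__main__":
    print(describe("double_tap", "incoming_call"))
    print(describe("swipe_up", "music"))
```

Note how the same gesture does different things depending on context: a double-tap skips a song during playback but answers a call when the phone is ringing.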

When it comes to calls and messaging, it's as simple as sharing your contacts with Meta so you can say, "Hey Meta, call Bob" or "send a message to Bob." If you connect multiple apps, you may need to add "on WhatsApp" or "on Messenger" at the end of the command.

Meta unsurprisingly saves its best tools for its own apps, where it can use direct integration instead of basic commands. For example, you can record a voice message (up to one minute), but only through WhatsApp or Messenger. Likewise, you can share your glasses camera feed during a video call, but only by tapping a glasses icon in Messenger, WhatsApp, or Instagram.

When you take photos with your glasses, you can say, "Hey Meta, share my last photo to Facebook/Instagram," or specify that it should go "to my Instagram/Facebook Story."

Meta will continue to add new app connections over time, as well as better commands for specific apps; you can bookmark the Meta AI glasses release notes to see the most recent updates.

Upcoming Vanguard features

Meta has confirmed that the Oakley Meta Vanguard will launch on October 21, featuring a few fitness-focused AI and camera features, and that these features will eventually be available on other Ray-Ban and Oakley glasses.

First, Meta has teamed up with Garmin and Strava to sync your live workout activities with your glasses. You'll be able to ask, "What's my current pace?" and have the glasses deliver that information from your Garmin watch or Strava app. You'll be able to check info without breaking stride to swipe through watch menus or pull your phone out of your pocket.

Second, Meta's autocapture feature will "automatically capture video clips when you hit key distance milestones or ramp up your heart rate, speed, or elevation," so you can collect memories from a race without recording them manually.

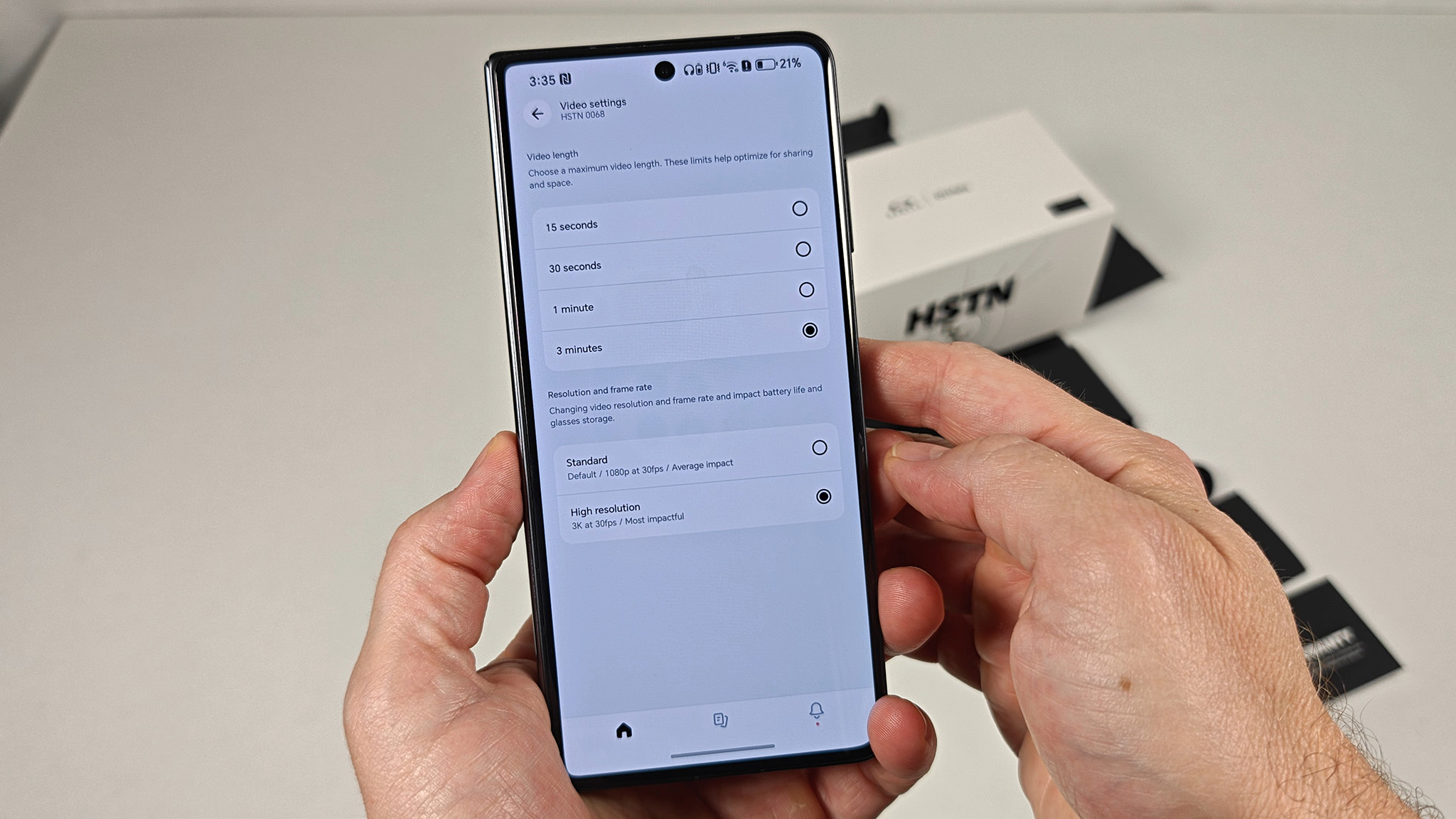

Third, Meta will add new video modes beyond the current 1080p or 3K recording settings. Specifically, you'll be able to create Slow Motion videos for dramatic extreme sports moments or Hyperlapse videos for extended tours of your activity route. The Vanguard glasses will have a second Action button that you can use to set up your video shortcut of choice.

Meta also told us that it'll create new single-word shortcuts sometime before the end of 2025. For example, instead of "Hey Meta, capture a photo," you'll simply say "Photo," and the always-listening assistant will do the rest. Meta will choose which shortcuts work, but triggering actions more quickly will still be welcome.

Meta Ray-Ban Display features

The Meta Ray-Ban Display glasses build on the smart glasses template by adding a built-in display. Most of their features are comparable, but with upgrades enabled by the HUD. For example, you can see Meta AI responses in addition to hearing them, including images or (if relevant) step-by-step instructions in your vision, which you can then swipe through with Meta Neural Band gesture controls.

Taking photos with normal Ray-Ban/Oakley glasses is challenging because you don't know how close you need to be. The Display glasses solve this with a viewfinder previewing your shot, with the ability to zoom in or out by twisting your fingers. Plus, if you're into generative AI silliness, you can look at your finished shot and restyle it based on a text prompt before you upload it to social media.

If you use Meta AI to look for something nearby, the Display glasses support pedestrian navigation to guide you to your destination, though only in "select cities" to start.

Meta smart glasses can show your perspective in a video call, but only the Display glasses can have it both ways, showing the person you're calling in your vision. You also get a full view of other apps, such as your Instagram feed, for scrolling through videos without it being visible to anyone else in the room.

Likewise, you can skip through a music playlist on smart glasses, but only the Display glasses will show the details and album artwork, so there's no suspense about what's coming next.

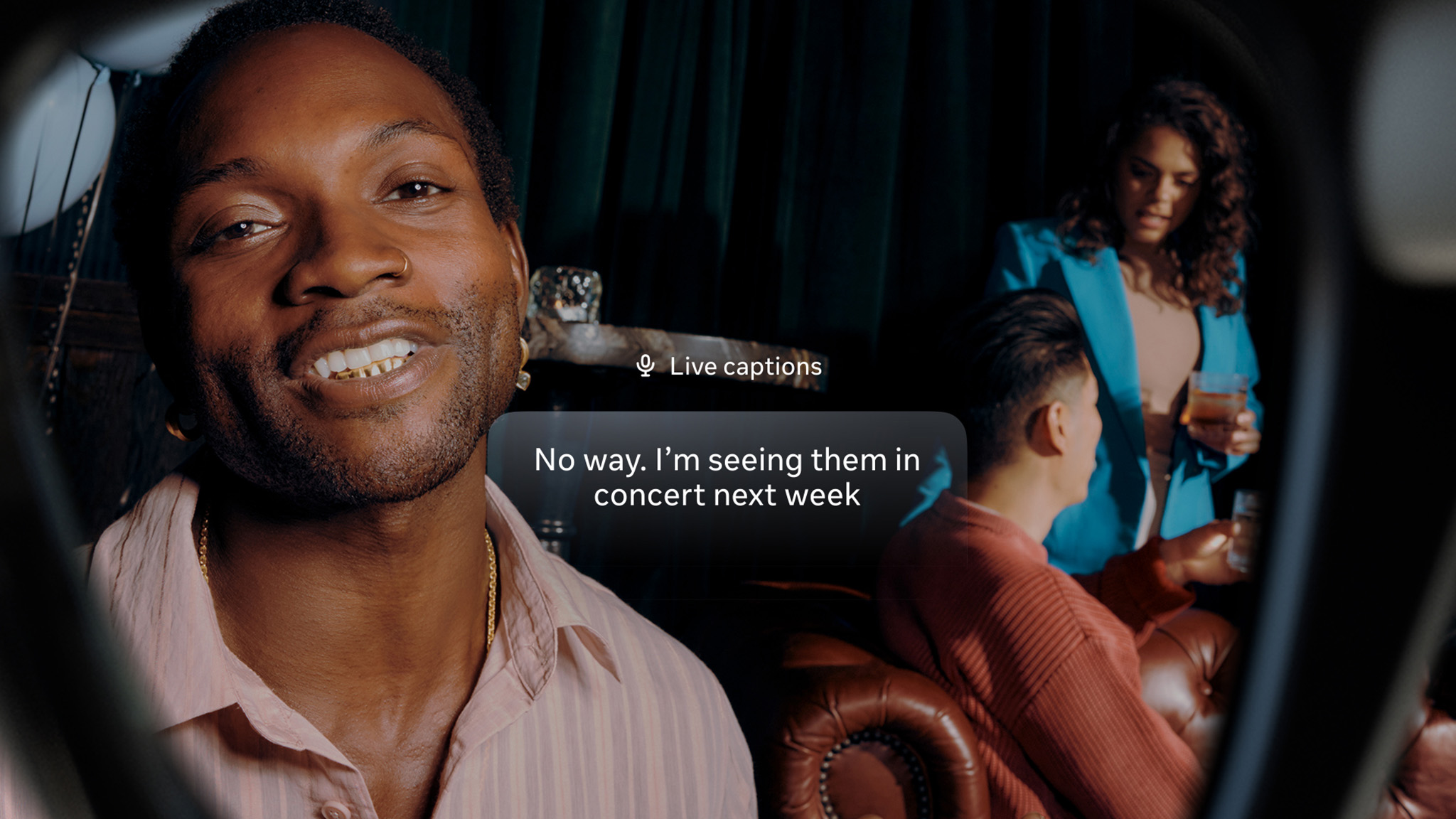

One of the best Meta Ray-Ban Display features is Live Captions, which transcribes in real time what the person in front of you is saying. The glasses' multi-array mics pinpoint who you're looking at so that any other conversations around you don't mess up the captions; if you turn to look at someone else, the text will switch to whatever they're saying.

The glasses will also enhance Live Translations so that you see people's translated responses in text form, as well as audibly.

Most Meta Ray-Ban Display glasses features are controlled by simple gestures or voice commands. One feature, currently in beta and coming to them soon, is the ability to write words on a desk or your thigh and have them transcribed for a message or command; this will allow you to use the glasses silently and subtly during a meeting.

Michael is Android Central's resident expert on wearables and fitness. Before joining Android Central, he freelanced for years at Techradar, Wareable, Windows Central, and Digital Trends. Channeling his love of running, he established himself as an expert on fitness watches, testing and reviewing models from Garmin, Fitbit, Samsung, Apple, COROS, Polar, Amazfit, Suunto, and more.