Google Search goes "Live" with this new feature that we didn't know we needed

Live Search brings hands-free and instant access to Gemini's multimodal features.

What you need to know

- Google's Live Search integrates real-time AI conversations for enhanced user interaction

- Users can now share camera feeds to receive instant answers and insights

- Available on Android and iOS in the U.S. since September 24, bringing versatile assistance to users

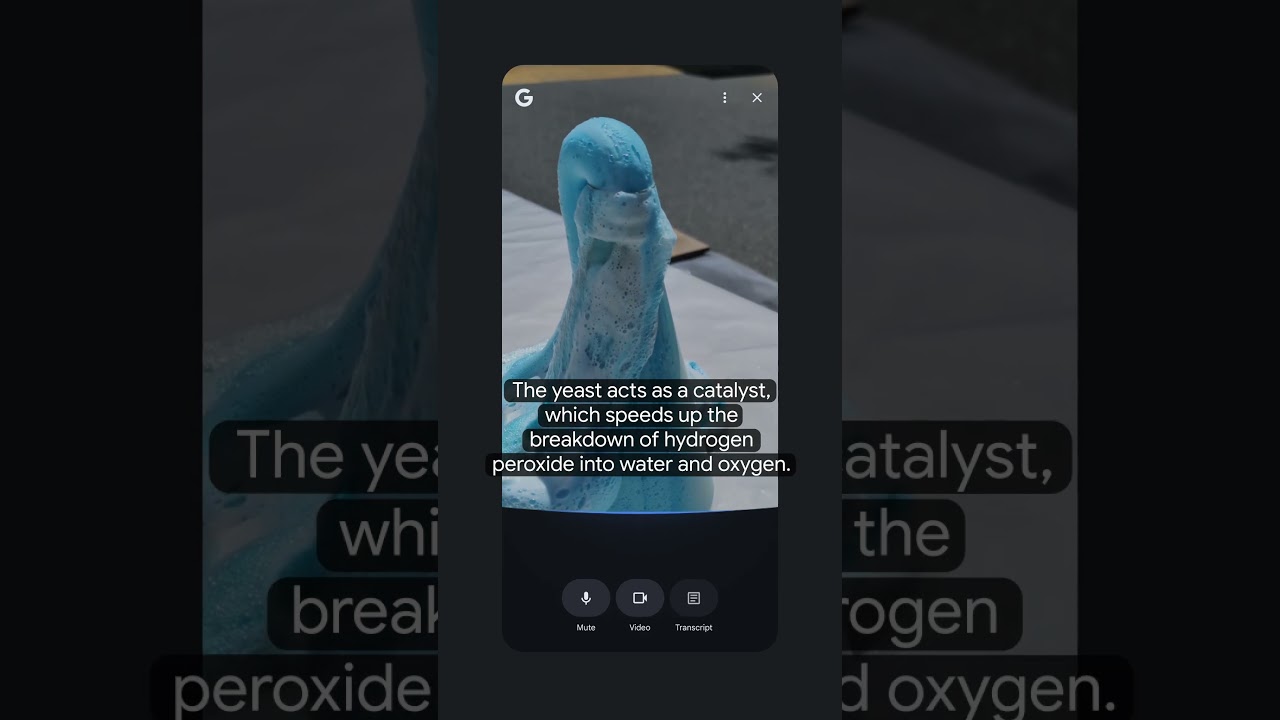

Google announced that it's bringing Project Astra's capabilities to Google Search, helping users get real-time answers via Live Search within AI Mode. Think of it as an upgrade to the current Google Lens, and Google's way of pushing Gemini's multimodal capabilities onto more platforms.

With "Live Search," users can have real-time, interactive conversations with Google Search in AI Mode, much like speaking to the AI chatbot on Gemini Live. The feature lets users share their phone's camera feed, and Search responds to their questions about it in real time. Since the feature is tied to AI Mode, it also provides links for more intensive research.

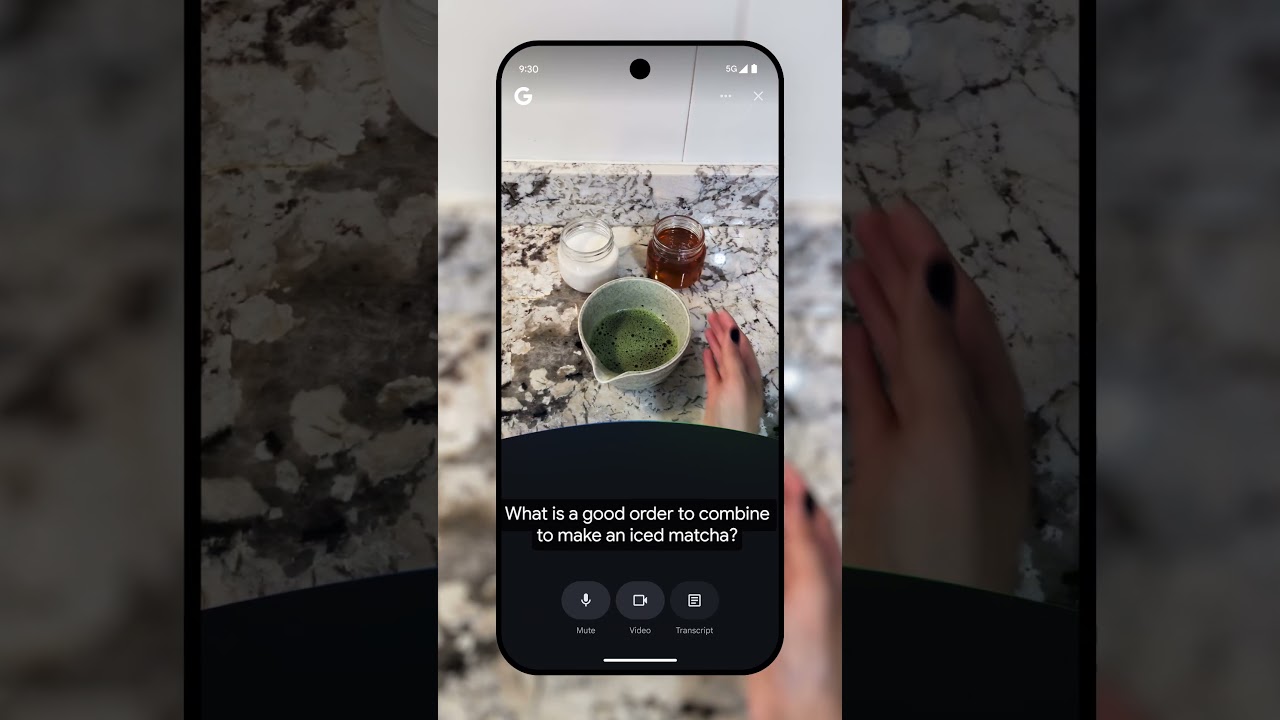

For instance, if you'd like to know how to make a cup of matcha, all you have to do is open the Google app (Android and iOS) and tap the new "Live" icon under the search bar. Then point the camera at the equipment in your matcha set and ask what each tool is used for. You could even ask follow-up questions about matcha's history or its rise to fame as a beverage.

It can also come in handy when you're on vacation and want to have a hands-free conversation about the best spots to explore around the neighborhood. You can even use the Live option on the go to translate or get more information on anything you see. It's practically a personal assistant in your pocket that can help you with quick fixes or with an assignment.

Google says that users can start asking questions out loud and enable video input to share visual context from their camera feed. This feature extends into Google Lens as well. Instead of taking a picture and waiting for Google Lens to do its job, you can now tap the Live option within Lens to have an instant back-and-forth conversation about whatever is in front of you.

The Live Search option is available to all Android and iOS users in the U.S. starting Sept. 24. Accessing this feature appears significantly easier within the Google app itself, as opposed to navigating to Gemini, then selecting Gemini Live, and finally activating your camera.


Nandika Ravi is an Editor for Android Central. Based in Toronto, after rocking the news scene as a Multimedia Reporter and Editor at Rogers Sports and Media, she now brings her expertise into the Tech ecosystem. When not breaking tech news, you can catch her sipping coffee at cozy cafes, exploring new trails with her boxer dog, or leveling up in the gaming universe.
