Tech Talk: What phone hardware does it take for good, fast AI?

Let's talk about tech.


Welcome to Tech Talk, a weekly column about the things we use and how they work. We try to keep it simple here so everyone can understand how and why the gadget in your hand does what it does.

Things may become a little technical at times, as that's the nature of technology — it can be complex and intricate. Together we can break it all down and make it accessible, though!


You might not care how any of this stuff happens, and that's OK, too. Your tech gadgets are personal and should be fun. You never know though, you might just learn something ...

AI needs special hardware

You may be familiar with the components that make a phone run, such as the CPU and GPU. When it comes to AI, though, specialized hardware is needed for those features to run quickly and efficiently.

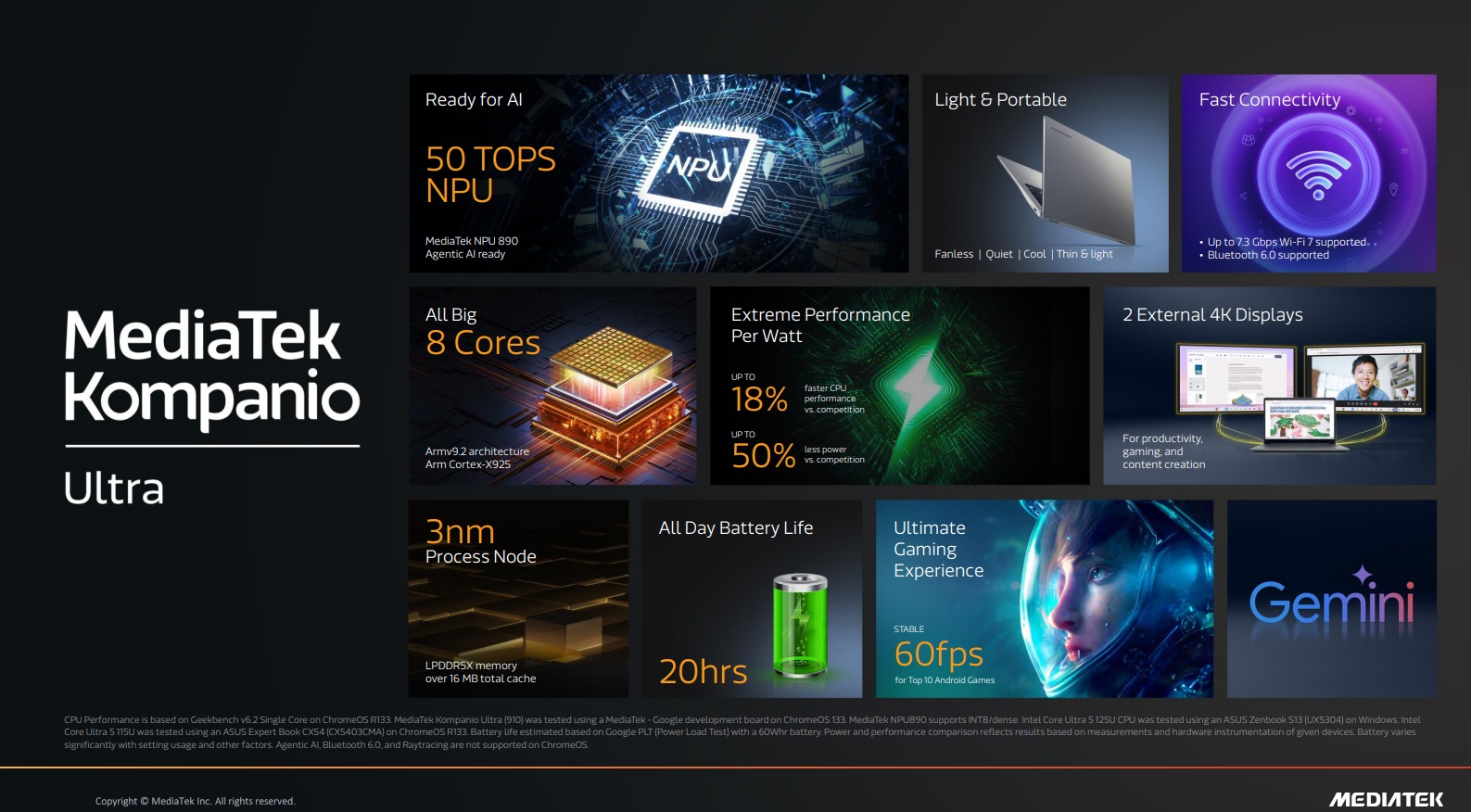

The hardware that speeds up AI processing on a phone is centered around a specialized component known as the NPU (Neural Processing Unit). This component is designed specifically for the math and data handling required by artificial intelligence and machine learning models, which makes it significantly different from the phone's general-purpose hardware, such as the CPU and GPU.

It works alongside the "normal" phone hardware to turn lines of code into something that appears to think or be intelligent, but its design is very different, as this comparison shows:

| Feature | NPU | Standard CPU or GPU |
|---|---|---|
| Primary function | Optimized for neural network computation, machine learning, and deep learning tasks such as matrix multiplications and convolutions. | CPU: Handles general-purpose, sequential tasks. GPU: Handles graphics rendering and massive, but simple, parallel computations. |
| Architecture | Built for massively parallel processing of low-precision 8-bit or 16-bit arithmetic. | CPU: Optimized for complex instructions at high clock speeds with low latency. GPU: Optimized for the 32-bit floating-point math common in graphics. |
| Power efficiency | Extremely power-efficient for AI tasks, allowing complex AI (like real-time translation or image generation) to run longer on battery. | CPU/GPU: Can run AI tasks, but are significantly slower and less power-efficient than an NPU for the same task, draining the battery faster while taking longer to finish. |
| Performance measurement | TOPS (Tera Operations Per Second, i.e., trillions of operations per second). Higher TOPS means faster AI processing. | Clock speed in gigahertz (GHz). Higher is faster, but not necessarily better. |
| Integration | Usually an integrated core within the main SoC (System-on-a-Chip) alongside the CPU and GPU, though not every chip includes one. | Core components of the SoC, necessary for all computing tasks. |
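To make the "low-precision 8-bit arithmetic" row above a little more concrete, here is a minimal Python sketch of the general idea. It assumes simple symmetric per-tensor quantization, which is only one of several schemes in use; real NPUs and their software toolchains each handle this their own way. The decimal numbers are squeezed into whole numbers between -127 and 127, the multiply-and-add work happens on those small integers, and a final multiplication by the two scale factors turns the result back into a real number. The weights and inputs are made-up values.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(a_q, a_scale, b_q, b_scale):
    # Multiply the small integers and add them up. Hardware would keep this
    # running total in a wider (e.g. 32-bit) register so it cannot overflow.
    acc = sum(x * y for x, y in zip(a_q, b_q))
    # One floating-point multiply converts the integer total back to real units.
    return acc * a_scale * b_scale


# Made-up example values standing in for model weights and input data.
weights = [0.12, -0.50, 0.33, 0.91]
inputs = [1.5, 0.25, -0.75, 2.0]

w_q, w_s = quantize_int8(weights)
x_q, x_s = quantize_int8(inputs)

exact = sum(w * x for w, x in zip(weights, inputs))
approx = int8_dot(w_q, w_s, x_q, x_s)
print(f"full precision: {exact:.4f}   int8: {approx:.4f}")
```

The 8-bit answer lands very close to the full-precision one, and small integer multipliers are much cheaper in chip area and power than 32-bit floating-point ones, which is why NPUs lean on them.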

The NPU is a dedicated AI processor. Without one, AI processing on an Android phone (or any device, for that matter) is significantly slower, uses more battery, and often requires an internet connection and a remote AI server to function. Chip makers have their own brand of NPU: Google has the TPU (Tensor Processing Unit) in its Tensor chips, Apple has the Neural Engine, Qualcomm has the Hexagon NPU, and so on. They all serve the same purpose, each with its own design.


However, you also need standard phone hardware to bring everything together and enable faster and more efficient AI processing.

The CPU manages and coordinates the entire system. For AI, it handles the initial setup of the data pipeline, and for some smaller, simpler models, it may execute the AI task itself, albeit less efficiently than the NPU.

The GPU can accelerate some AI tasks, especially those related to image and video processing, as its parallel architecture is well-suited for repetitive, simple calculations. In a heterogeneous architecture (utilizing multiple processor components on a single chip), the system can select the most suitable component (NPU, GPU, or CPU) for a specific AI workload.
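The heterogeneous idea can be sketched as a simple decision function. This is a hypothetical policy written in Python purely for illustration: the `Workload` fields and the `pick_processor` rules are assumptions, not how any particular chip maker's software decides. Real phones make this choice inside vendor drivers and AI frameworks, using far more detail about each model and the state of the device.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    is_neural_network: bool     # built from matrix math an NPU can accelerate
    npu_supports_all_ops: bool  # every step maps onto operations the NPU offers
    is_image_or_video: bool     # pixel-style parallel work that suits a GPU
    is_small: bool              # tiny enough that handing it off isn't worth it


def pick_processor(w: Workload) -> str:
    """Hypothetical policy: send each job to the most suitable unit that can run it."""
    if w.is_small:
        return "CPU"  # small, simple models can just run on the CPU
    if w.is_neural_network and w.npu_supports_all_ops:
        return "NPU"  # fastest and most power-efficient for supported AI math
    if w.is_neural_network or w.is_image_or_video:
        return "GPU"  # parallel fallback for image work or ops the NPU lacks
    return "CPU"      # general-purpose catch-all


jobs = [
    Workload("live translation", is_neural_network=True, npu_supports_all_ops=True,
             is_image_or_video=False, is_small=False),
    Workload("photo filter with a custom layer", is_neural_network=True,
             npu_supports_all_ops=False, is_image_or_video=True, is_small=False),
    Workload("wake-word check", is_neural_network=True, npu_supports_all_ops=True,
             is_image_or_video=False, is_small=True),
]
for job in jobs:
    print(job.name, "->", pick_processor(job))
```

The order of the checks is the design choice here: the NPU wins whenever it can run the whole job, and the GPU and CPU act as fallbacks rather than competitors.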

AI models, especially large language models (LLMs) used for generative AI, require a large amount of fast RAM (Random Access Memory) to store the entire model and the data it's actively processing. Modern flagship phones have increased RAM to support these larger, more capable on-device AI models.
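A quick way to see why RAM matters is to multiply a model's parameter count by the number of bits stored for each parameter. The Python sketch below assumes a hypothetical 3-billion-parameter model and counts the weights only; the data the model is actively processing needs additional memory on top of this.

```python
def model_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of a model's weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


params = 3e9  # hypothetical 3-billion-parameter on-device model
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {model_memory_gb(params, bits):.1f} GB")
```

Shrinking each weight from 16 bits to 4 bits cuts the footprint by a factor of four, which is one reason on-device models are often stored at reduced precision.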

Why this matters

For fast and efficient AI ("good" AI is subjective), what you really need is optimization. Different types of tasks may need slightly different processing paths, and with the right mix of hardware, each task can be handled by the component best suited for it.

Having said that, the NPU plays a critical role, and it's soon going to be a requirement for every high-end phone because of three things:

Speed: NPUs can perform the massive parallel matrix math required by neural networks much faster than a general-purpose processor.

Efficiency: By focusing on the exact operations needed, NPUs use significantly less power, which is critical for a battery-powered mobile device.

Local Processing (Edge AI): The speed and efficiency of the NPU allow complex AI features—like real-time voice translation, advanced computational photography, and generative text/image models—to run entirely on the phone without sending your data to a distant cloud server, enhancing privacy and reducing latency (lag).
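The speed point, and the TOPS rating from the comparison table, come down to simple arithmetic. This Python sketch assumes every multiply-accumulate (MAC) unit completes one MAC per clock cycle, counted as two operations (a multiply plus an add), which is the common convention behind TOPS figures. The MAC counts, clock speeds, job size, and 50% utilization are hypothetical numbers chosen only to show the math.

```python
def peak_tops(mac_units: int, clock_ghz: float, ops_per_mac: int = 2) -> float:
    """Theoretical peak throughput in TOPS (trillions of operations per second).

    Assumes each MAC unit completes one multiply-accumulate per clock cycle,
    counted as ops_per_mac operations.
    """
    ops_per_second = mac_units * ops_per_mac * clock_ghz * 1e9
    return ops_per_second / 1e12


def seconds_for(total_ops: float, tops: float, utilization: float) -> float:
    """Time to finish a job if the hardware sustains only part of its peak rate."""
    return total_ops / (tops * 1e12 * utilization)


# Hypothetical hardware: many slower arithmetic units vs. fewer faster ones.
npu_tops = peak_tops(mac_units=16_384, clock_ghz=1.0)
cpu_tops = peak_tops(mac_units=512, clock_ghz=3.0)

job_ops = 1e12  # hypothetical AI task needing one trillion operations
for label, tops in (("NPU", npu_tops), ("CPU", cpu_tops)):
    t = seconds_for(job_ops, tops, utilization=0.5)
    print(f"{label}: {tops:.1f} TOPS peak, about {t * 1000:.0f} ms for the job")
```

The hypothetical NPU runs at a lower clock than the hypothetical CPU yet finishes the job roughly ten times sooner, because it has far more arithmetic units working in parallel. Real-world speed also depends on memory bandwidth and on how well the software keeps those units busy, so TOPS is a ceiling rather than a guarantee.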

So the next time you use Gemini without connecting to the Google mothership in the cloud because your phone can handle everything itself, know that this special hardware is the reason why.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.
