Tech Talk: How can AI translate text and speech in real-time?

Let's talk about tech.

Welcome to Tech Talk, a weekly column about the things we use and how they work. We try to keep it simple here so everyone can understand how and why the gadget in your hand does what it does.

Things may become a little technical at times, as that's the nature of technology — it can be complex and intricate. Together we can break it all down and make it accessible, though!

You might not care how any of this stuff happens, and that's OK, too. Your tech gadgets are personal and should be fun. You never know though, you might just learn something ...

¿Cómo funciona?

In the mid-1950s, the U.S. government invested in what was called the Georgetown-IBM experiment, which used a computer to translate written Russian sentences into English. Ever since, computer-powered translation has been worked on and improved because it's one of those things that's incredibly useful.

What started as simple Rule-Based Machine Translation (RBMT) has transformed into Large Language Models, which use seemingly endless amounts of data to power generative AI translation on simple consumer electronics like phones. It's come a long way and works very well, but how does it do it?

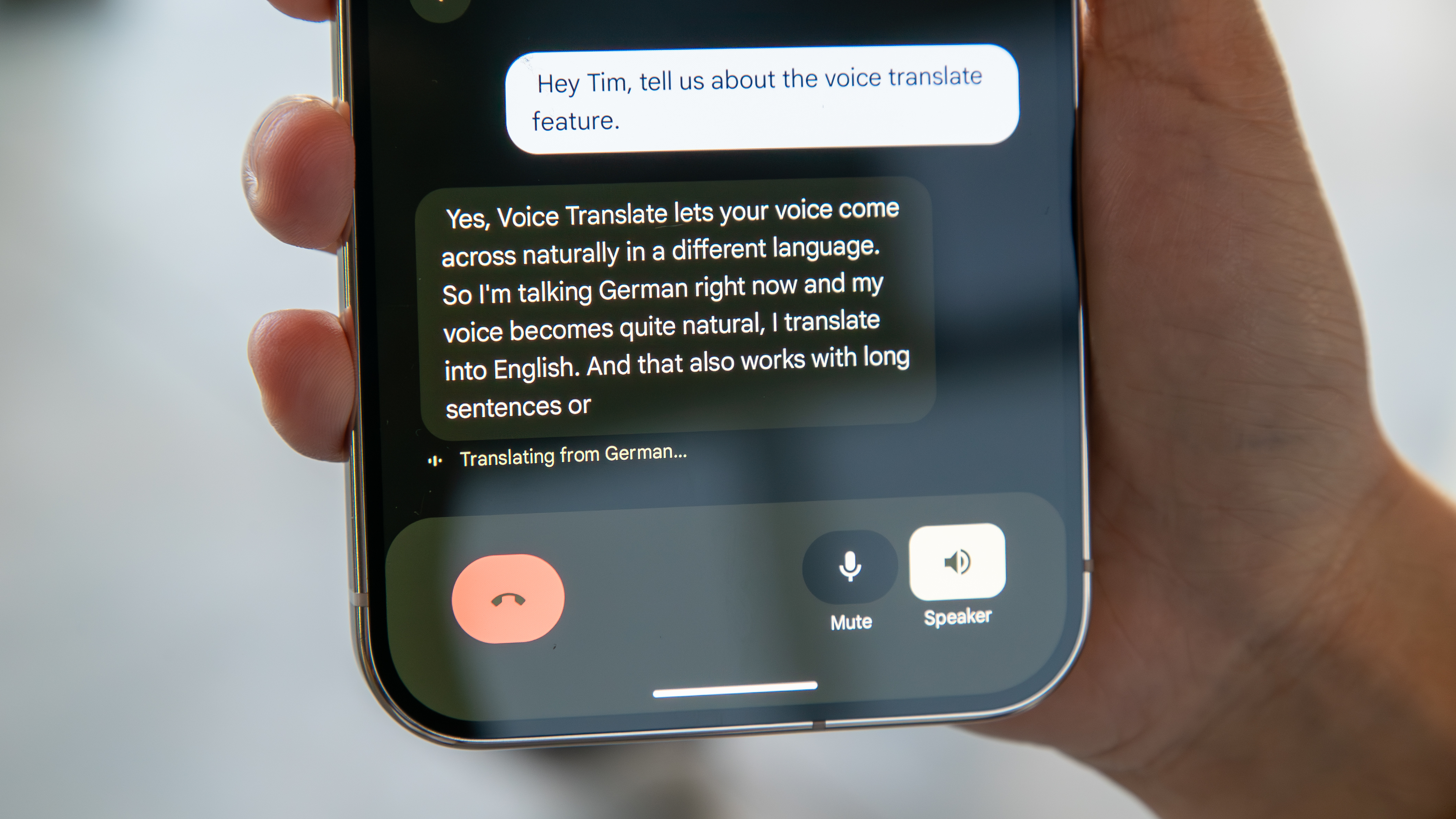

The seemingly instantaneous nature of real-time AI translation belies a complex and sophisticated process, a high-speed relay race with three legs: Automatic Speech Recognition (ASR), Natural Language Processing & Neural Machine Translation (NLP & NMT), and Text-to-Speech (TTS). Looking at each component in turn helps explain how this magic actually works.
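
If you like to see ideas as code, here is a minimal sketch of that relay race in Python. Everything in it is a made-up stand-in: the Audio class, the tiny PHRASEBOOK, and the three stage functions are hypothetical names for illustration, and a real product would call trained ASR, NMT, and TTS models where this sketch uses simple placeholders.

```python
from dataclasses import dataclass

# Toy stand-ins for the three stages. Every name here is hypothetical;
# a real system would call trained ASR, NMT, and TTS models instead.


@dataclass
class Audio:
    """A pretend audio clip; the 'sound' is just carried as plain text."""
    spoken_words: str
    language: str


# A two-word Spanish-to-English phrasebook, only for the demo.
PHRASEBOOK = {"hola": "hello", "mundo": "world"}


def recognize_speech(clip: Audio) -> str:
    """Leg 1 (ASR): turn audio into text."""
    return clip.spoken_words.lower()


def translate_text(text: str) -> str:
    """Leg 2 (NMT): a plain word lookup here; real models use context."""
    return " ".join(PHRASEBOOK.get(word, word) for word in text.split())


def synthesize_speech(text: str, language: str) -> Audio:
    """Leg 3 (TTS): turn the translated text back into audio."""
    return Audio(spoken_words=text, language=language)


def translate_speech(clip: Audio, target_language: str) -> Audio:
    """Run the three legs in order, handing each result to the next."""
    text = recognize_speech(clip)
    translated = translate_text(text)
    return synthesize_speech(translated, target_language)


result = translate_speech(Audio("Hola mundo", "es"), "en")
print(result.spoken_words)  # hello world
```

The point is the shape, not the contents: each leg only needs the output of the one before it, which is why the stages can be improved or swapped out independently.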

Automatic Speech Recognition

It all starts with a voice. AI acts like a very attentive listener, automatically converting spoken words into digital text.

This isn't just a simple transcription service; it's a sophisticated system that filters out background noise, adapts to diverse accents, and can even detect very subtle inflections and emphasis on certain words. Imagine a supercharged speech-to-text app like the old Google Translate that operates in real-time, processing speech in short bursts rather than waiting for you to complete an entire sentence.
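
To picture what "short bursts" means, here is a rough Python sketch that cuts a stream of audio samples into small chunks so each one could be handed to a recognizer as soon as it arrives. The function name stream_chunks, the 16,000 samples-per-second rate, and the 200 ms chunk length are all assumptions picked for illustration, not values any particular app is known to use.

```python
from typing import Iterator, List


def stream_chunks(samples: List[float], chunk_size: int) -> Iterator[List[float]]:
    """Yield consecutive slices of at most chunk_size samples."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, len(samples), chunk_size):
        yield samples[start:start + chunk_size]


# One second of silent placeholder audio, cut into 200 ms chunks.
SAMPLE_RATE = 16_000  # assumed samples per second
audio = [0.0] * SAMPLE_RATE
chunks = list(stream_chunks(audio, chunk_size=SAMPLE_RATE // 5))

print(len(chunks), len(chunks[0]))  # 5 3200
```

Because the function is a generator, a recognizer could start working on the first chunk while the rest of the sentence is still being spoken.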

Natural Language Processing and Neural Machine Translation

With the transcribed text at hand, the system starts a process of deep analysis. It cleans up the text, eliminating filler words ("ums" and "uhs"), adds appropriate punctuation, and, most importantly, interprets the context.
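
The cleanup step is the easiest one to sketch. The Python below is a deliberately naive version, assuming a short hand-picked list of filler words and a "capitalize the start, add a period at the end" rule. The clean_transcript name and the filler pattern are hypothetical, and real systems use trained models for punctuation rather than a rule like this.

```python
import re

# Assumed filler list for the demo: "um", "uh", "erm" (with stretched endings).
FILLERS = re.compile(r"\b(?:um+|uh+|erm+)\b\s*", re.IGNORECASE)


def clean_transcript(raw: str) -> str:
    """Drop filler words, tidy spacing, and add basic punctuation."""
    text = FILLERS.sub("", raw)
    text = re.sub(r"\s+", " ", text).strip()
    if not text:
        return text
    text = text[0].upper() + text[1:]
    if text[-1] not in ".?!":
        text += "."  # naive: every cleaned sentence ends with a period
    return text


print(clean_transcript("um so uh the train leaves at uh nine"))
# So the train leaves at nine.
```

Note what this sketch cannot do: it has no idea whether the speaker asked a question, which is exactly the kind of context the real system has to interpret.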

The system doesn't just engage in a simple word swap; it discerns the actual meaning of a sentence, leveraging the power of Transformer models to capture the nuances of a specific language.

Once it decides it has everything figured out correctly, it then generates a translation in the target language, striving for both accuracy and naturalness.

Text-to-Speech

Finally, the translated text is brought to life and transformed back into spoken words.

Modern AI has made remarkable strides in this area, producing speech that is incredibly natural-sounding, complete with appropriate intonation, rhythm, and even subtle emotional inflections. You probably remember when computer "voices" were robotic and monotone. Today's AI-powered TTS systems specifically strive to emulate the expressiveness of human speech because expressive speech is easier for listeners to follow, even when the translation contains small errors.

All of this unfolds in moments, creating what feels like a seamless, conversational experience. This remarkable feat is made possible by deep learning algorithms, constant adaptation, and ongoing research into better contextual understanding.

But nothing's perfect.

The good and bad

Most people who have tried AI-powered translation applications or services are simply wowed by them. We love them because they're a cost-effective and convenient way to break the barrier of not understanding what we see or hear around us.

With the ability to use devices like smartphones or earbuds to understand what we see and hear, this technology is within the reach of almost everyone. This allows us to overlook many of the obvious issues of using AI to translate what one person is saying into what another person is hearing.

AI struggles with the subtle aspects of human language. All cultures use things like sarcasm or humor, along with specific cultural references that can easily confuse a machine (and sometimes even a human). Phrases like "kick the bucket" or "Skibidi Rizz" simply can't be accurately translated without understanding the meaning and context the speaker had in mind.

There is also still a perceptible amount of lag. We call it real-time translation, and it is very fast and impressive, but using "chunk detection" to guess when a person is done speaking often leads to awkward pauses or even misinterpretations.
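
Here is one simple way that guess could work, sketched in Python: treat the speaker as finished once the audio has been quiet for a set number of frames in a row. The find_end_of_speech name, the loudness numbers, and both thresholds are assumptions made up for this example. Actual products likely use more sophisticated detection.

```python
from typing import List, Optional


def find_end_of_speech(frame_energies: List[float],
                       silence_threshold: float = 0.1,
                       min_silent_frames: int = 3) -> Optional[int]:
    """Return the frame index where a long enough pause is declared, else None."""
    silent_run = 0
    heard_speech = False
    for index, energy in enumerate(frame_energies):
        if energy >= silence_threshold:
            heard_speech = True
            silent_run = 0  # any sound resets the pause counter
        elif heard_speech:
            silent_run += 1
            if silent_run >= min_silent_frames:
                return index
    return None


# Made-up loudness values: a two-frame pause mid-sentence, then a longer one.
energies = [0.0, 0.6, 0.7, 0.05, 0.02, 0.8, 0.5, 0.03, 0.01, 0.02, 0.0]

print(find_end_of_speech(energies))                       # 9
print(find_end_of_speech(energies, min_silent_frames=2))  # 4
```

The second call shows the trade-off. Wait for fewer quiet frames and the system responds faster but cuts the speaker off at a mid-sentence pause; wait for more and every reply arrives after an awkward gap.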

Finally, there is the issue of bias. AI "learns" from the data it collects, which reflects numerous gender, race, and accent biases. This leads to AI doing things like using male pronouns for a doctor while using female pronouns for a nurse. Other bias issues are more apparent; when most of the data collected comes from males aged 18-40, the results can take on a narrow outlook that ignores many cultural aspects, as well as alternative points of view.

AI translation is not a replacement for humans. Experts and users alike generally agree that human translators are still needed for important situations such as legal or medical contexts. A machine can't have the same cultural understanding and empathy as a human, either.

AI translation is best viewed as a powerful tool that augments human capabilities rather than replacing them. Even as the science and theory behind it all improve, there are things AI can never be capable of doing as well as real flesh and bone.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.
