The iPhone 12 Pro now has LiDAR, but Google could do it bigger and better

Get the latest news from Android Central, your trusted companion in the world of Android

Apple has shown the world the iPhone 12 lineup, and like the new iPad Pro, the iPhone 12 Pro models come with a new feature: a LiDAR laser and scanner. The tech can be used for a lot of things, but Apple is mostly using it to bolster the augmented reality (AR) capabilities of iOS and to help the camera focus in low light.

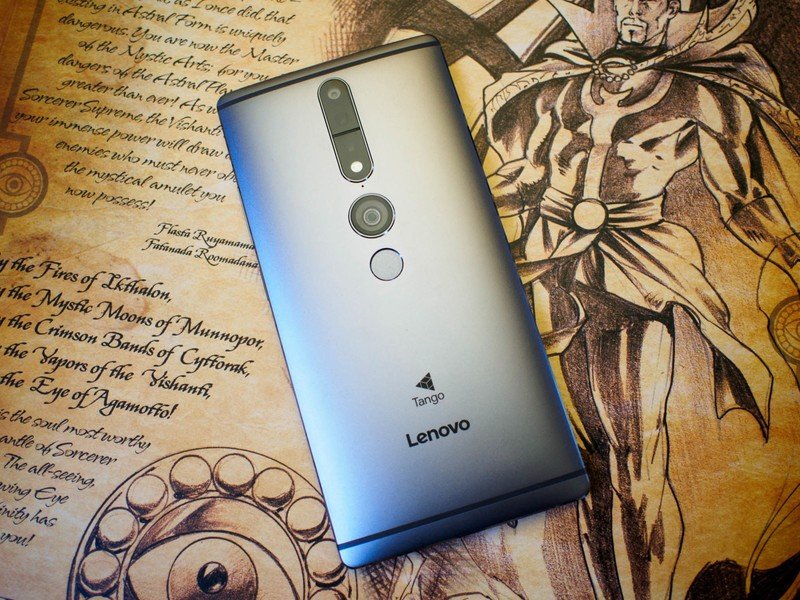

Google is also no stranger to AR, and you might remember hearing about Tango — Google's method to build an AR powerhouse for mobile. Tango used special sensors to create a pretty good AR experience, but the project was shuttered once ARCore helped us get as much AR as we wanted with just regular cameras.

We've also seen Android phones that use a time-of-flight (ToF) sensor to improve focus in all lighting conditions. ToF is a generic term for sensors that measure how long it takes projected light to bounce back; some simple math turns that time into a precise distance. Better software and machine learning have mostly done away with ToF sensors, too.
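
The "simple math" is just the speed of light multiplied by the round-trip time, halved because the light travels out and back. Here is a minimal Python sketch of that idea; the function names are made up for illustration, and real sensors add calibration and often measure a phase shift rather than raw pulse timing.

```python
# Speed of light in a vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of a light pulse.

    The light goes out and comes back, so the one-way distance is half of
    (speed of light x elapsed time).
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


def round_trip_s_for(distance_m: float) -> float:
    """Inverse: how long a pulse takes to return from a given distance."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A pulse that returns after 10 nanoseconds hit something about 1.5 m away.
    print(f"{tof_distance_m(10e-9):.3f} m")
    # Telling apart targets 1 cm apart needs timing precision in picoseconds.
    print(f"{round_trip_s_for(0.01) * 1e12:.1f} ps")
```

That second number is the hard part: light covers a centimeter and back in roughly 67 picoseconds, which is why dedicated timing hardware is needed.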

Apple has done the reverse and is bringing special sensing equipment back on the hardware side. Should Google do the same?

What is LiDAR?

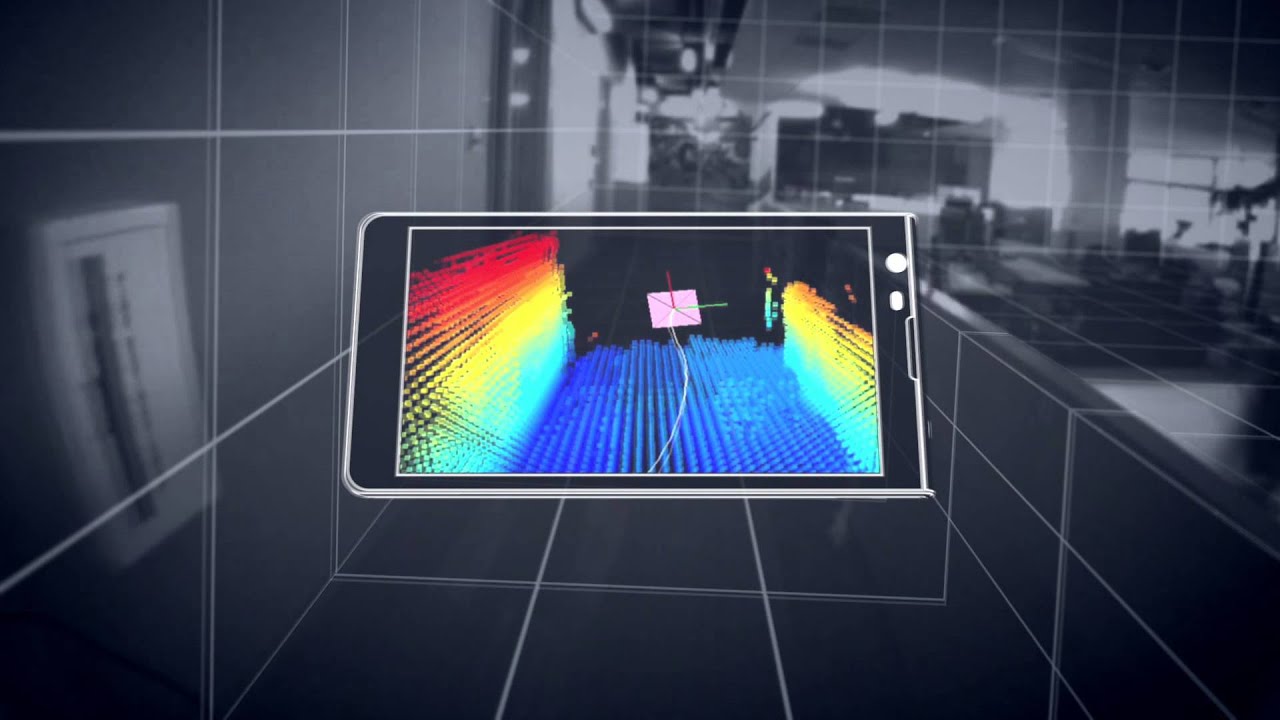

LiDAR stands for Light Detection and Ranging and is a way to create a three-dimensional map of whatever it's focused on.

A LiDAR system consists of a laser and a receiver: the laser emits pulsed light, and the receiver measures the time it takes for the light to bounce back. It's not a new technology. Your robot vacuum probably uses it, and NOAA has used it to recreate and model the surface of the earth for a while now, though Apple isn't doing anything quite so grand.
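
To see how timed pulses become a three-dimensional map, pair each return's distance with the direction the beam was fired in. The Python sketch below assumes a simple scanner that reports an azimuth and elevation angle for every pulse; the `PulseReturn` type and function names are hypothetical, and this is not how Apple's sensor is actually implemented.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


@dataclass
class PulseReturn:
    """One laser pulse: its round-trip time and the direction it was fired in."""
    round_trip_s: float
    azimuth_deg: float    # left/right angle of the beam
    elevation_deg: float  # up/down angle of the beam


def to_point(pulse: PulseReturn) -> Tuple[float, float, float]:
    """Convert one return into an (x, y, z) point in meters, with the sensor
    at the origin and the x axis pointing straight ahead."""
    distance = SPEED_OF_LIGHT_M_PER_S * pulse.round_trip_s / 2
    az = math.radians(pulse.azimuth_deg)
    el = math.radians(pulse.elevation_deg)
    # Standard spherical-to-Cartesian conversion.
    x = distance * math.cos(el) * math.cos(az)
    y = distance * math.cos(el) * math.sin(az)
    z = distance * math.sin(el)
    return (x, y, z)


def build_point_cloud(pulses: List[PulseReturn]) -> List[Tuple[float, float, float]]:
    """A sweep of pulses becomes a cloud of 3D points describing the scene."""
    return [to_point(p) for p in pulses]


if __name__ == "__main__":
    sweep = [
        PulseReturn(round_trip_s=10e-9, azimuth_deg=0.0, elevation_deg=0.0),
        PulseReturn(round_trip_s=20e-9, azimuth_deg=90.0, elevation_deg=0.0),
    ]
    for point in build_point_cloud(sweep):
        print(tuple(round(c, 3) for c in point))
```

Fire enough pulses across the field of view and the resulting point cloud is the "image" that AR apps can use to place objects on real surfaces.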

On the 2020 iPad Pro, LiDAR is used to build an "image" of what the camera sees, so apps that have an AR component can use it to add animations and static images to the screen in exactly the right place.

Yeah, that looks cool. It's also something that could probably be done without using any special sensors, though Apple seems to think they are needed. It's tough to argue with the engineers who designed the new iPad, so I'll go with the idea that the LiDAR sensor makes things better.

Project Tango and ARCore

Google introduced Project Tango in 2014 as a side project from the ATAP team, and it was equally cool. It came to consumer devices from ASUS and Lenovo, and it worked as well as it did in the lab: a Tango device like the Lenovo Phab 2 Pro could map its surroundings and store the data so extras could be added by AR developers.

In 2017, Google shuttered Project Tango in favor of ARCore. ARCore debuted on the Pixel 2, where it was demoed with new stickers and animations in the Google Camera app, and plenty of apps in Google Play now use it.

Why Google did the reverse of Apple here, starting with extra hardware and then working to eliminate the need for it, is anyone's guess. Google might have found a cheaper approach that was still good enough, or maybe adoption wasn't strong enough to justify building devices with expensive sensors. Either way, Tango is gone, ARCore is the replacement, and it works reasonably well.

Android phones have better cameras

You'll still find extra sensors alongside the rest of the hardware in every phone, but for the reasons described above, most companies aren't doing ToF calculations with a dedicated sensor. Instead, advancements in on-device machine learning have given some of the best Android phones, like Google's Pixel, Samsung's Galaxy Note 20 Ultra, and Huawei's Mate 30 Pro, cameras that are better than what Apple could offer.

Apple does everything very slowly and steadily. The idea to use LiDAR to improve Apple's AR platform or incorporate it into the camera isn't something the company dreamed up over tacos last week. After a lot of time and money, Apple decided that the inclusion of LiDAR could help turn the iPhone camera from "one of the best" to "one of the very best" and that's important to the company, as it should be.

Do the sensors need to come back to Android?

That's the million-dollar question, isn't it? Apple did AR using just the standard cameras for a while, and just because the new iPhone and iPad Pro have a LiDAR sensor package doesn't mean that AR on older models will stop working. Still, the hardware isn't cheap, and adding it just to say "look at this!" isn't how things are done in the competitive world of mobile hardware. Apple must have a plan.

Google may be able to build something bigger and better with LiDAR, but I think it will look to invent new tech instead of repurposing older ideas.

If you were to ask me what the future holds for a tablet with a LiDAR sensor package, I would instantly think about tying it to location data. That's what LiDAR was originally designed to do and how NOAA uses it today.

AR is fun, but it's also useful. Google used Tango to build an indoor mapping system that gave audible cues to people with low vision so they knew where to step safely. With a precise location system, that map only needs to be "drawn" once; after that, real-time checks need only look for changes since the original, and those changes can then be applied to the "master" map.
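
The "draw once, then look for changes" idea amounts to diffing a fresh scan against a stored map. The Python sketch below is purely hypothetical: it assumes the map is a grid of cells keyed by (row, column), each holding one depth reading, and treats differences under 5 cm as sensor noise. It is not how Tango or any Google service actually stored maps.

```python
from typing import Dict, Tuple

# Hypothetical map format: each (row, col) cell of a floor grid stores the
# depth in meters that the sensor measured for it.
DepthMap = Dict[Tuple[int, int], float]


def find_changes(master: DepthMap, scan: DepthMap, tolerance_m: float = 0.05) -> DepthMap:
    """Return only the cells in a fresh scan that differ from the master map.

    Cells missing from the master map count as changes; differences within
    tolerance_m are treated as sensor noise and ignored.
    """
    changes: DepthMap = {}
    for cell, depth in scan.items():
        old = master.get(cell)
        if old is None or abs(old - depth) > tolerance_m:
            changes[cell] = depth
    return changes


def apply_changes(master: DepthMap, changes: DepthMap) -> DepthMap:
    """Write the changed cells into a copy of the master map."""
    updated = dict(master)
    updated.update(changes)
    return updated


if __name__ == "__main__":
    master = {(0, 0): 2.00, (0, 1): 3.00}
    # Something now sits in cell (0, 1), and cell (1, 1) is seen for the first time.
    scan = {(0, 0): 2.01, (0, 1): 1.50, (1, 1): 4.00}
    changes = find_changes(master, scan)
    print(changes)
    master = apply_changes(master, changes)
    print(master)
```

The payoff is that only the changed cells need to be sent or stored, rather than a full re-scan every time.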

Most people won't be using a tablet to navigate indoors, but the idea that the world can be built in the cloud using a LiDAR sensor could lead to other applications. Imagine seeing someone capture a Pokémon on your screen while they're playing Pokémon Go on theirs. Or an app that could act as a virtual tour guide on the display because it knows where you are and what to draw.

The Floor is Lava is looking like a cool game, but it could have been a cool game without LiDAR. And the iPhone cameras were really good before the addition of LiDAR, but adding it will make for better low-light photos. Apple could have done the same thing using machine learning just like Google or Huawei or Samsung did.

Google has always been about pushing Android forwards through software.

Google isn't likely to try and incorporate LiDAR into Android, and phone makers really can't do it without Google's help this time. Adding it could make Android better in some ways and open up some new tech for making our phones "aware" of where they are and what we're doing, but I just don't see a real need for it.

Google has always been about pushing Android phones forward through software, and so far it's done a great job. I'm pretty sure it will stay that way, and any new sensors will be proofs of concept, much like the Pixel 4's Face Unlock was. It doesn't matter that Google might be willing to take the risk and could do it better; its focus is on building new ideas instead of reusing old ones.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.