Google urges its employees to exercise caution with AI chatbots, including Bard

Sometimes your own creations are scary.

Get the latest news from Android Central, your trusted companion in the world of Android


What you need to know

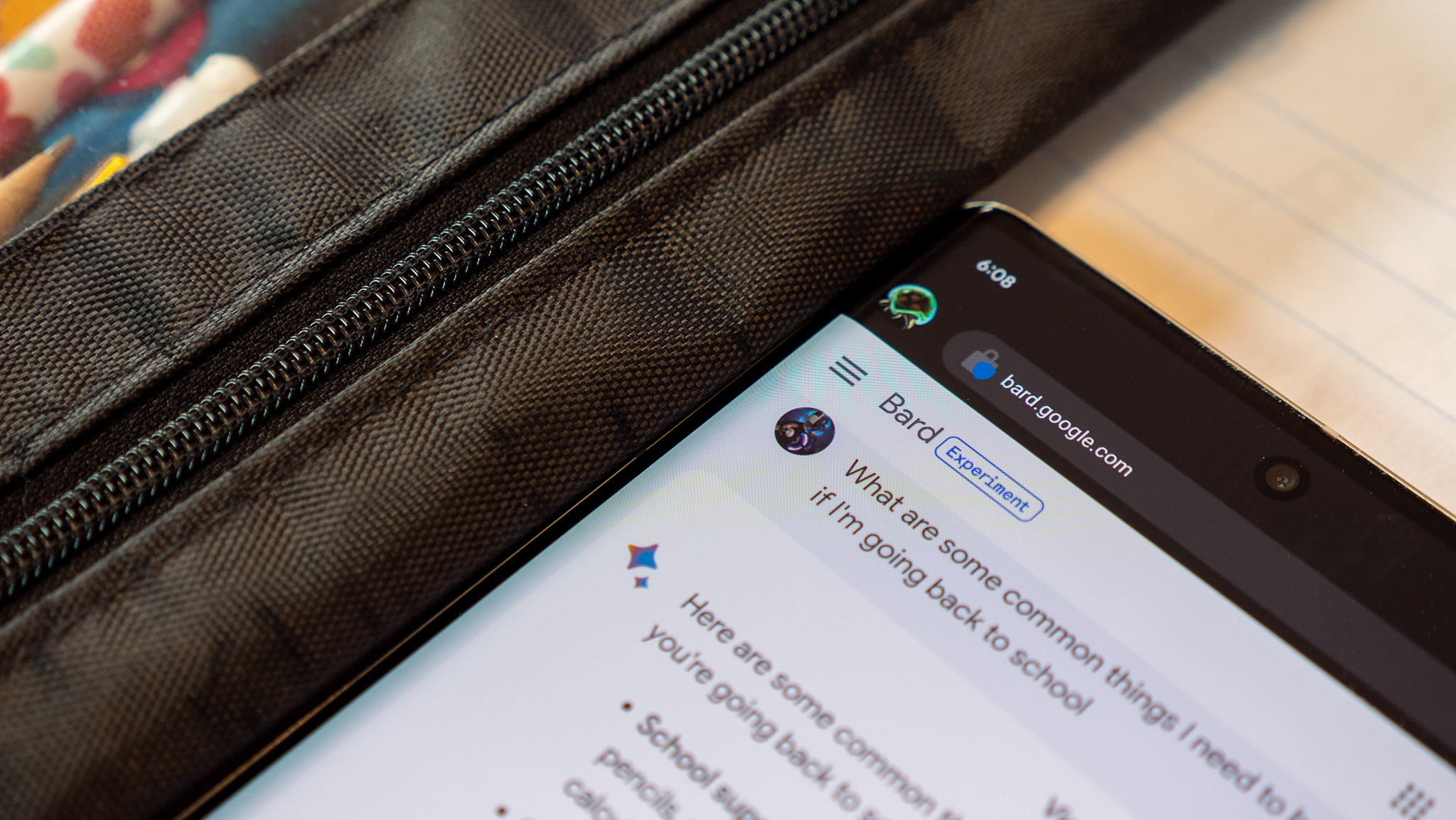

- Google is beginning to warn its employees against submitting confidential company information and code into its own AI software, Bard.

- The company is increasingly concerned about a potential data leak, as information entered into the bot could become public knowledge.

- Similar measures to place more focus on privacy and security with AI software have cropped up in the EU and at Samsung.

Despite Google rushing to bake its own AI chatbot into its search engine, the company is now trying to steer employees away from using it. According to Reuters, the Mountain View-based company has been advising employees to refrain from entering confidential material into the AI chatbot. Alphabet has also extended this warning to computer code.

Google Bard can assist programmers with their coding, but the company told Reuters that it can at times deliver "undesired" suggestions. Additionally, because of how chatbots like Bard work, there is potential for a data leak. Not only may a human reviewer read your chat, but another AI could absorb that information as part of its learning process and then reproduce it in response to another query, Reuters explained. In turn, this could allow highly sensitive data to become public.

The company does have a statement on its Bard FAQ page that tells users not to "include information that can be used to identify you or others in your Bard conversations." However, the chatbot itself cannot recognize what confidential company information or code looks like.

Google also told Reuters about its current holdup in the EU, where it is addressing questions from Ireland's Data Protection Commission. The regulator is concerned about Bard's impact on user privacy, which echoes other concerns raised by the European Commission.

The European Commission and Google have been in talks since May about drafting new guidelines for AI software, dubbed the "AI Pact." This voluntary pact would affect European and non-European countries as the Commission looks to get a handle on AI software and its potential privacy risks.

This includes the United States, which is working with the EU to create a new "minimal standard" for AI as concerns continue to rise about the potential dangers to the security of people using chatbots.

Additionally, Google steering its employees away from submitting confidential company information and sensitive data into its AI chatbot echoes what Samsung did with its workers in May. The Korean OEM banned its employees from using AI chatbots such as Bard and ChatGPT after a mishap involving a Samsung engineer, who submitted confidential company code into an AI chatbot. That incident prompted the swift and sudden cutoff of the software.


During Google I/O 2023, the company stated it will focus on being "responsible" with its AI software. The company told Reuters that it aims to be transparent about the limitations of the technology.

Nickolas is always excited about tech and getting his hands on it. Writing for him can vary from delivering the latest tech story to scribbling in his journal. When Nickolas isn't hitting a story, he's often grinding away at a game or chilling with a book in his hand.