Google is still being slow and careful with AI and that's still a good thing

Who does it best is more important than who does it first.

Did you know that there are 100,000 people living in Antarctica full-time? Of course you didn't, because there aren't. But if enough people typed that on the internet and claimed it as fact, eventually all the AI chatbots would tell you there are 100,000 people with Antarctic residency.

This is why AI in its current state is mostly broken without human intervention.

I like to remind everyone — including myself — that AI is neither artificial nor intelligent. It returns very predictable results based on the input it is given in relation to the data it was trained with.

That weird sentence means that if you feed a language model line after line of the boring and unfunny things Jerry says, then ask it anything, it will repeat one of those boring and unfunny things I've said. Hopefully, one that works as a reply to whatever you typed into the prompt.
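
Here's a toy sketch of that idea in Python. It is not how any real chatbot works; the `train` and `answer` functions and the tiny made-up corpus are assumptions for illustration only. All it does is count which answer shows up most often for a question and repeat it, true or not.

```python
from collections import Counter


def train(corpus):
    """Count how often each claimed answer appears for each question."""
    counts = {}
    for question, claimed_answer in corpus:
        counts.setdefault(question, Counter())[claimed_answer] += 1
    return counts


def answer(model, question):
    """Return the most frequent answer seen in training, accurate or not."""
    if question not in model:
        return None  # the toy model knows nothing it was not fed
    return model[question].most_common(1)[0][0]


# Hypothetical training data: three pages get it right, ten repeat the myth.
corpus = (
    [("population of antarctica", "no permanent residents")] * 3
    + [("population of antarctica", "100,000")] * 10
)

model = train(corpus)
print(answer(model, "population of antarctica"))  # prints: 100,000
```

Flip the counts so the accurate pages outnumber the myth and the answer flips with them. The model has no idea which one is true; it only knows which one it saw more often.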

In a nutshell, this is why Google wants to go slow when it comes to direct consumer-facing chat-style AI. It has a reputation to protect.

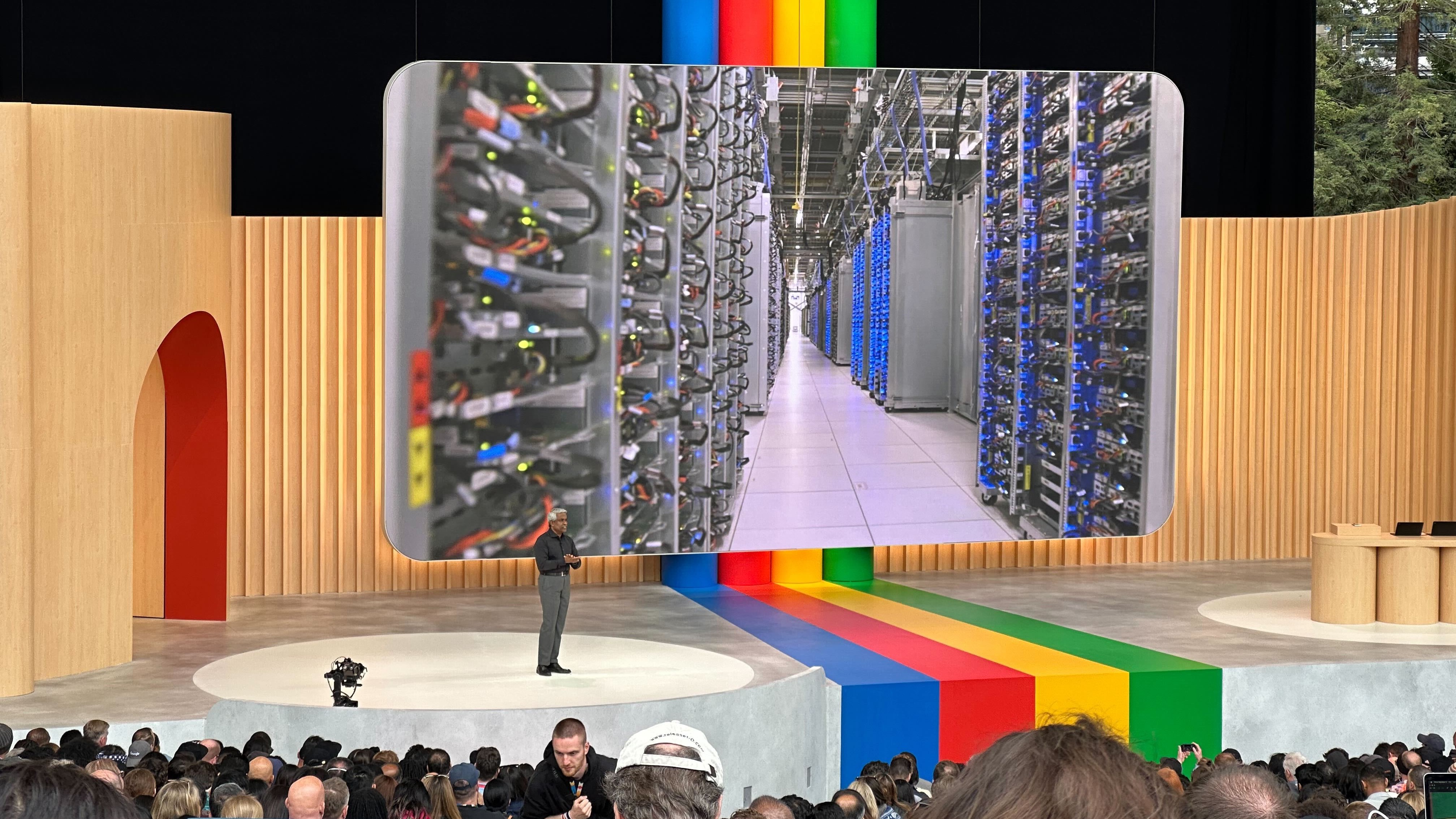

The internet has told me that everything AI-related we saw at Google I/O 2023 was Google being in some sort of panic mode and a direct response to some other company like Microsoft or OpenAI.

I think that's hogwash. The slow release of features is exactly what Google has told us about how it plans to handle consumer AI time and time again. It's cool to think that Google rushed to invent everything we saw in just a month in response to Bingbot's latest feature release, but it didn't. That idea is fun to imagine and foolish to believe.

This is Google's actual approach in its own words:

"We believe our approach to AI must be both bold and responsible. To us that means developing AI in a way that maximizes the positive benefits to society while addressing the challenges, guided by our AI Principles. While there is natural tension between the two, we believe it’s possible — and in fact critical — to embrace that tension productively. The only way to be truly bold in the long-term is to be responsible from the start."

Maximizing positives and minimizing harm is the key. Yes, these bots come with a blanket disclaimer saying that so-and-so chatbot might say horrible or inaccurate things, but that is not enough. Any company involved in the development (and that includes throwing cash at a company doing the actual work) needs to be held responsible when things go south. Not if, when.

This is why I like the slow and careful approach that tries to be ethical and not the "let's throw features!!!!" approach we see from some other companies like Microsoft. I'm positive Microsoft is concerned with ethics, sensitivity, and accuracy when it comes to AI, but so far it seems like only Google is putting those concerns in front of every announcement.

This is even more important to me since I've spent some time researching a few things around consumer-facing AI. Accuracy is important, of course, and so is privacy, but I learned the hard way that filtering is probably the most important part.

I was not ready for what I did. Most of us will never be ready for it.

I dug around and found some of the training material used for a popular AI bot telling it what is too toxic to use inside its data model. This is the stuff it should pretend does not exist.

The data consisted of both text and heavily edited imagery, and both actually affected me. Think of the very worst thing you can imagine — yes, that thing. Some of this is even worse than that. This is dark web content brought to the regular web in places like Reddit and other sites where users provide the content. Sometimes, that content is bad and stays up long enough for it to be seen.

Seeing this taught me three things:

1. The people who have to monitor social media for this sort of garbage really do need the mental support companies offer. And a giant pay raise.

2. The internet is a great tool that the most terrible people on the planet use, too. I thought I was thick-skinned enough to be prepared for seeing it, but I was not and literally had to leave work a few hours early and spend some extra time with the people who love me.

3. Google and every other company that provides consumer-grade AI cannot allow data like this to be used as training material, but they will never be able to catch and filter out all of it.

Numbers one and two are more important to me, but number three is important to Google. The 7GB of raw text labeled "offensive web content," just a fraction of the content I accessed, had the word "Obama" used over 330,000 times in an offensive way. The number of times it's used in a despicable way across the entire internet is probably double or triple that number.

This is what consumer AI language models are trained with. No human is feeding ticker tapes of handwritten words into a computer. Instead, the "computer" looks at web pages and their content. This web page will eventually be analyzed and used as input. So will chan meme and image pages. So will blogs about the earth being flat or the moon landing being faked.

If it takes Google moving slowly to weed out as much of the bad from consumer AI as it can, I'm all for it. You should be, too, because all of this is evolving its way into the services you use every day on the phone you're planning to buy next.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.