My top 5 Google I/O demos, from Gemini robots to virtual dressing rooms

Curious to know what happened at Google I/O 2025 beyond the live-streamed keynote? Here's the recap.

The headlining event of Google I/O 2025, the live keynote, is officially in the rearview. However, if you've followed I/O before, you may know there's a lot more happening behind the scenes than what you can find live-streamed on YouTube. There are demos, hands-on experiences, Q&A sessions, and more happening at Shoreline Amphitheatre near Google's Mountain View headquarters.

We've recapped the Google I/O 2025 keynote, and given you hands-on scoops about Android XR glasses, Android Auto, and Project Moohan. For those interested in the nitty-gritty demos and experiences happening at I/O, here are five of my favorite things I saw at the annual developer conference today.

Controlling robots with your voice using Gemini

Google briefly mentioned during its main keynote that its long-term goal for Gemini is to make it a "universal AI assistant," and robotics has to be a part of that. The company says that its Gemini Robotics division "teaches robots to grasp, follow instructions and adjust on the fly." I got to try out Gemini Robotics myself, using voice commands to direct two robot arms and move objects hands-free.

The demo uses a Gemini model, a camera, and two robot arms to move things around. Its multimodal capabilities, like a live camera feed and microphone input, make it easy to control the robots with simple instructions. At one point, I asked the robot to move the yellow brick, and the arm did exactly that.

It felt responsive, although there were some limitations. When I told Gemini to move the yellow piece back to where it was before, I quickly learned that this version of the AI model doesn't remember earlier commands. But considering Gemini Robotics is still an experiment, that isn't exactly surprising.

I wish Google would've focused a bit more on these applications during the keynote. Gemini Robotics is exactly the kind of AI we should want. There's no need for AI to replace human creativity, like art or music, but there's an abundance of potential for Gemini Robotics to eliminate the mundane work in our lives.

Trying on clothes using Shop with AI Mode

As someone who refuses to try on clothes in dressing rooms, and who hates returning online orders that don't fit as expected just as much, I was skeptical but excited about Google's announcement of Shop with AI Mode. It uses a custom image generation model that understands "how different materials fold and stretch according to different bodies."


In other words, it should give you an accurate representation of how clothes will look on you, rather than just superimposing an outfit with augmented reality (AR). I'm a glasses-wearer who frequently tries on glasses virtually using AR, hoping it'll give me an idea of how they'll look on my face, only to be disappointed by the result.

I'm happy to report that Shop with AI Mode's virtual try-on experience is nothing like that. It takes a full-length photo of you and quickly uses generative AI to add an outfit in a way that looks shockingly realistic. In the gallery below, you can see each part of the process: the finished result, the marketing photo for the outfit, and the original picture of me used for the edit.

Is it going to be perfect? Probably not. Even so, this virtual try-on tool is easily the best I've ever used. I'd feel much more confident buying something online after trying it on this way, especially if it's an outfit I wouldn't typically wear.

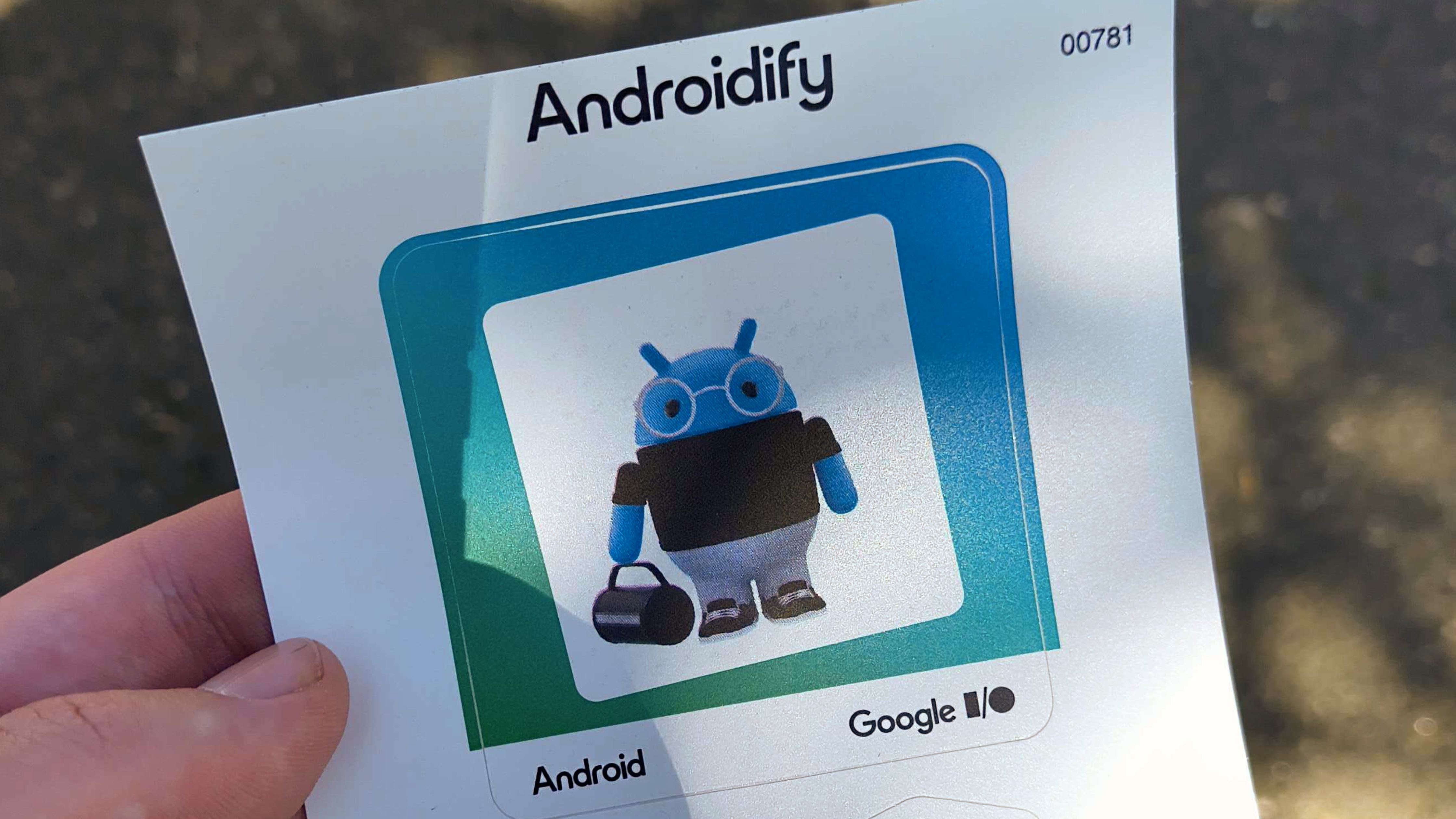

Creating an Android Bot of myself using Google AI

A lot of demos at Google I/O are really fun, simple activities with a lot of technical stuff going on in the background. There's no better example of that than Androidify, a tool that turns a photo of yourself into an Android Bot. To get the result you see below, a complex Android app flow used AI and image processing. It's a glimpse of how an app developer might use Google AI in their own apps to offer new features and tools.

Androidify starts with an image of a person, ideally a full-length photo. It then analyzes the image and generates a text description of it using the Firebase AI Logic SDK. That description is sent to a custom Imagen model optimized specifically for creating Android Bots, which generates the final image.
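To make that three-step flow easier to picture, here's a minimal Python sketch of the pipeline's shape: photo in, text description, then description to image. Every function here is a hypothetical stand-in. It doesn't call the real Firebase AI Logic SDK or Imagen; those steps are stubbed so the structure is visible and runnable on its own.

```python
from dataclasses import dataclass


@dataclass
class Photo:
    # Hypothetical stand-in for the user's photo; a real app would hold image bytes.
    subject: str
    full_length: bool


def describe_image(photo: Photo) -> str:
    # Hypothetical stub for step 2. In Androidify, this description comes from
    # a model called through the Firebase AI Logic SDK, not from this code.
    framing = "full-length" if photo.full_length else "partial"
    return f"{framing} photo of {photo.subject}"


def build_bot_prompt(description: str) -> str:
    # Hypothetical prompt template that wraps the description for the image model.
    return f"An Android Bot styled after: {description}"


def generate_bot_image(prompt: str) -> bytes:
    # Hypothetical stub for step 3 (the custom Imagen model). Here it just
    # returns the prompt as bytes so the pipeline can run end to end.
    return prompt.encode("utf-8")


def androidify(photo: Photo) -> bytes:
    # Chain the steps: analyze the photo, build a prompt, generate the bot.
    description = describe_image(photo)
    prompt = build_bot_prompt(description)
    return generate_bot_image(prompt)


if __name__ == "__main__":
    print(androidify(Photo("a person in a red jacket", full_length=True)))
```

The design point is that the image model never sees the original photo in this sketch, only a text description of it, which mirrors the two-model hand-off described above.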

That's a bunch of AI processing to get from a real-life photo to a custom Android Bot. It's a neat preview of how developers can use tools like Imagen to offer new features, and the good news is that Androidify is open-source. You can learn more about all that goes into it here.

Making music with Lyria 2

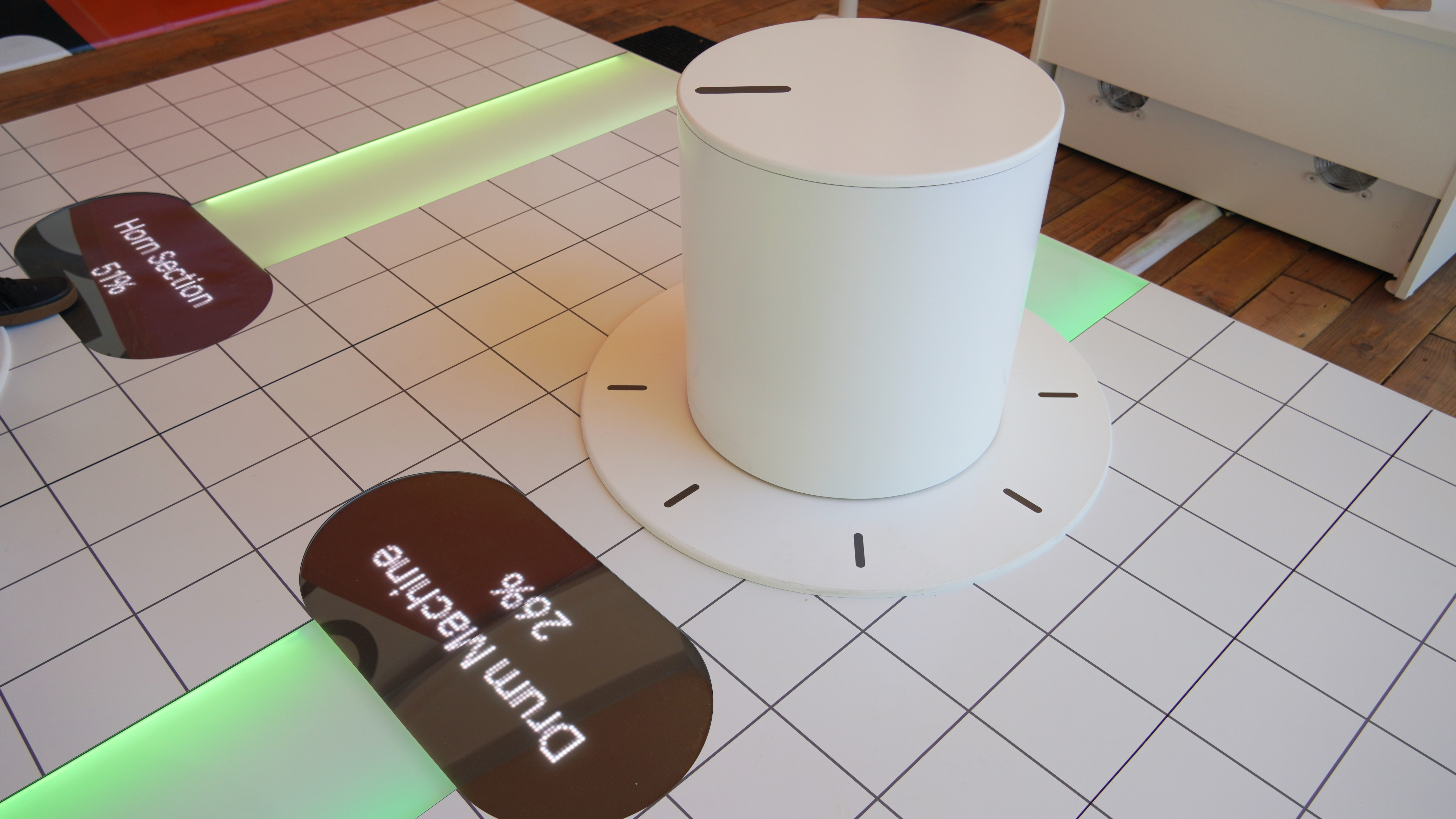

Music isn't my favorite medium for AI, but the Lyria 2 demo station at Google I/O was pretty neat. For those unfamiliar, Lyria Realtime "leverages generative AI to produce a continuous stream of music controlled by user actions." The idea is that developers can use an API to incorporate Lyria into their apps and add soundtracks to them.

At the demo station, I tried an oversized physical representation of the Lyria API in action: three music control knobs, each as big as a chair. You could sit down and spin a dial to adjust how much each genre influenced the sound being created. As I changed the genres and their prominence, the audio changed in real time.
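Google didn't explain how the knob positions translate into each genre's share of the music, so the following is only a minimal Python sketch under an assumption: each knob reports a position from 0.0 to 1.0, and the positions are normalized so the genre shares add up to 100 percent. The genre names and the `knob_percentages` function are hypothetical.

```python
def knob_percentages(knobs: dict[str, float]) -> dict[str, float]:
    # Assumption: each knob reports a position from 0.0 (off) to 1.0 (maxed out).
    for genre, position in knobs.items():
        if not 0.0 <= position <= 1.0:
            raise ValueError(f"{genre} knob out of range: {position}")
    total = sum(knobs.values())
    if total == 0:
        # All knobs turned off: no genre contributes.
        return {genre: 0.0 for genre in knobs}
    # Normalize so the genre shares sum to 100 percent.
    return {genre: 100.0 * position / total for genre, position in knobs.items()}


if __name__ == "__main__":
    # Hypothetical genres; turning one knob up shrinks the others' shares.
    print(knob_percentages({"jazz": 0.5, "techno": 0.25, "orchestral": 0.25}))
```

In a real-time setup, each knob movement would simply recompute these shares and pass them to the music generator, which is why normalization is a convenient choice: no single dial has to be set to an exact value for the mix to make sense.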

The cool part about Lyria Realtime is that, as the name suggests, there's no noticeable delay. Users can change the music generation instantly, giving people who aren't musicians more control over sound than ever before.

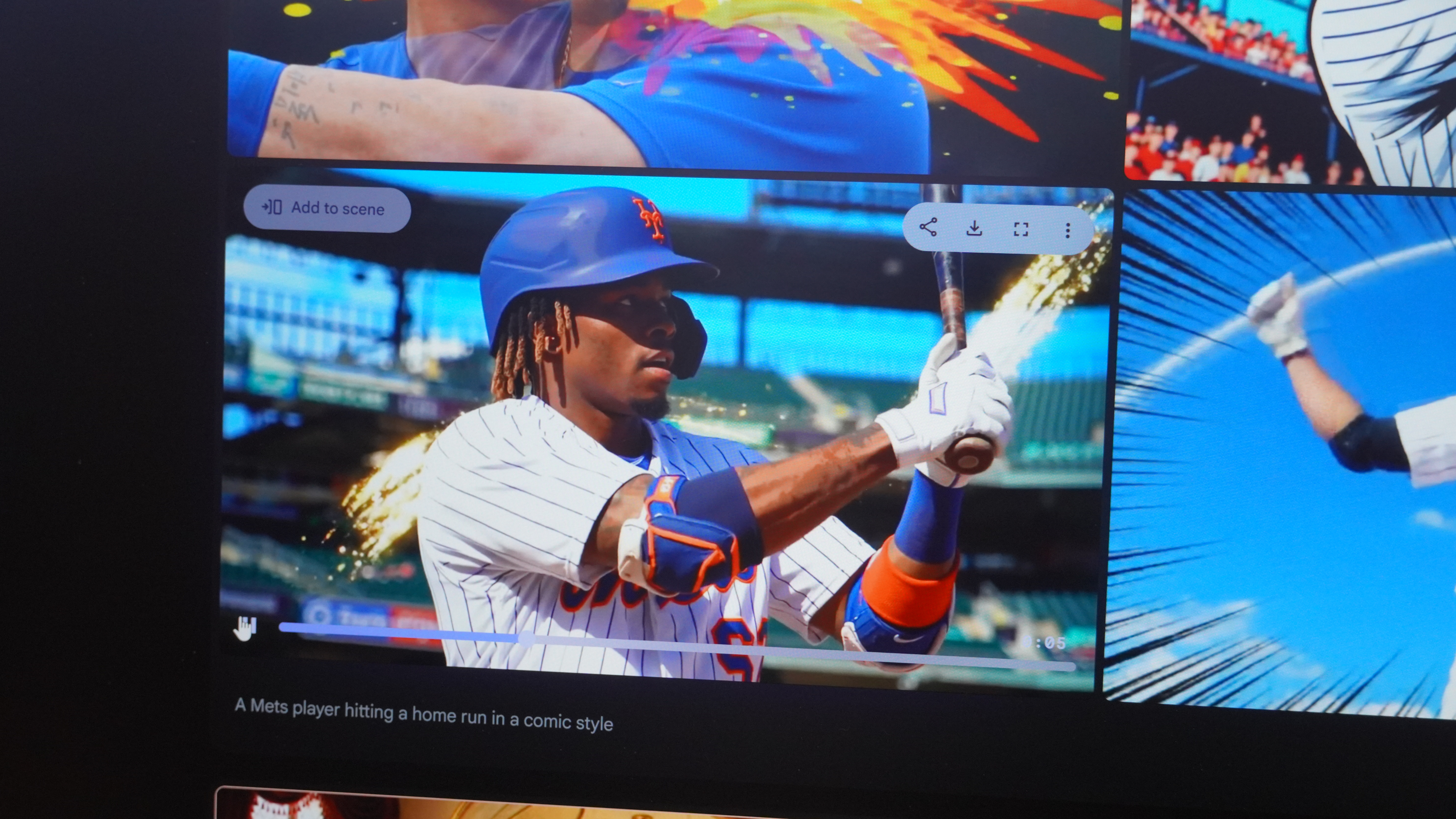

Generating custom videos with Flow and Veo

Finally, I used Flow, an AI filmmaking tool, to create custom video clips using Veo video-generation models. Unlike basic video generators, Flow is designed to help creators keep themes and styles consistent and seamless across clips. After creating a clip, you can reuse the video's characteristics as "ingredients" and use them as prompting material to keep generating.

I gave Veo 2 (I couldn't try Veo 3 because it takes longer to generate) a challenging prompt: "generate a video of a Mets player hitting a home run in comic style." In some ways, it missed the mark — one of my videos had a player with two heads and none of them actually showed a home run being hit. But setting Veo's struggles aside, it was clear that Flow is a useful tool.

The ability to edit, splice, and add to AI-generated videos is nothing short of a breakthrough for Google. The very nature of AI generation is that every creation is unique, and that's a bad thing if you're a storyteller using multiple clips to create a cohesive work. With Flow, Google seems to have solved that problem.

If you found the AI talk during the main keynote boring, I don't blame you. The word Gemini was spoken 95 times, and AI was uttered slightly less often, on 92 occasions. The cool thing about AI isn't what it can do, but how it can change the way you complete tasks and interact with your devices. So far, the demo experiences at Google I/O 2025 have done a solid job of showing attendees the how.

Brady is a tech journalist for Android Central, with a focus on news, phones, tablets, audio, wearables, and software. He has spent the last three years reporting and commenting on all things related to consumer technology for various publications. Brady graduated from St. John's University with a bachelor's degree in journalism. His work has been published in XDA, Android Police, Tech Advisor, iMore, Screen Rant, and Android Headlines. When he isn't experimenting with the latest tech, you can find Brady running or watching Big East basketball.
