Google I/O 2025: The biggest announcements from AI to Android XR

Gemini-packed upgrades, AI Mode magic, and the first look at Google's smart glasses.

The Google I/O 2025 keynote is behind us. Our crew was on the ground experiencing the event firsthand as the tech giant announced a multitude of Gemini-related features, Android 16, enhancements to Google's AI Mode, and, more importantly, all things Android XR. As you may have already heard, "AI" got a solid 92 mentions, but Gemini, the showstopper, snagged 95! To be fair, the two defined the theme of this year's developer conference. So, let's jump right into it, shall we?

Gemini 2.5: the 'most intelligent' model yet

What's I/O without Gemini these days? Personal, Proactive, and Powerful are the three pillars of Gemini 2.5 Pro.

Google detailed how its Gemini 2.5 models, including 2.5 Pro, will improve in the near future through a few updates. To begin, Gemini is getting Google's 2.5 Flash model, which the tech giant says is quickly becoming its "most powerful" version, with improved reasoning and multimodality. Google also says 2.5 Flash is now more efficient and better at code and long context.

According to the company, Gemini 2.5 Flash will be available alongside Gemini 2.5 Pro sometime in June. Additionally, Deep Think, Google's new reasoning mode, which is said to nudge Google's AI into "considering multiple hypotheses" before delivering its response, is currently being tested.

Tulsee Doshi, senior director & product lead, Gemini Models at Google DeepMind, demoed the new Gemini model's text-to-speech capabilities. She added that it will work in 24 languages and can switch between languages (and back again) "all with the same voice."

Doshi also showed us how Gemini Diffusion generates code five times faster than Google's lightest 2.5 model, analyzing the prompt in the blink of an eye (quite literally). This model will roll out publicly in June.

AI Mode and Gemini Live in Search

AI Mode and Google Search seem to be leveling up. Google announced today at I/O that it will integrate a custom version of Gemini 2.5 into Search for both AI Mode and AI Overviews.


This means users can start asking Gemini more complex queries tailored to their needs. Google also states that AI Mode will get first access to the new AI-powered features, which begin rolling out this week.

Google Search gets Project Astra:

Google announced today that it's pushing the limits of live, real-time search and bringing Project Astra's multimodal capabilities to Google Search. You can talk back and forth with Gemini about what you're looking at through your device's camera.

For example, if you're feeling stumped on a project and need some help, simply tap the "Live" icon in AI Mode or in Lens, point your camera, and ask your question. "Just like that, Search becomes a learning partner that can see what you see — explaining tricky concepts and offering suggestions along the way, as well as links to different resources that you can explore — like websites, videos, forums, and more," Google added.

Gemini Live camera and screen sharing is coming to both Android and iOS starting today.

AI Mode gains more features

AI Mode is set to get a new Deep Search feature, which will give users a more thorough and thought-out response to their queries. This is particularly handy when users have to look up multiple websites while writing a research paper or simply want to gain knowledge about a certain topic.

Google stated in its press release that Deep Search collates data from "hundreds of websites" and can connect and draw conclusions from information spread across different, unrelated sources or contexts to give users an "expert-level fully-cited report."

AI Mode x Project Mariner:

AI Mode is gaining the agentic capabilities of Project Mariner, an AI agent initially built on Gemini 2.0 that can "understand" and process every element of a website, including images, text, code, and even pixels.

Google says these capabilities will help save users time. For instance, you can ask AI Mode to find you two tickets for a soccer game on Saturday in your preferred seats, and it will present you with multiple websites that match your exact needs. Google added that it will be working with StubHub, Ticketmaster, and Resy to create a "seamless and helpful" experience for users.

You can also ask AI Mode to look up "things to do in Toronto this weekend with friends who are Harry Potter fans and big foodies."

AI Mode may suggest Harry Potter-themed cafes or parties you can go to, along with hotel recommendations, tickets, and more. This feature within AI Mode can be adjusted in Search's personalization settings.

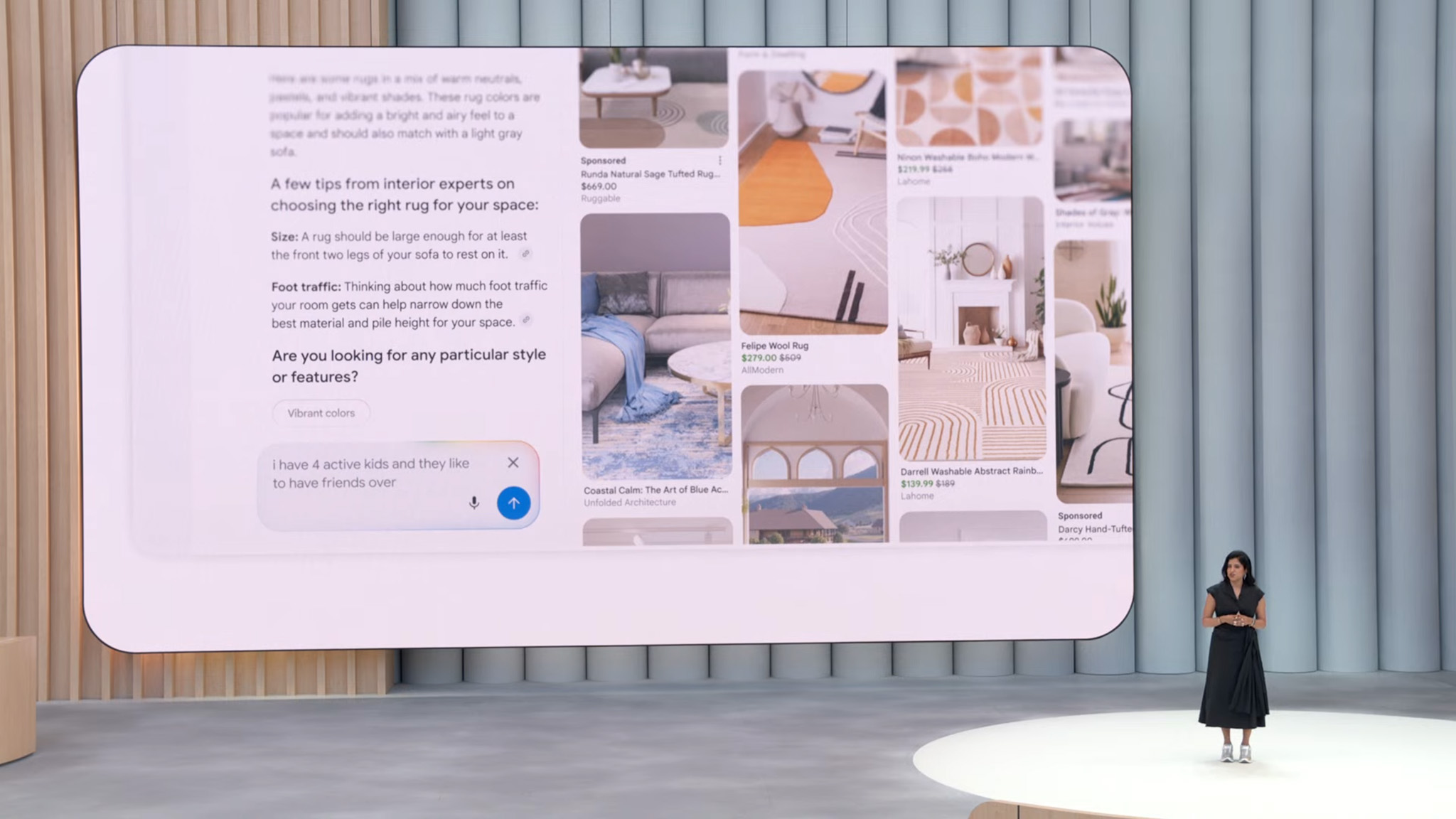

Shopping with AI Mode

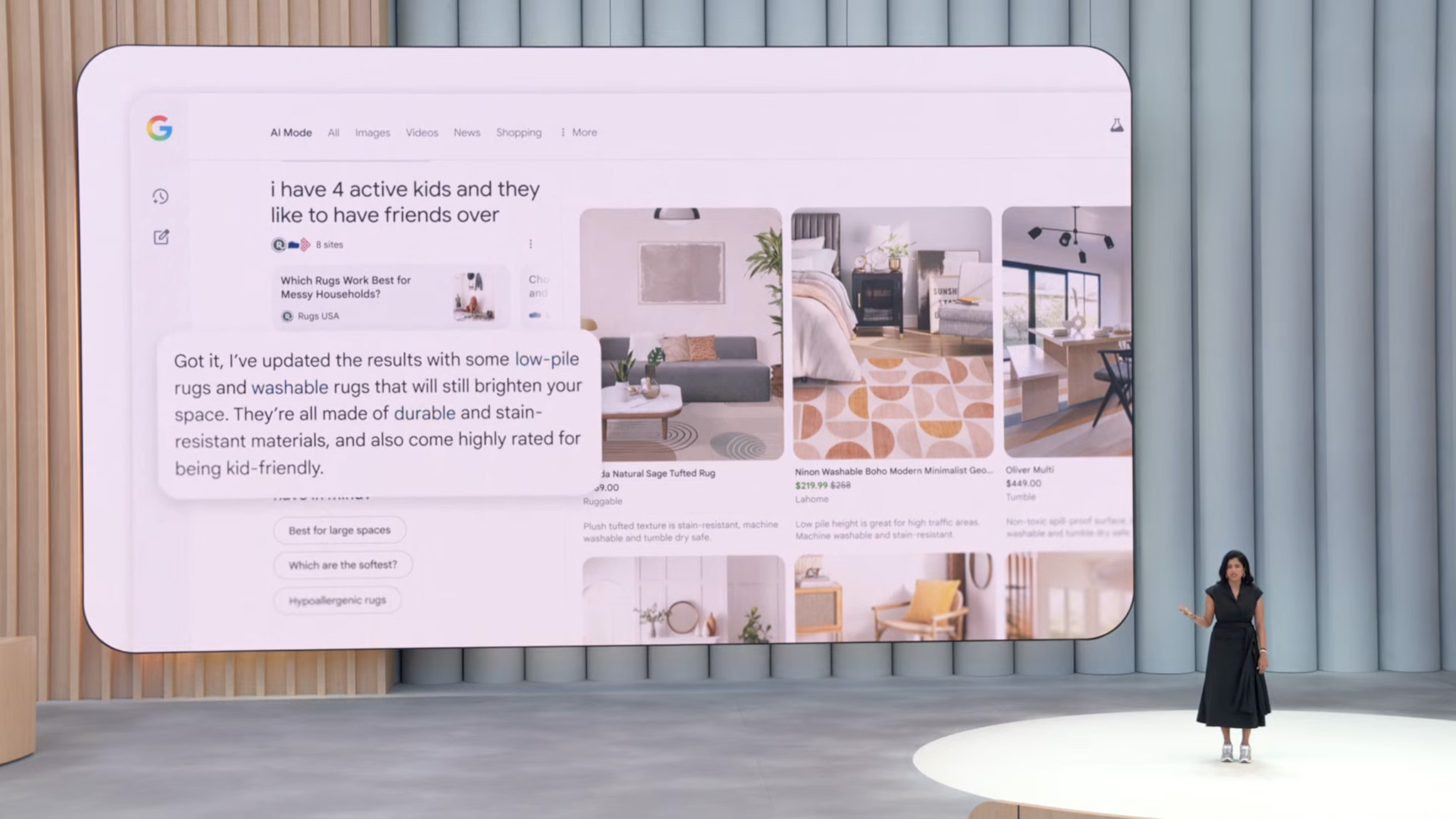

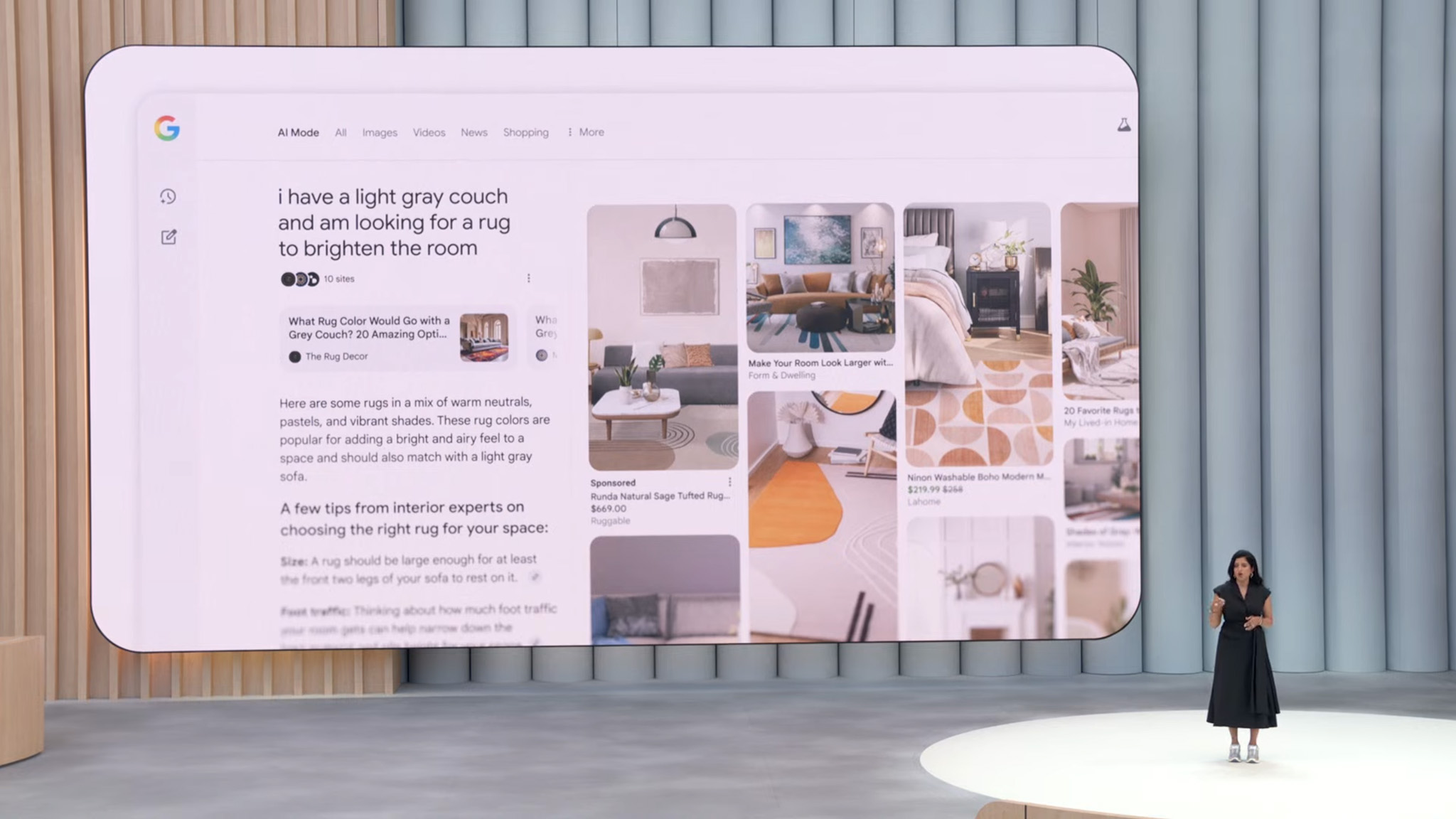

Lastly, Google is bringing a more seamless online shopping experience with AI Mode.

"It brings Gemini model capabilities with our Shopping Graph to help you browse for inspiration, think through considerations, and narrow down products," Google explained.

For instance, if you tell AI Mode you're looking for a cute travel bag, it understands that you're looking for visual inspiration and will show you a browsable panel of photos and product listings personalized to your tastes.

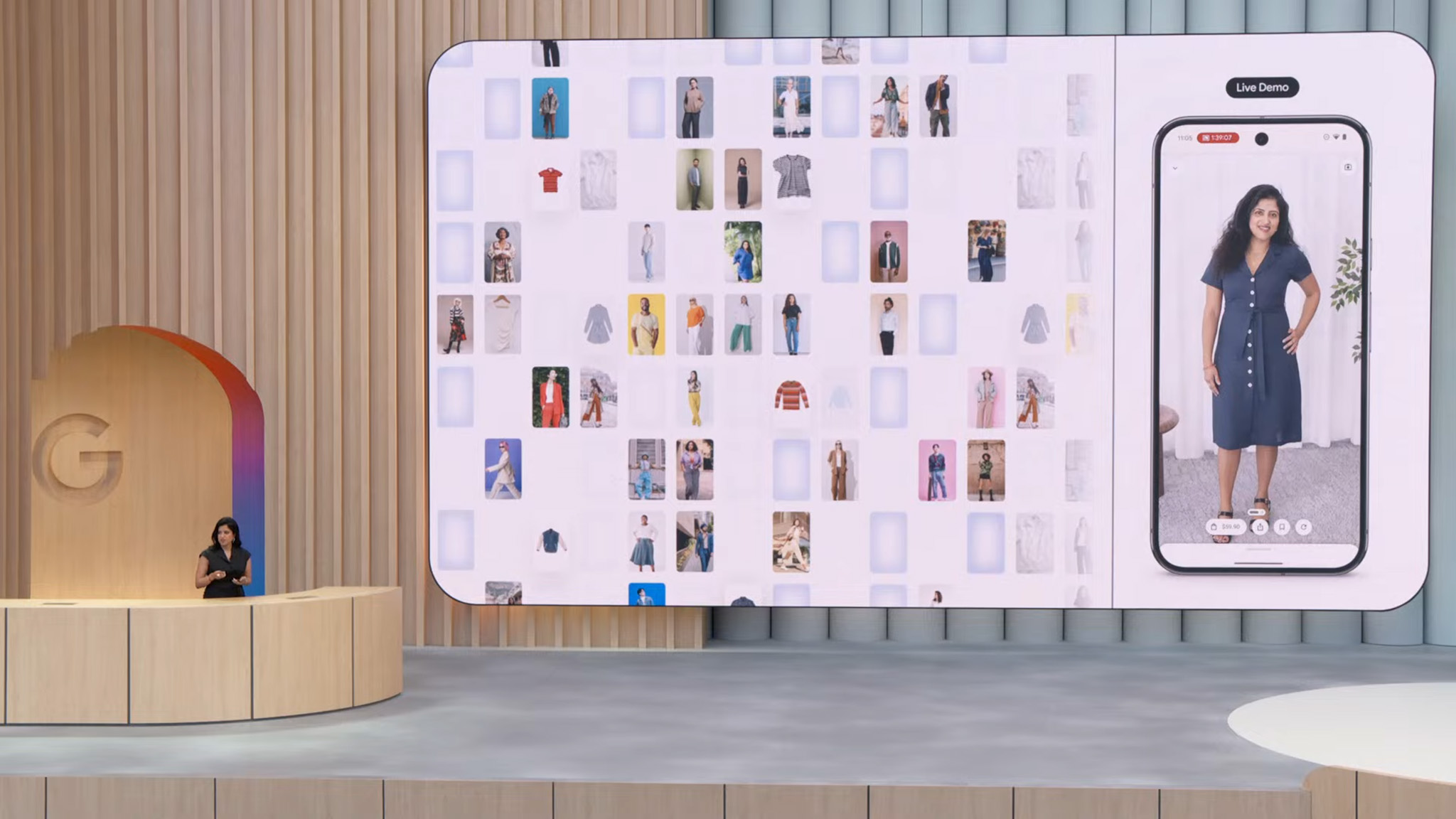

Users can even try on outfits virtually with the "try on" option. Just upload a single clear picture of yourself, and AI Mode will show you images of what you'd look like in the attire you're shopping for. Once you've picked the perfect outfit, AI Mode can also help you buy it at your desired price.

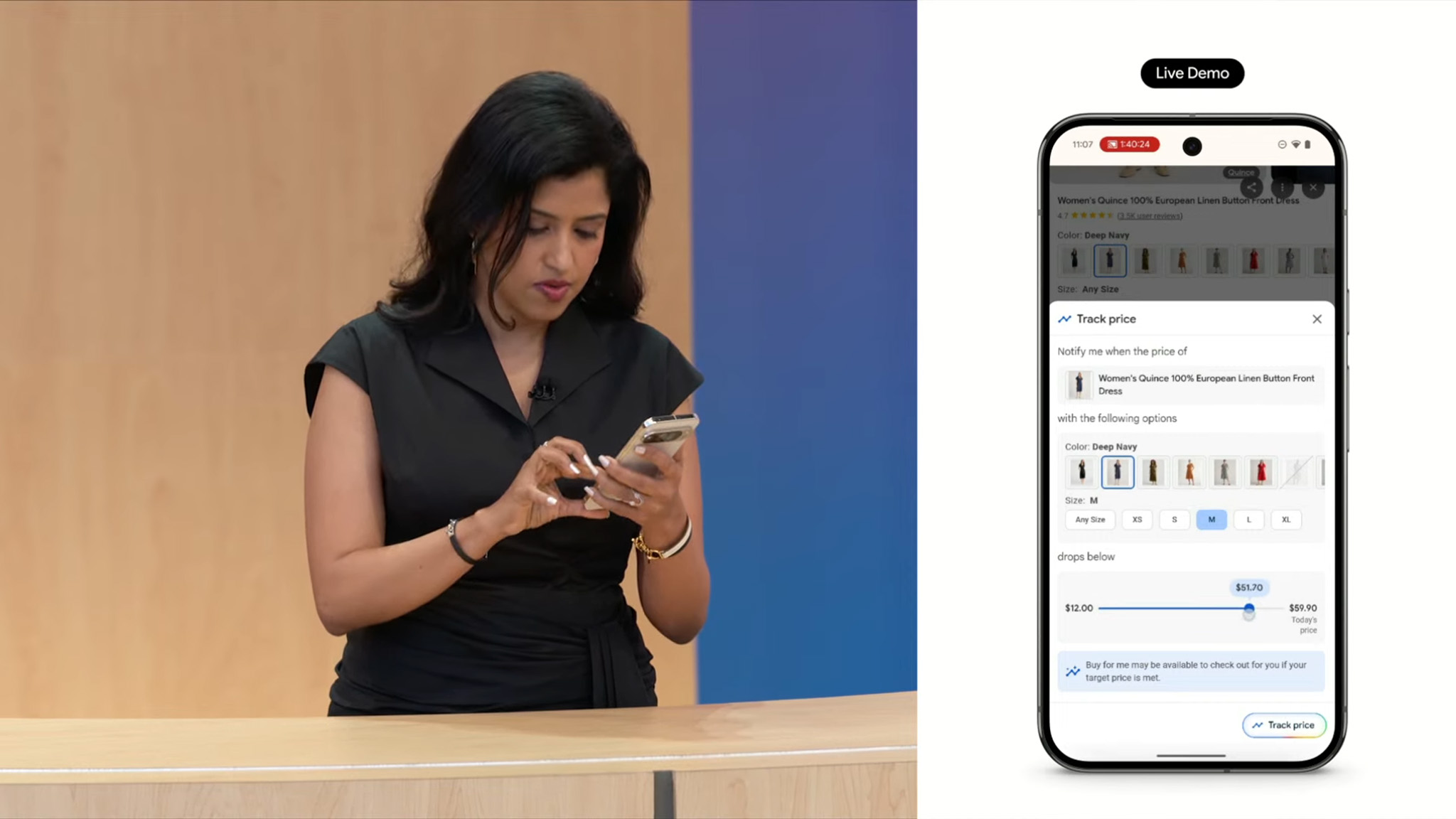

Just tap "track price" on any product listing and set the right size, color (or whatever options you prefer), and the amount you want to spend. Keep an eye out for a price drop notification and, if you're ready to buy, just confirm the purchase details and tap "buy for me".

Behind the scenes, AI Mode will add the item to your cart on the merchant's site and securely complete the checkout on your behalf using Google Pay, all under your supervision.

The virtual "try on" experiment is rolling out in Search Labs for U.S. users starting today. AI Mode itself is also rolling out to everyone in the U.S. today, while the new features will gradually reach Labs users in the coming weeks.

Imagen 4 and Veo 3

Imagen 4 and Veo 3 are Google's next-generation image and video generation models, succeeding Imagen 3 and Veo 2.

Whether you're designing a professional presentation, whipping up social media graphics, or crafting event invitations, Imagen 4 is said to give you visuals that "pop with lifelike detail and better text and typography outputs."

Veo 3, meanwhile, lets users generate not just a video scene but also details like "the bustling city sounds, the subtle rustle of leaves or even character dialogue — all from simple text prompts." Everyone can try Imagen 4 today in the Gemini app, while Veo 3 is available today in the Gemini app only for Google AI Ultra subscribers in the U.S.

Flow

If you thought that was all Google could offer with image and video generation, you've got to see what its new tool, Flow, can do. It essentially does the job of a scriptwriter, a cinematographer, and an editor, and it's built to help storytellers turn big ideas into movie scenes without spending the money it takes to shoot one!

With Flow, you just type something like "A detective chases a thief through a rainy Tokyo alley," and Veo 3 brings it to life, complete with footsteps, rain sounds, and cinematic lighting.

You can tweak camera angles, zoom in, or switch perspectives like you're behind the lens. Building scenes becomes a breeze: you can add shots, change angles, and remix elements, and it all stays consistent, like the one you see below.

At present, Flow is rolling out to Google AI Pro and Ultra users in the U.S., with more countries getting access soon.

Google Beam

At I/O 2025, Google announced a new video conferencing platform called Google Beam.

It is built to transform regular 2D video calls into more realistic 3D experiences. Essentially, the platform combines AI with multiple cameras, as seen in Project Starline, to build a live, detailed 3D digital copy of the user.

That copy is then shown to the person on the other side on a special light field display. This screen sends different light rays to each eye, creating the illusion of depth and volume without the need for special glasses or headsets. As a result, Google Beam makes it seem like the 3D copy of the other person is actually "there" in the room with you.

"This is what allows you to make eye contact, read subtle cues, and build understanding and trust, as if you were face to face," Google added.

Along with Google Beam, the company is launching a real-time speech translation feature that lets people speak freely across language barriers.

For instance, if two people on a call speak different languages, such as French and English, each person can speak in their preferred language, and Google Beam will then translate the audio in real time.

Google is also bringing real-time speech translation to Google Meet starting today. Users on Meet can enable the live translation feature to have seamless conversations during meetings.

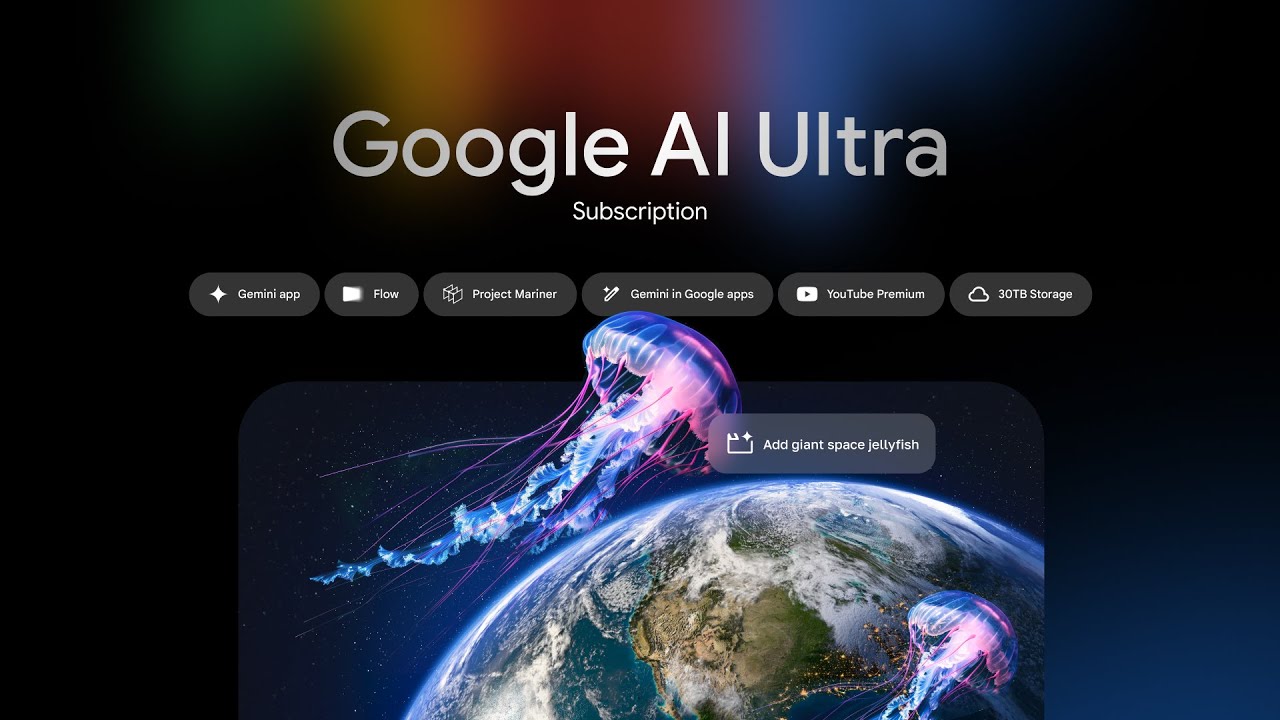

Google brings the VIP of AI subscriptions

Google announced a new all-inclusive pass to its latest top-end AI features, including Veo 3, Imagen 4, Flow, Gemini in Chrome, and more. The tech giant is calling the subscription Google AI Ultra, "a new AI subscription plan with the highest usage limits and access to our most capable models and premium features."

This plan is best suited for filmmakers, developers, creative professionals, or anyone who wants to make the most of Google AI with the highest level of access. Google AI Ultra is available today in the U.S. for $250 a month (with 50% off the first three months for first-time users) and is coming soon to more countries.

Meanwhile, Google is renaming its premium AI plan to Google AI Pro. Priced at $20 a month, this plan also gives users access to premium features. It includes the Gemini app with powerful models like 2.5 Pro and Veo 2 for video generation, alongside AI built directly into Google apps such as Gmail, Docs, and Vids for writing or proofreading.

Google says that subscribers also gain increased usage limits and premium features for NotebookLM, an AI research and writing assistant, and can generate and animate images with Whisk.

Drum roll, please... It's Android XR time!

You can be angry at me for saving the best for last, but that's what Google did at I/O this year, too.

Google started off the Android XR chat by laying out its plans for Gemini-powered smart glasses and AR glasses. It also touched on Project Moohan, Samsung's XR headset that is going to be powered by Gemini, stating that it will launch later this year.

Google didn't officially confirm a name for its Android XR glasses, and every demo it showed during I/O carried a "prototype" label. We've been waiting to see these glasses ever since we spotted them during the Project Astra demo at last year's I/O.

Nishtha Bhatia, product manager, building and bringing consumer experiences at Google, demoed Android XR-powered glasses.

While walking through the backstage area at I/O during the demo, she asked Gemini whether it remembered the name of the coffee shop on the mug. It pulled up the name and a description of the cafe, all while the demo was still livestreaming.

Gemini then gave her directions to the cafe, showing what turn-by-turn navigation looks like through the Android XR glasses. Google also live-demoed the Live Translate feature, which provides real-time translation while you speak with the person in front of you.

AC's Michael Hicks got to take both Samsung's XR headset and the Android XR glasses for a spin, and he came away very impressed, practically dubbing them "Gemini glasses"!

The glasses that Google showcased were likely designed by Samsung and Google, but eyewear brands like Gentle Monster and Warby Parker will get first dibs on designing Android XR glasses starting next year.

Nandika Ravi is an Editor for Android Central based in Toronto. After rocking the news scene as a Multimedia Reporter and Editor at Rogers Sports and Media, she now brings her expertise to the tech ecosystem. When not breaking tech news, you can catch her sipping coffee at cozy cafes, exploring new trails with her boxer dog, or leveling up in the gaming universe.
