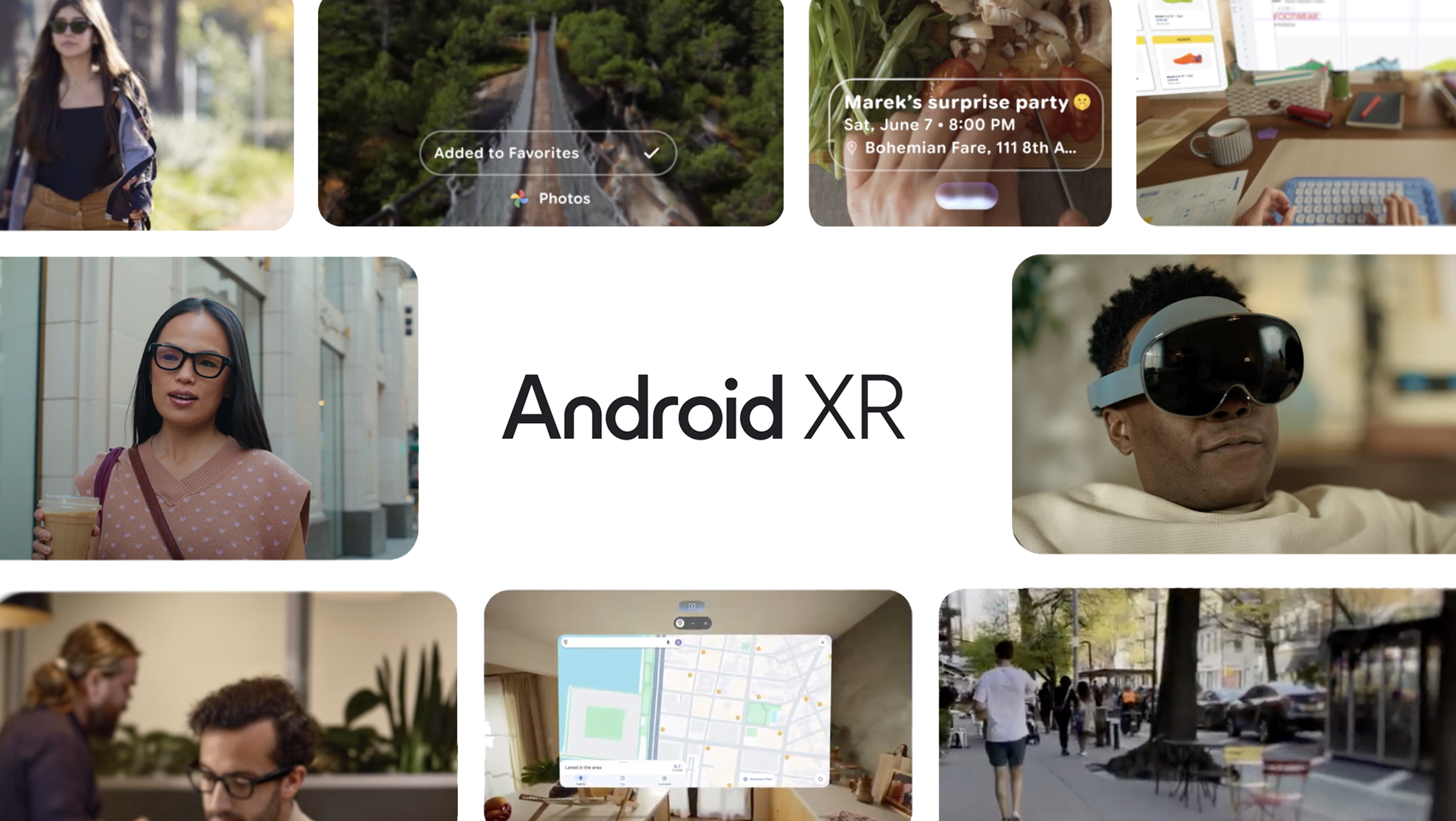

Google just laid out its game plan to make you (and developers) care about Android XR

From Gentle Monster frames to reference designs for 3rd-party smart glasses, Google has serious ambitions for Android XR in 2026.

What you need to know

- At Google I/O 2025, Google showed how apps like Google Maps, Messages, Calendar, and Photos will work on Android XR glasses.

- Eyewear brands like Gentle Monster and Warby Parker will design Android XR glasses for Google.

- Samsung and Google will co-create a "software and reference hardware platform" for other OEMs to make smart glasses.

- The new Android XR Developer Preview 2 adds tools such as stereoscopic 180° and 360° video support for Android XR apps.

It's been a quiet few months since Google announced Android XR, but at Google I/O 2025, the company outlined its ambitious plans for Gemini-powered smart glasses and AR glasses, trying to court developers to build XR apps — or their own smart glasses!

Google didn't officially confirm a name for its Android XR glasses, but it's "partnering with innovative eyewear brands, starting with Gentle Monster and Warby Parker, to create stylish glasses."

Just as Meta relies on Ray-Ban and (allegedly) Oakley to design its Meta AI glasses, Google wants expert design help for its own smart glasses. Google confirmed the glasses will have a "camera, microphones and speakers," plus an "optional in-lens display."

In other words, Google plans to sell stylish Gemini AI glasses that sync with your Android phone, but only some will have holographic tech for Google apps like Maps and Messages. Its non-XR glasses will be more affordable, competing directly with Ray-Ban Meta glasses.

Samsung, which first used Android XR for its Project Moohan headset coming out later in 2025, will now use Android XR for its smart glasses, too. Google announced both companies will team up on a "software and reference hardware platform" to help other manufacturers "make great glasses."

One company, XREAL, has already used an early version of this software platform, announcing its new Project Aura AR glasses on stage at I/O. But Google hopes that more brands will follow.

How Android XR could look on AR glasses

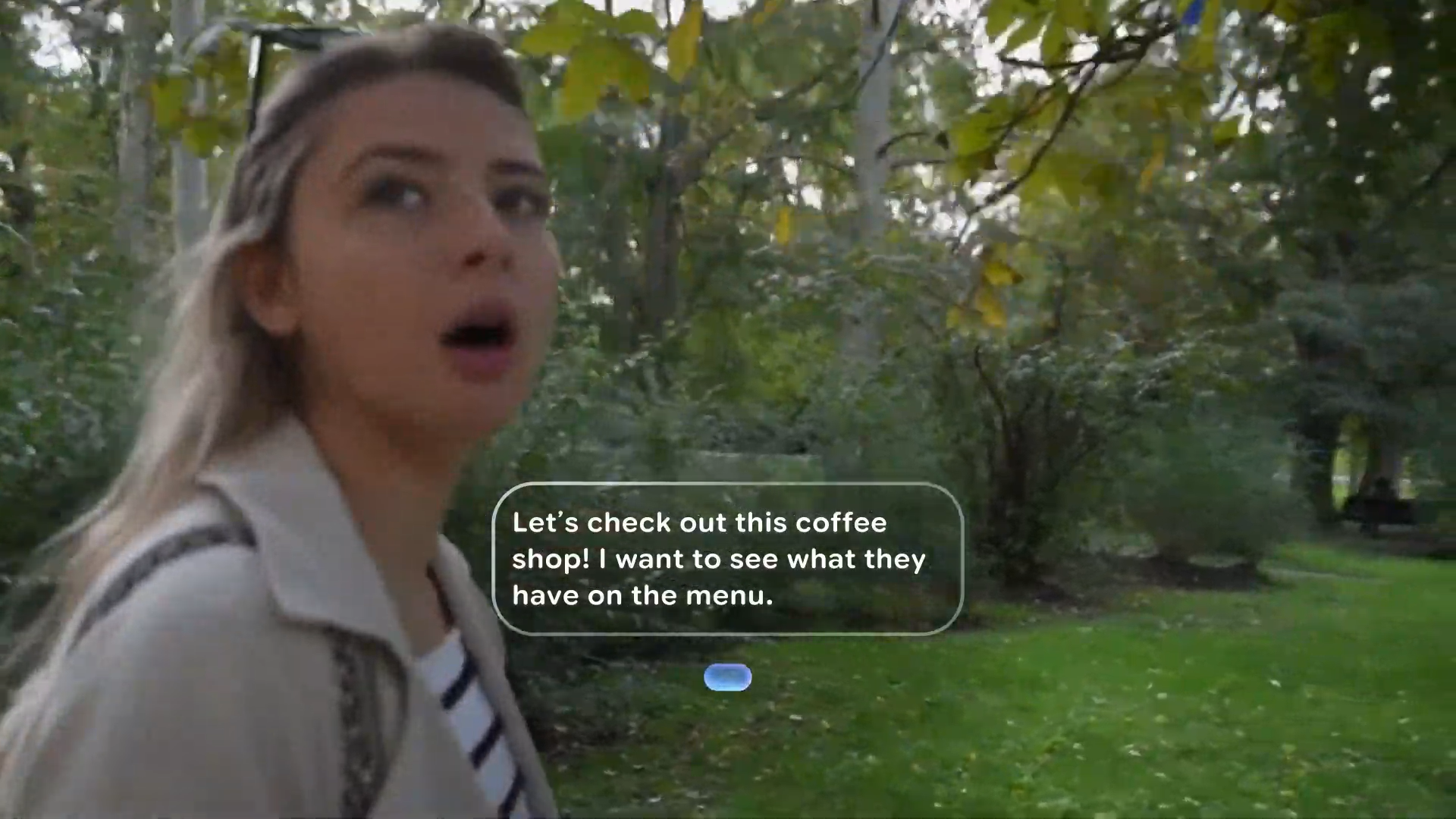

During the I/O 2025 keynote, Google showed an idealized vision of how its apps would look on an XR glasses display.


In one clip, the Android XR user tells Gemini to set a Calendar birthday event. It pops up in her vision so that she can confirm the details are correct; then she follows up to create a Task to buy a present before the party, all while cooking dinner.

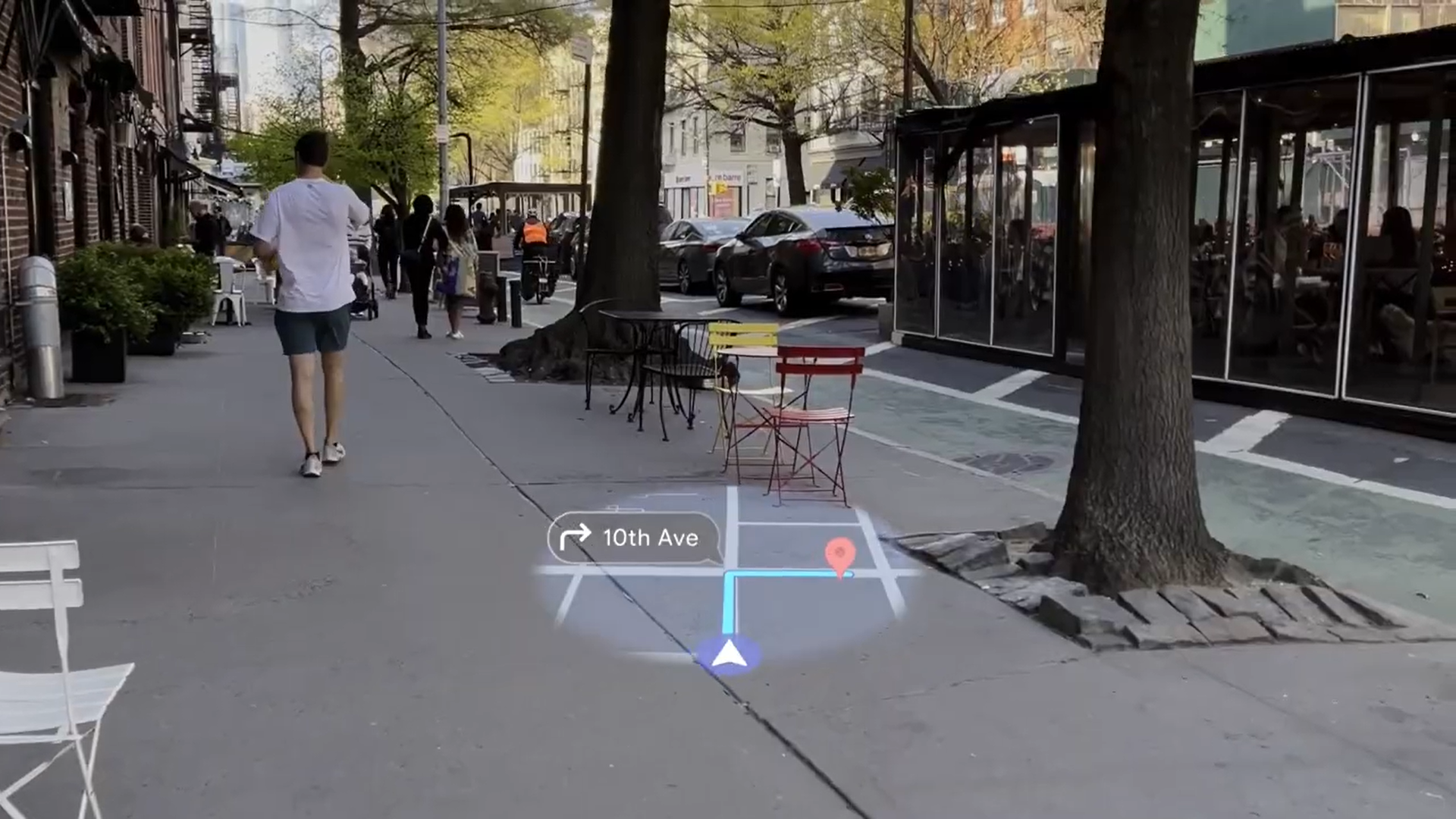

In another, the Android XR glasses wearer asks Gemini for a good nearby ramen place, then has the AI guide them to the destination using directional arrows and a mini-map in a portion of their vision. It looks similar to Maps Live View on your phone, only now your phone would be in your pocket.

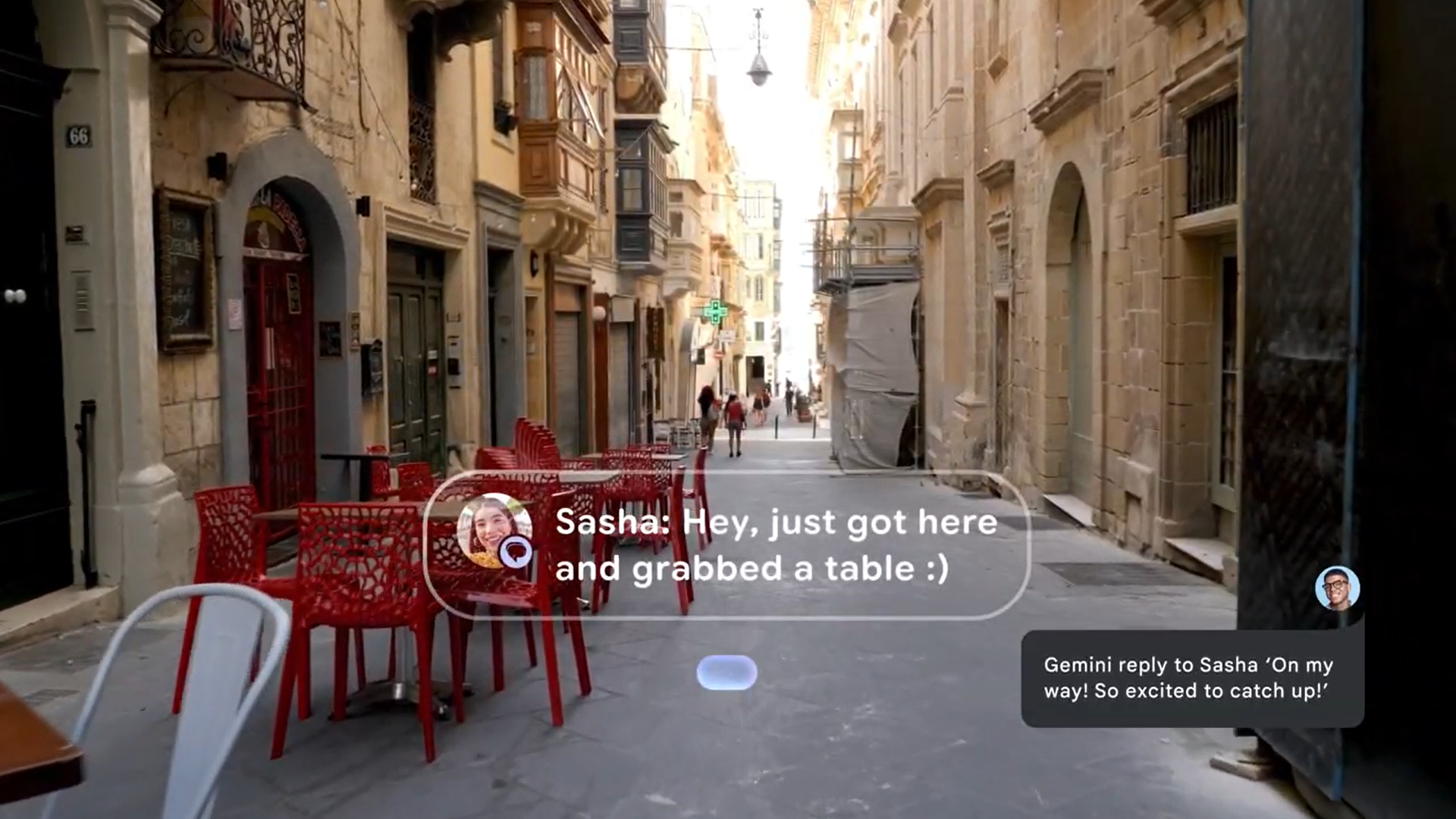

Other Android XR app examples included a Google Messages pop-up, a live Google Translate conversation, and a quick Photos snap during a dance party.

All of them will rely on Gemini voice commands, though we'd love to see alternative control options in the future. Google promises that Gemini will "see and hear what you do" through the camera and "remember what's important to you" when asking questions later.

As seamless as these XR apps look in concept, Google will need to create hardware that's lightweight and efficient enough for portable processing, but with good enough resolution to make text clearly visible in the bottom third of your vision.

That will be a tall order to deliver in untethered, consumer-priced glasses! Android Central will be demoing Google's XR glasses at I/O today, and we'll report back whether the hardware lives up to Google's software promises.

The new Android XR Developer Preview 2 sounds robust

While Google will eventually target consumers with Gentle Monster-designed glasses, its I/O presentation focused more on developers. In a blog post, it shared the new Developer Preview 2 tools available today, which were shaped by feedback on the first preview.

While it all gets very technical, here's a simplified breakdown of the key dev features coming to Android XR:

- Apps can now use the background hand tracking SDK (with user permission), with 26 posed hand joints tracked.

- The Jetpack SDK now supports stereoscopic 180° and 360° videos.

- Devs can draw a subspace for 3D content and choose how much space it takes on the display, so it's not too large in your vision.

- Unity OpenXR games get a performance boost, including dynamic refresh rate.

- You can "integrate Gen AI into your apps" using Firebase AI Logic for Unity.

Currently, all of these tools focus on traditional XR for headsets, not glasses. The post explains that they are still "shaping" dev tools for smart glasses and that they'll share more details before the end of 2025.

Google also promises that the Android XR Play Store will launch later in 2025 with compatible 2D apps. But devs that create "differentiated" XR apps with spatial panels, environments, 3D models, and other XR-specific traits will get special attention.

Michael is Android Central's resident expert on wearables and fitness. Before joining Android Central, he freelanced for years at Techradar, Wareable, Windows Central, and Digital Trends. Channeling his love of running, he established himself as an expert on fitness watches, testing and reviewing models from Garmin, Fitbit, Samsung, Apple, COROS, Polar, Amazfit, Suunto, and more.
