Meta and Google made big promises at AWE, but AR glasses' future is still messy

Reps from Google, Meta, XREAL, Qualcomm, and other industry titans all debated when and how augmented reality would "go mainstream."

Smart glasses are undeniably exploding in popularity. Speakers at Augmented World Expo 2025 panels on smart glasses were passionately bullish on the category's future. But when asked specifically about augmented reality displays in glasses, the experts from Google, Meta, and others split into complicated, divergent visions.

In panels like "What does it take to achieve mass market adoption of AR products?", speakers from Google, Meta, and Qualcomm hyperfixated on AI and questioned whether "AR glasses" even need a visual display.

They assured listeners that the world would inevitably adopt AR glasses, and that these would eventually "replace our phones" generations from now. But they had very different ideas on how AR glasses would emulate smart glasses' success, given that smart glasses are more affordable and naturally stylish.

AR glasses today have a growing market as extended displays for gaming and streaming. Some of my favorite demos at AWE came from Viture and XREAL AR glasses, which had substantially improved field of view (FoV) and color since I last tried AR glasses.

But that at-home entertainment niche aside, Big Tech wants AR glasses to serve as stylish, all-day companions for everyday consumers. That's a much taller order.

Here's everything I pieced together from AWE 2025 panels about how AR glasses will evolve over the next few years — including the ways that Meta and Google, in particular, see AR glasses' future very differently.

AR glasses don't have one clear blueprint for success like smart glasses

During her panel "State of Smart Glasses and What's Around the Corner," Meta VP of AR Devices Kelly Ingham addressed the obvious challenges facing AR glasses today.


"Displays are still heavy, right? How do you put a display in front of your eyes and have it be comfortable?" she asked rhetorically. "Is it monocular, or is it binocular? You add more weight, you add more cost."

XREAL's Ralph Jodice, whose company recently made Android XR-powered AR glasses, noted that while XREAL has grown FoV from 30° to 57° in the last couple of years and will soon hit 70°, its customers aren't satisfied. The main demand is "Make my FoV wider, make my glasses smaller," and doing both is an obvious "challenge."

Ingham concluded that there will be a "broad set of categories" for AR glasses, from 30-degree displays for notification windows to 70-degree displays for full-on entertainment, and from cloud-based AI through your phone to a "full computer on your head." There's no one set path to success.

Bernard Kress, Director of Google AR, made a similar point in the aforementioned panel on mass market adoption of AR. He described a range of potential AR "market segments":

- Monocolor AR glasses with basic, subtle text function

- Full-color single-display glasses like the Google AR prototype

- Binocular, full FoV glasses like Meta Orion or Snap Spectacles with higher power demands and "bigger form factors"

- Affordable "smart goggles" like XREAL One that don't need to "look like regular glasses"

- Full optical see-through (OST) headsets like the HoloLens

What Kress didn't say is that this nebulousness makes it very difficult to imagine AR glasses' future, because it's far too complicated for non-techies to understand.

Smart glasses also have a range of form factors. But most people will see Ray-Ban Meta glasses as the template for smart glasses: built-in speakers and cameras, AI assistance, and phone connectivity in a "normal" glasses design.

We'll see a wider range of looks with the rumored Oakley Meta glasses or Android XR glasses designed by Warby Parker and Gentle Monster, but the core experience should be consistent and appealing to everyday folks.

Google thinks AR needs a killer app first to succeed

During the mass-market AR panel, the moderator asked Google's Kress and reps from Meta and Qualcomm, "Do smart glasses have to have a display to be AR?"

"I would say, 'Not necessarily,'" responded Edgar Auslander, a Senior Director at Meta. As long as your "reality is augmented," he argued, the augmentation could be audio, visual, or any other sensory change. By that definition, Meta AI glasses are "AR glasses," which is questionable.

Kress took a different perspective. For him, AR glasses must have a display, but must also offer "something that cannot be replaced by audio." Multimodal AI — the killer app for smart glasses that analyzes what you see — might benefit from a display, but it already works without one.

Kress, who worked on the original Google Glass, explained that once the novelty wore off, consumers had "no real use for it." That's why Glass transitioned to enterprise, where developers could get clear "return on investment (ROI)" in areas like automotive, avionics, surgery, and architecture.

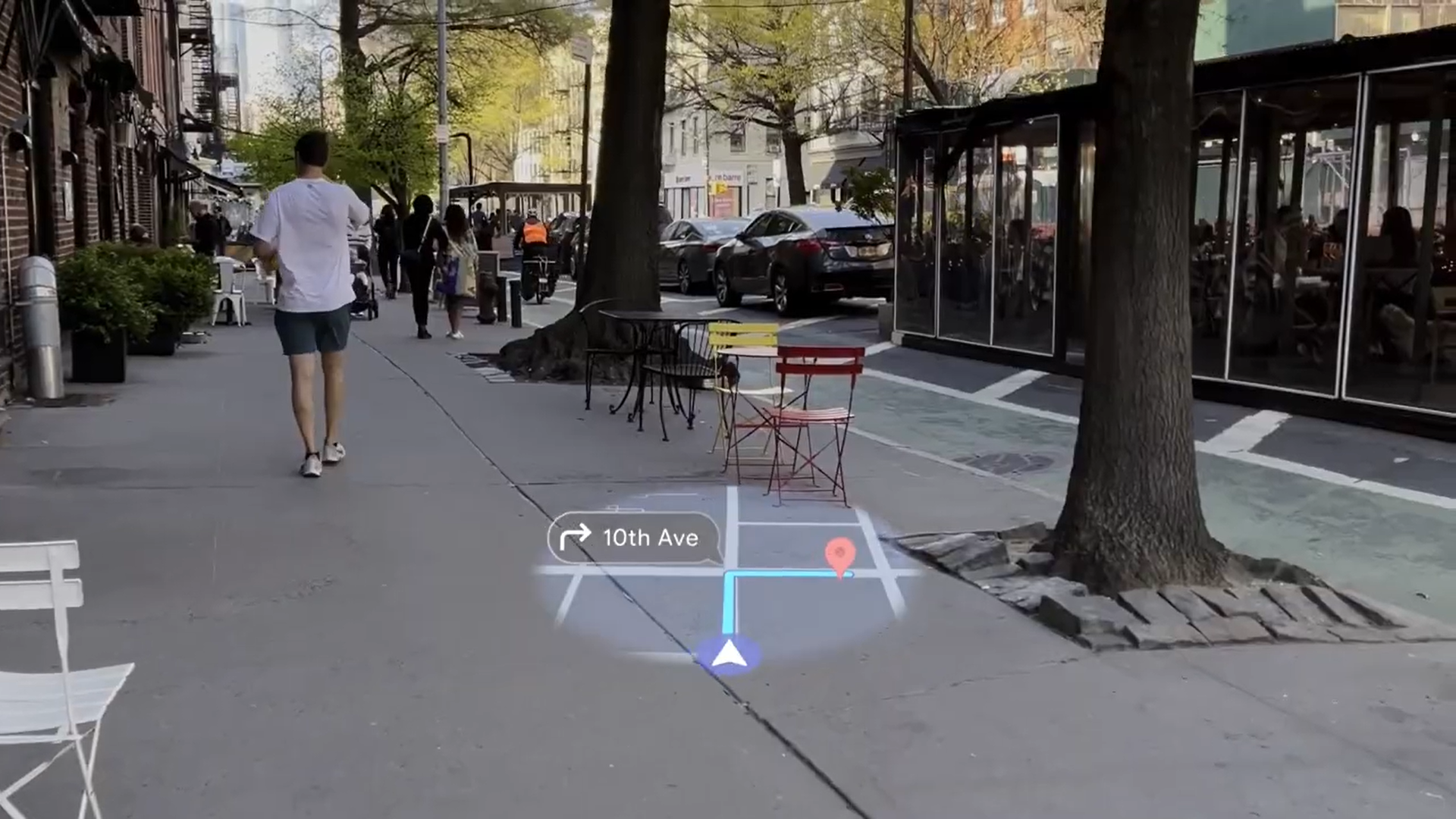

So what will change with AR glasses now? Kress believes the visual component only becomes necessary when you can achieve "World Locking," where 6DoF tracking and a visual positioning system let glasses accurately point to a specific, physical element of your world. Right now, the Android XR UI can only hover in empty space.

You also need affordable, power-efficient microLEDs to display the data clearly and efficiently, Kress noted. But software is the key. It's an unsurprising perspective from a Google engineer, given the company's focus on Android XR while leaving hardware to Samsung and others.

Meta: If they build AR hardware, consumers will come

Meta's Ingham had a more hardware- and economics-driven perspective on AR glasses' future: Smart glasses need to become mainstream first, or AR glasses have no chance.

Meta has sold two million smart glasses, and total glasses sales "better be in double digits" — meaning tens of millions — by 2027, Ingham says, or that's a warning sign for the category.

She explained that OEMs like Apple and Google need to join Meta in selling consumer smart glasses before retailers like Best Buy and Target will treat smart glasses as a "whole category." Those retailers will then "start pushing these products" with more aggressive marketing to consumers.

Once consumers adopt AI glasses en masse and "have been trained" with audio AI interfaces, Ingham argues, they'll "start expecting more," priming them for more powerful AR glasses. But she believes this won't take place until "after 2030."

Ingham says that's why Meta is focused on building early prototypes like Meta Orion now, to prepare for that stage of the market's evolution. Meta is also addressing smart glasses' pain points, such as weight, audio quality, limited variety of styles (especially for women), and cost.

The one obvious pain point she ignored was battery life, and I found that telling. No one at AWE could really give a clear answer on how full-fledged AR glasses will ever be petite enough to appeal to mainstream consumers and have the battery life to last all day.

Should AR hardware or software come first? Google and Meta fundamentally disagree

Android XR is currently restricted to Project Moohan, but Google will add support for AR and smart glasses software later in 2025. Meta, meanwhile, has opened up its VR software with Horizon OS, but has no plans to unlock its glasses OS to devs yet.

XREAL's Jodice, a major Android XR partner, argues that the open system and easy access to an "ecosystem of devices" should tempt developers to jump onto the ground floor of AR app development.

But Meta's Ingham countered that their "focus" is to bring "enough devices to market" and "create better systems" first. "When you get to 10 million devices," she says, you can "develop an app and actually make money, right?"

Android XR might create a unified XR glasses system that makes it easy for developers to hit a wide range of products at once, but those devices need to exist for developers to get ROI. So while bigger developers can join Android XR on the ground floor, smaller devs might wait for Samsung to make an actual smart glasses product first.

Michael is Android Central's resident expert on wearables and fitness. Before joining Android Central, he freelanced for years at Techradar, Wareable, Windows Central, and Digital Trends. Channeling his love of running, he established himself as an expert on fitness watches, testing and reviewing models from Garmin, Fitbit, Samsung, Apple, COROS, Polar, Amazfit, Suunto, and more.
