Tech Talk: Content Credentials are coming to your phone's camera. What are they?

What is real and what's not?


Welcome to Tech Talk, a weekly column about the things we use and how they work. We try to keep it simple here so everyone can understand how and why the gadget in your hand does what it does.

Things may become a little technical at times, as that's the nature of technology — it can be complex and intricate. Together we can break it all down and make it accessible, though!


You might not care how any of this stuff happens, and that's OK, too. Your tech gadgets are personal and should be fun. You never know though, you might just learn something ...

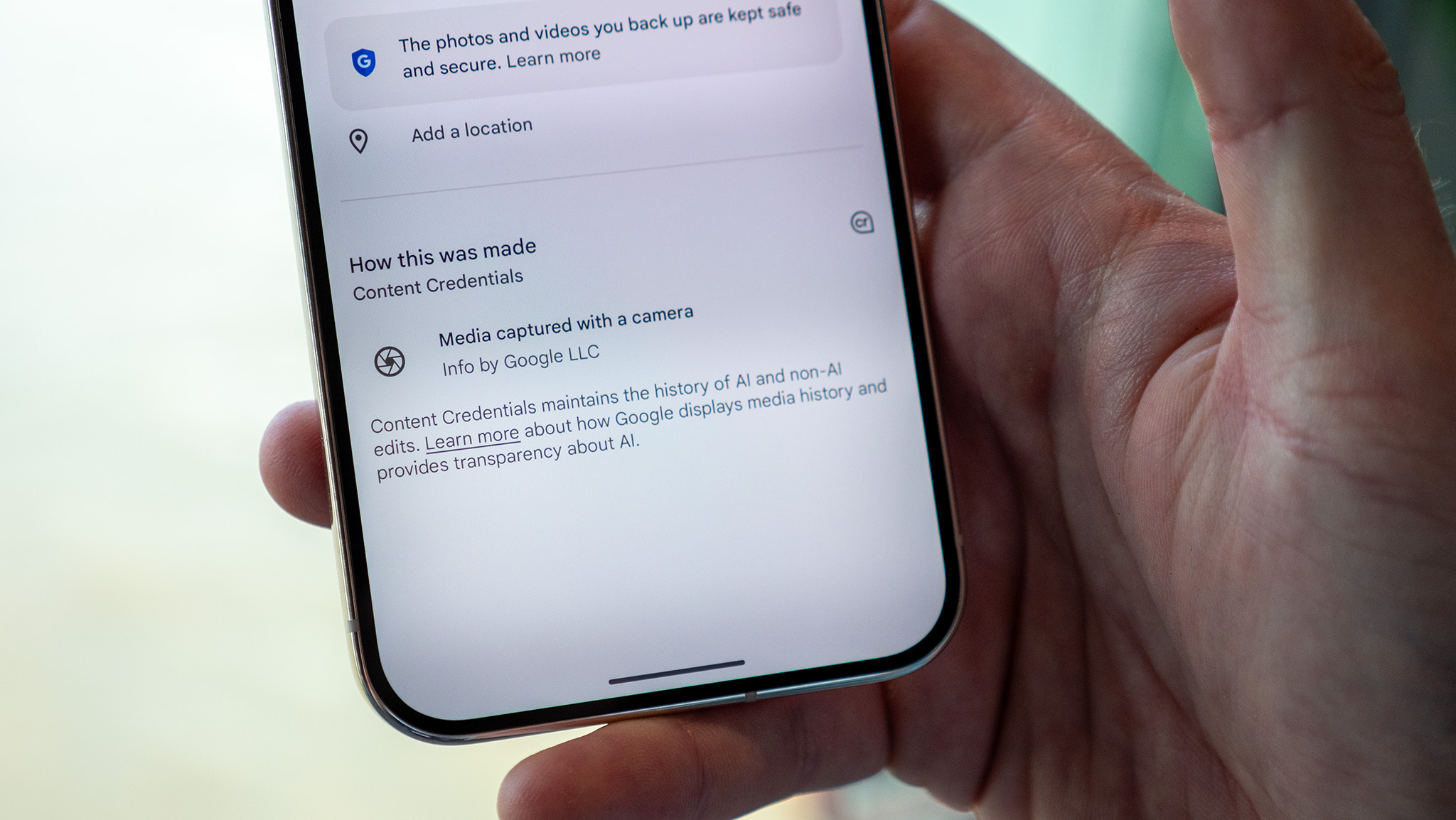

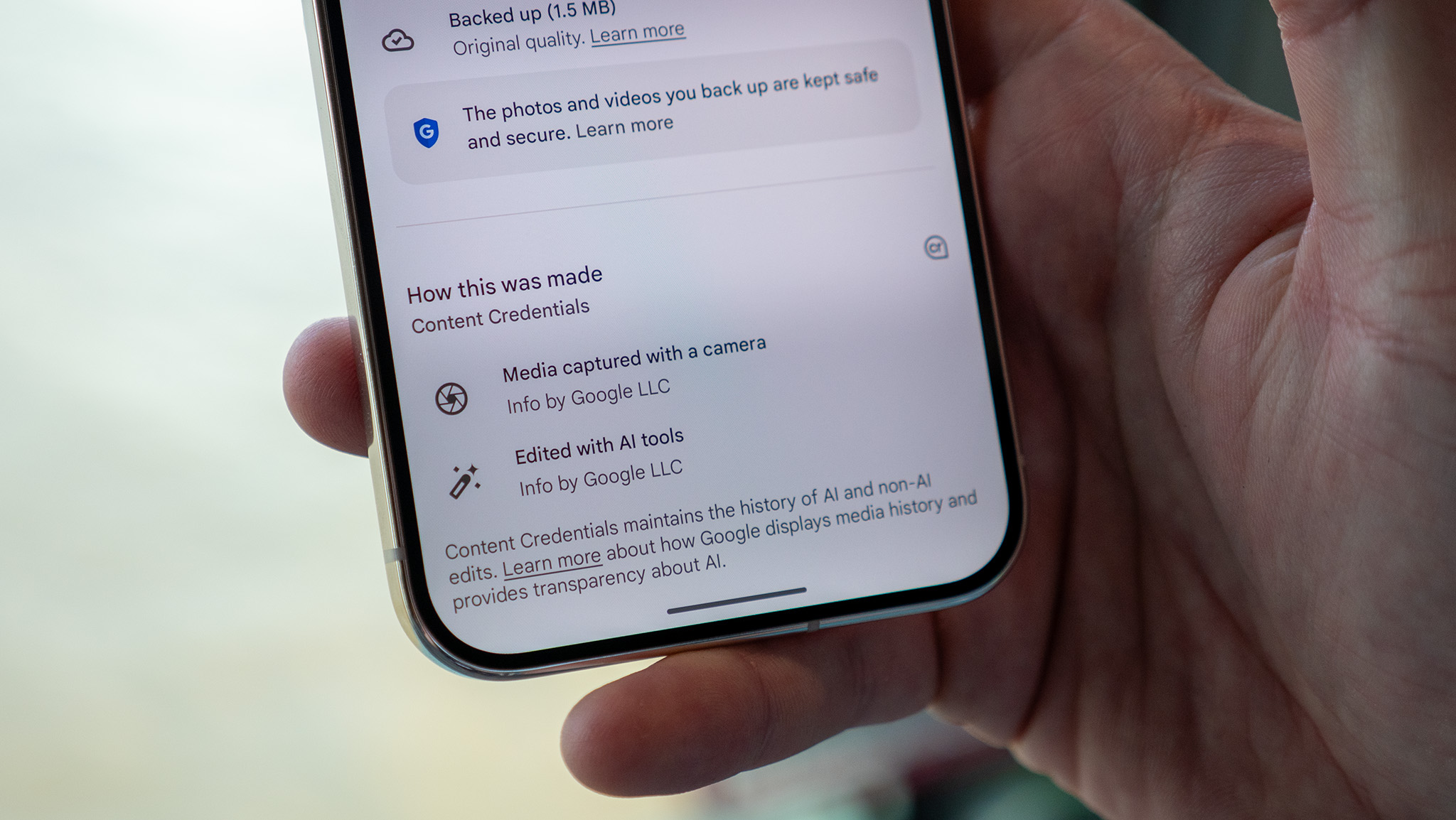

What are Content Credentials?

Content Credentials are the latest step to address a growing problem with fake or edited imagery. It's not the first try, and it's not even a popular idea among creators, but companies like Meta have been trying to get a handle on it for a while. What's needed is an industry standard built into the cameras most people use.

That's what Google is trying to do here. Starting with the Pixel 10, every photo will have a sort of trail explaining how it was created. When used with existing tools like SynthID and the metadata added by Google Gemini, you'll be able to see whether a photo went through any sort of user-initiated AI editing.
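If you're curious what a "trail" like that could look like under the hood, here's a tiny sketch in Python. To be clear, this is not the real Content Credentials format or anything Google has published; every name in it (`DEVICE_KEY`, `add_step`, `trail_is_intact`, `touched_by_ai`) is made up for illustration. It assumes each step in a photo's life gets a signed record that points back at the record before it, so quietly changing one entry breaks the chain.

```python
import hashlib
import hmac
import json

# Hypothetical signing key for the demo. A real phone would be expected to
# keep a private key in secure hardware, not a string in the source code.
DEVICE_KEY = b"demo-key-not-a-real-secret"


def sign(record: dict) -> str:
    """Return a keyed SHA-256 signature over a record's contents."""
    payload = json.dumps(record, sort_keys=True).encode()
    return hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()


def add_step(trail: list, action: str, used_ai: bool, image_bytes: bytes) -> list:
    """Return a new trail with one more signed step appended."""
    record = {
        "action": action,
        "used_ai": used_ai,
        "image_hash": hashlib.sha256(image_bytes).hexdigest(),
        # Link to the previous step so entries cannot be swapped or dropped.
        "previous": trail[-1]["signature"] if trail else None,
    }
    return trail + [{**record, "signature": sign(record)}]


def trail_is_intact(trail: list) -> bool:
    """Check every signature and every link back to the previous step."""
    previous = None
    for entry in trail:
        record = {k: v for k, v in entry.items() if k != "signature"}
        if record["previous"] != previous:
            return False
        if not hmac.compare_digest(sign(record), entry["signature"]):
            return False
        previous = entry["signature"]
    return True


def touched_by_ai(trail: list) -> bool:
    """True if any recorded step says AI was involved."""
    return any(entry["used_ai"] for entry in trail)


trail = add_step([], "captured by camera", False, b"raw pixels")
trail = add_step(trail, "sky replaced", True, b"edited pixels")
```

In this toy version, `touched_by_ai(trail)` reports the AI edit, and if someone flips `used_ai` to `False` on the second entry without being able to re-sign it, `trail_is_intact` fails. That's the basic idea: the history travels with the photo, and tampering with the history is detectable.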

Why should I care?

Millions of people take photos with their phones every day, and a lot of those get shared. Putting a good camera phone into everyone's pocket and connecting it straight to the internet makes sharing easy, and we like seeing each other's photos. Sharing pics is natural and fun.

Not all of those pictures are real, though. You've always been able to edit photos, even "old" photos taken before the digital camera age, and people have always been doing it. Now that AI makes it so easy, it can be problematic.

Sure, most fake pics are just that: photos edited to be unreal and funny, or outrageous, or whatever. The kind of things friends share with each other. But not all of them.

We went through all this during the 2024 U.S. election. Some people decided it would be a good idea to make fake photos showing their favorite candidate in a positive light or that candidate's opponent in a negative light. Or both. These pictures were shared with the intention of influencing people and, in turn, the election.

Did it succeed? I have no idea. I'm sure plenty of people believed what they were seeing, especially as AI-generated imagery becomes increasingly convincing. I know this because many of the photos were heavily shared. Whether that translated into votes is mostly unknown.

But it could have.

That's just an easy example. Fake photos can be used for fraud, extortion, embarrassment, or any other horrible thing people often do to each other. When it's this easy to take a photo, edit it to tell whatever story you want, and then share it, that's not good. I've been fooled by a fake picture. You've been fooled, too, and there's a good chance someone close to you, like a parent, has also been fooled.

Content Credentials is a major step in the right direction

Content Credentials sounds like a good idea, but creators don't like it. If I take a photo of a sunset and have Adobe touch up the colors to make it pop, it may be identified as edited by AI. That's because it probably was. AI carries a stigma when it comes to photographs, and that attitude will need to change because we deserve to know if what we're seeing is real or fake. And yes, altering the colors of a landscape photo makes it fake.

Content Credentials will help keep fake photos from going viral, but it won't be foolproof. Nothing ever is. People dedicated to working with AI to create or edit images will see what they can do to get around being identified, and some will succeed, at least part of the time.

In the end, though, it makes it a little easier for us to trust what our eyes are seeing, and that's the important part. This may have started with the Pixel 10, but it's also said to be rolling out to other Android and iOS devices soon. It's more than a band-aid even if it's not a foolproof solution, and more of this technology will be developed because the need isn't ever going to go away.

The latest AI phone

The Pixel 10 is Google's latest smartphone, featuring an upgraded Tensor chip that promises better photos, enhanced AI processing, and more.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.
