AI could harm your critical thinking skills. Should that change how you use it?

A study from MIT's Media Lab looked into the risks of AI dependency on our brains. The results were scary.

AI Byte is a weekly column covering all things artificial intelligence, including AI models, apps, features, and how they all impact your favorite devices.

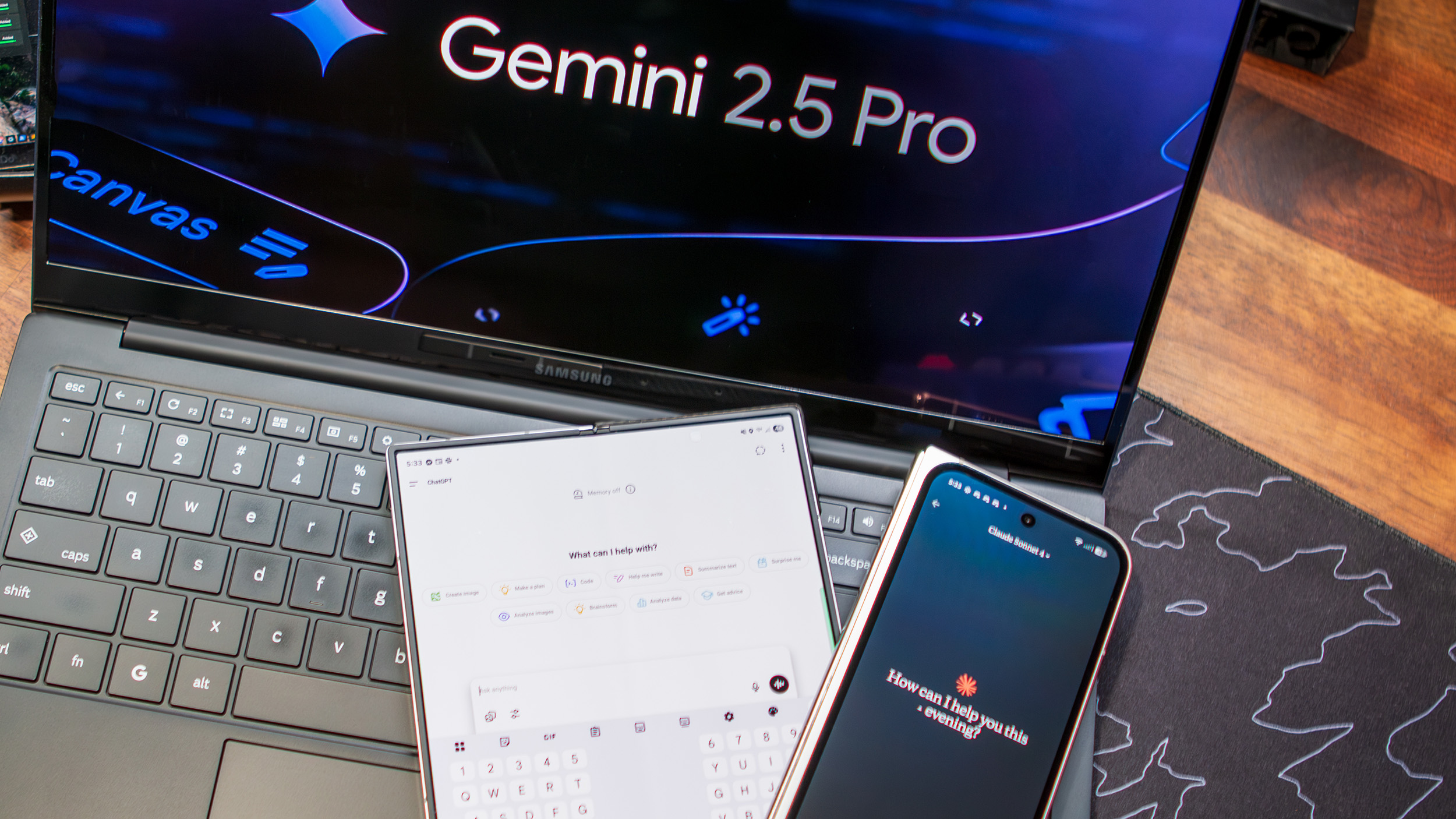

OpenAI made artificial intelligence mainstream with the release of ChatGPT in 2022. AI and machine learning were around well before then, but ChatGPT's emergence marked a turning point. Soon after, AI was everywhere: on our phones, in our favorite apps, and part of our most-used services.

Whenever something new pops up, like ChatGPT, it takes a while for researchers to learn its true effects on our minds and daily lives. That process is only beginning, but an early study from MIT's Media Lab highlights some concerning trends regarding AI usage. It hasn't been peer-reviewed yet, and its scope is small: 54 participants aged 18 to 39 from the Boston region.

The study found that people regularly using ChatGPT to write essays had the lowest brain engagement of the groups studied and "consistently underperformed" at all levels compared to the other groups, which used either Google Search or only their own brains to write essays. The study demonstrates "the pressing matter of a likely decrease in learning skills" resulting from LLM use, per the report.

If you use AI tools, that probably sounds scary. You might be wondering whether using generative AI and large language models (LLMs) regularly puts your critical thinking skills at risk. While there's reason for concern, using AI for the right things probably won't be as dangerous to your mind as you might think.

What the MIT study found

First, it's important to understand the scope of the study and what its results actually mean. The team of researchers at MIT wanted to figure out how using AI to write an essay affected participants' learning and brain function. The study contained three groups: a brain-only group, an LLM group, and a search engine group.

The participants were asked to write three essays, one per session, while remaining in their assigned group. Those in the brain-only group, for example, could not use any external tools to write their essays in any of those sessions. Then, in the fourth and final session, LLM users were asked to use only their brains to write an essay, and brain-only users were permitted to use LLMs. Eighteen participants took part in this final test.

All the while, researchers measured brain activity across 32 regions using an electroencephalogram (EEG), a test that records the brain's electrical activity. The tests aimed to record not only how cognitive function behaved during essay writing in the brain-only, LLM, and search engine groups, but also how it changed following prolonged LLM usage.

Aside from measuring brain activity with EEGs, the researchers conducted interviews with participants after each session. They also used human teachers to score essays while having an "AI judge" evaluate the works.

The results painted a clear picture: brain activity and engagement decreased as reliance on external tools increased. The study found that the brain-only group had the strongest level of brain connectivity, with the search engine group displaying intermediate levels and the LLM group showing the weakest.

When the LLM users were asked to use only their brains to write the final essay, the participants "showed weaker neural connectivity and under-engagement of alpha and beta networks." In contrast, brain-only participants who were then permitted to use an LLM for the final essay "demonstrated higher memory recall, and re‑engagement of widespread occipito-parietal and prefrontal nodes."

In layman's terms, those who used an LLM to write the essays produced lower-quality work, couldn't recall what they had submitted, and had lower brain engagement. Additionally, those who had relied on LLMs before being asked to only use their brains performed worse than the brain-only group.

"The use of LLM had a measurable impact on participants, and while the benefits were initially apparent, as we demonstrated over the course of 4 months, the LLM group's participants performed worse than their counterparts in the Brain-only group at all levels: neural, linguistic, scoring," the study found.

What does this mean for you, an AI user?

The study's results have yet to be peer-reviewed, but let's face it, they aren't exactly surprising. Like a muscle, your brain needs to be used regularly to stay in shape. If you stop using your brain to write essays, reports, emails, or text messages, those skills will start to deteriorate.

People who vouch for AI in learning and work environments often compare chatbots and LLMs to a calculator. When the calculator first became widespread, teachers wanted it banned. Now, calculators are allowed in educational settings and are a vital part of our everyday lives. They're on our phones, computers, and even smartwatches.

I do think generative AI is like a calculator, but maybe not in the way its advocates think. As a writer, I haven't used complex math regularly since my early college days, when I was getting my required math courses out of the way. Since then, a calculator has been my best friend, and guess what? I'm not as good at mental math as I was before.

Similarly, I haven't regularly handwritten words in years — I'm an all-digital person now and have been for some time. When I get handed a form at the doctor's office, my penmanship isn't nearly as good as it once was, and I get hand cramps pretty quickly. All that is to say, the MIT study's findings are really just reinforcing common sense. If you create a dependency on artificial intelligence and stop using a particular skill, you will absolutely lose it.

To me, that's not as scary as it might sound. It all depends on how you incorporate AI into your daily life. Want to use generative AI to make a wallpaper or a cool image to share with your friends? Want Gemini to replace Google Assistant as your voice-based helper on an Android phone? Need it to analyze long documents? I don't think any of these use cases present a significant risk of harming your cognitive abilities.

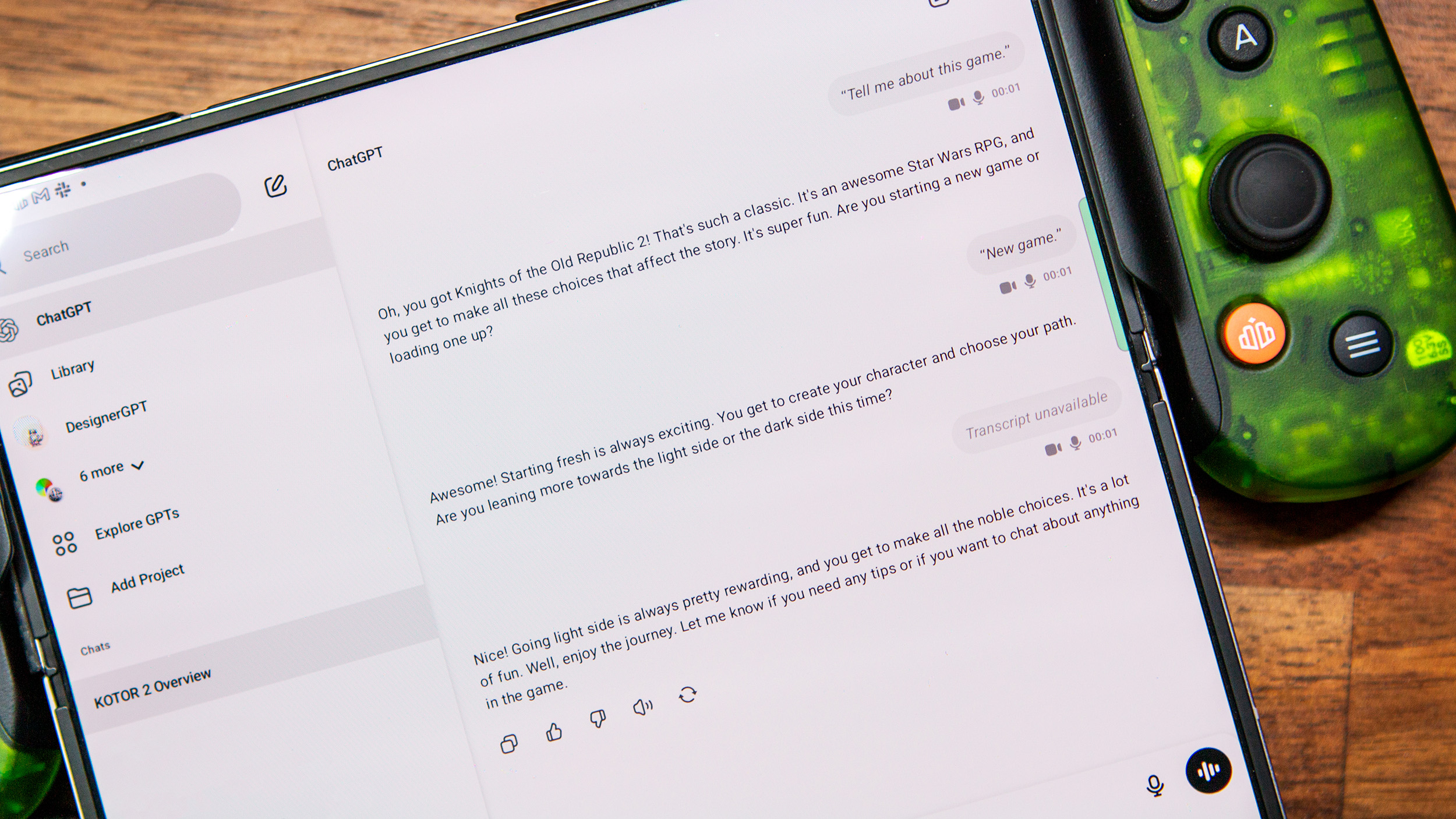

When you use AI to do something you can do yourself, you're at risk of losing those skills. If you use ChatGPT to write all your emails, documents, and reports, there is a good chance your skills will start to fade away — that's essentially what the MIT study found.

But that's not how I use artificial intelligence, and I don't think that's how most people use it, either. I use AI to do things I couldn't possibly do, and that's why it's helpful. That includes analyzing and summarizing documents that are thousands of pages long or generating an image that I couldn't create because I'm no artist. In those instances, AI is expanding your capabilities, not replacing them.

If you look at AI as a way to elevate your possibilities rather than replace the things you'd rather not do, you probably don't need to change your AI usage habits. However, if you want to use AI to eliminate certain parts of your workflow, think about whether you're willing to lose those skills in the process.

Brady is a tech journalist for Android Central, with a focus on news, phones, tablets, audio, wearables, and software. He has spent the last three years reporting and commenting on all things related to consumer technology for various publications. Brady graduated from St. John's University with a bachelor's degree in journalism. His work has been published in XDA, Android Police, Tech Advisor, iMore, Screen Rant, and Android Headlines. When he isn't experimenting with the latest tech, you can find Brady running or watching Big East basketball.
