Google gives Gemini image markup tools to stop the app from guessing

You can finally make Gemini look where you point with new image markup tools.

What you need to know

- Gemini now lets you draw directly on images, so you can show the AI exactly what you mean instead of overexplaining in text.

- You can circle, highlight, sketch, or add notes, and Gemini uses those markings as context for analysis or edits.

- This appears to be a quiet server-side test, so you might not see the new tools immediately even if your app is updated.

Google appears to be rolling out a new image markup feature for its Gemini AI, allowing users to draw directly on photos to help the assistant analyze and edit images more accurately.

Gemini could already analyze images and edit them using Google’s newer image models, but the experience wasn’t always smooth. If a photo had multiple objects or details, you had to rely on carefully worded prompts and hope Gemini focused on the right thing.

That approach worked sometimes, but it often felt like talking past the AI. The new markup tools aim to fill that gap by letting you guide Gemini visually rather than verbally (via Android Authority).

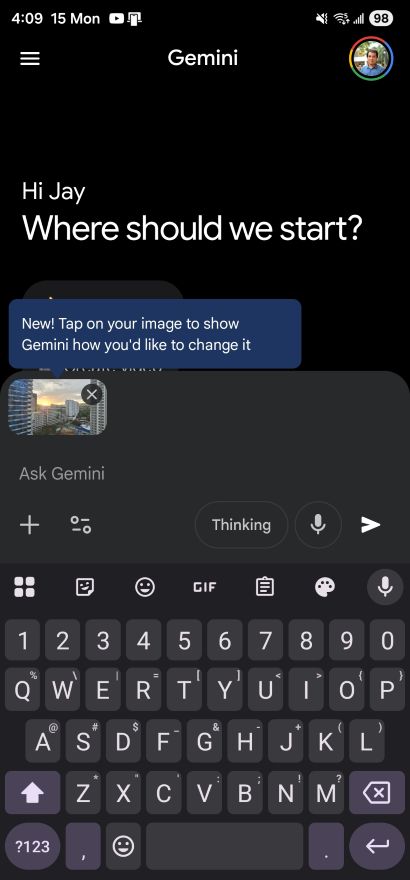

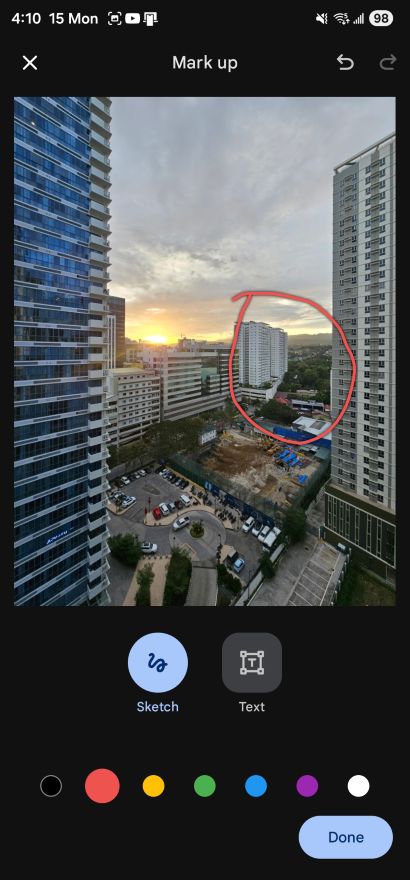

Once the feature is live for you, attaching an image in Gemini displays a brief note about a markup interface. From there, you can sketch directly onto the image or add text annotations. For example, you can circle an object, draw an arrow, highlight a section, or write a note. Gemini will use these markings as context when handling your request.

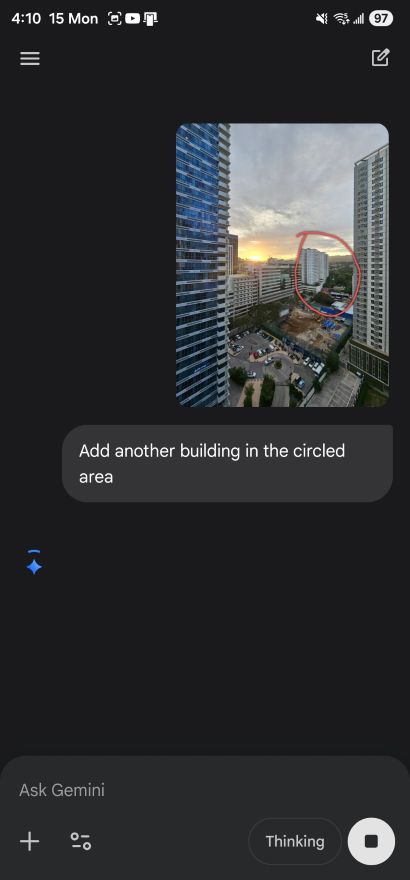

Real-world usage is still a mixed bag. In our testing, we asked Gemini to place a generated building next to a real one, but the AI fumbled the request. Instead of adding to the scene, it completely overwrote the actual structure with a fake one.

Gemini's markup tools work for edits and analysis

This change improves both image understanding and editing. If you want to know what something is, you can highlight the exact item instead of describing it. If you are editing a photo, you can mark the area you want changed instead of writing a long explanation.

Google has been preparing for this type of interaction for months. Earlier leaks showed image-highlighting tools meant to help Gemini focus better. The company has also been improving image editing, with models that support natural-language edits and keep subjects consistent. The markup feature brings these abilities together in a way that feels more natural for users.

Right now, availability seems to be limited, and Google hasn't formally announced the feature. This appears to be a server-side rollout, so even users with the latest Gemini app may not see it yet. Still, the fact that some users can already try it suggests Google may be preparing a wider release.

Jay Bonggolto always keeps a nose for news. He has been writing about consumer tech and apps for as long as he can remember, and he has used a variety of Android phones since falling in love with Jelly Bean. Send him a direct message via X or LinkedIn.
