How to use Google Lens in Google Photos

Look up content from your images using Google Lens in Google Photos.

Get the latest news from Android Central, your trusted companion in the world of Android

Google Lens has been an integral part of your phone's camera for a while now, and it has been part of Google Photos for many years as well. This means that even if there's no time to use Google Lens in the moment (such as when some weird-looking snake gets into the garden), you can come back later and use Lens for its AI object identification, text transcription, and context recognition to figure things out.

Google has added so many useful functions to Lens over the years. You can scan images in Google Photos for content and search the web directly from there. It even lets you copy bits of text that have been scanned from the picture and paste them anywhere. Here's how you can make the most of Google Lens in Google Photos.

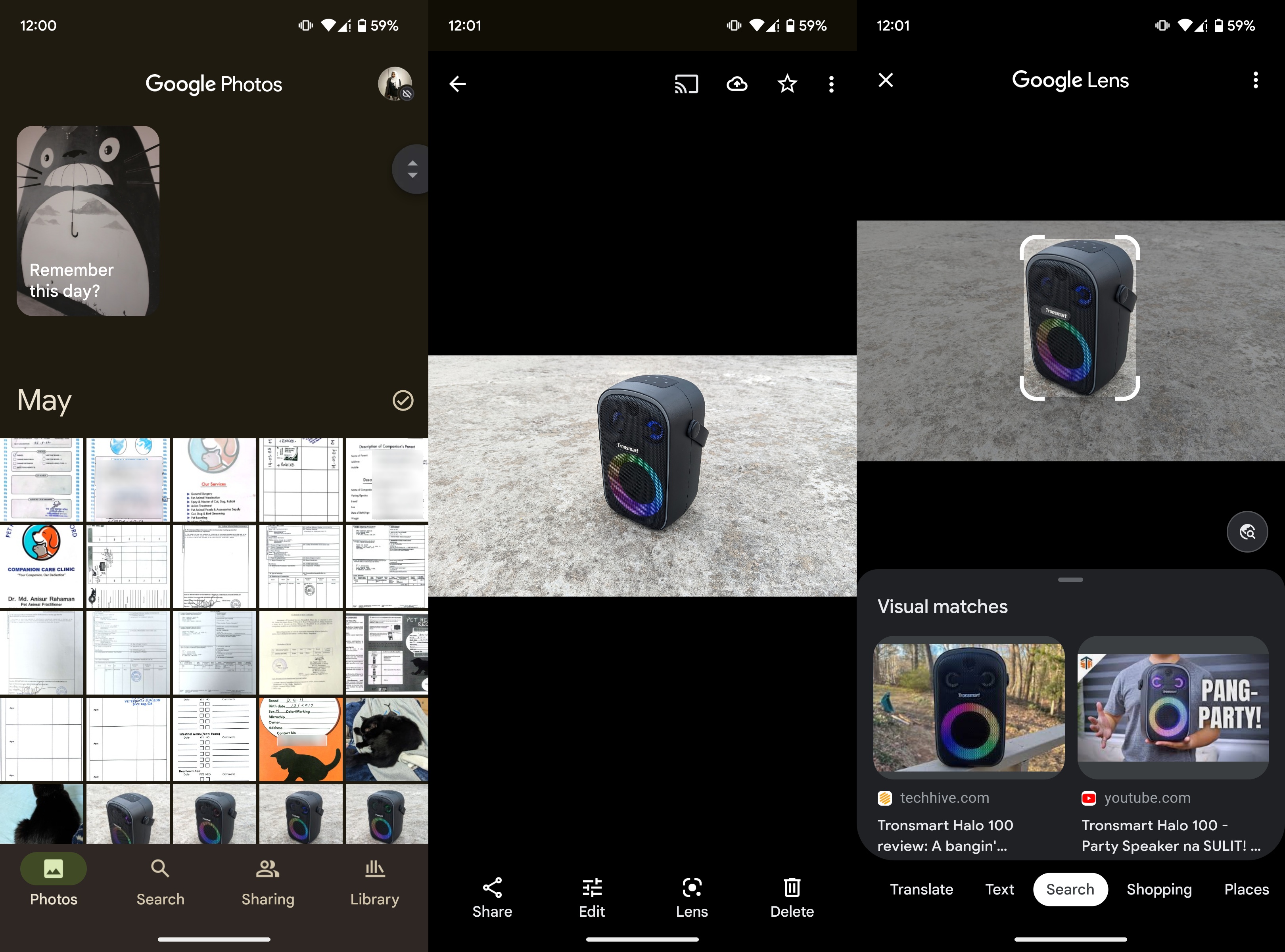

How to use Google Lens in Google Photos

1. Open the Google Photos app on your phone.

2. Select any image.

3. Tap the Google Lens icon in the bottom bar.

4. Browse through the options listed and choose one, such as Search or Text.

5. Tap highlighted text from the image to interact with it.

6. Choose one of the options that pop up, such as Copy text, Search, Listen, Translate, or Copy to computer.

Open your mind up to the possibilities by exploring the Google Lens integration inside Google Photos. You can do a lot more than just copy text from pictures. Google Lens scans an image and offers the following basic options in Photos:

- Translate

- Text

- Search

- Shopping

- Places

- Dining

Based on whatever is in the image, Google Lens can also do the following:

- Find similar products online and tell you where you can buy them.

- Scan barcodes and QR codes.

- Save phone numbers, addresses, and other contact details.

- Suggest book reviews and summaries.

- Add events to your calendar.

- Pull up facts, hours of operation, and more about landmarks and important places.

- Give you more information about artists and their artwork.

- Teach you facts about plant and animal species and breeds.

Google Lens can identify a wide variety of things, places, landmarks, and even people. It can recognize millions of CD and vinyl albums, movie posters, plants, animals, insects, foods, and even which popular franchise that funny T-shirt is from. You can use it to transcribe and translate text, and since it works with any photo you upload to Google Photos, it's an easy way to digitize and transcribe your grandma's old 4x6 recipe cards.

The next time you see something you don't understand or recognize out in the real world, snap a pic and use Google Lens at your leisure rather than when you're standing right there blocking the entrance to that new Asian fusion restaurant. Of course, it always helps if your camera takes the best picture possible, so if your phone's camera isn't that great, you might want to invest in one of the best Android camera phones.

Get on the Google One plan if you depend on Google Photos

Are you running out of space in Google Photos? Since a basic Google account only includes 15GB of free storage, it's very likely that you'll need more space soon (if you don't already). The easiest way to bid storage anxiety farewell is by subscribing to a Google One plan.

Google One has many tiers, with the entry-level plan starting at $1.99 per month for 100GB. This goes all the way up to a whopping 30TB of storage for $149.99 per month. If you're a T-Mobile user, you can save some money by getting access to exclusive Google One offers.

Google One

Get more storage for your Google Photos account by purchasing the Google One premium plan. This adds more space to your Google Drive as well as all your other Google services.

Namerah enjoys geeking out over accessories, gadgets, and all sorts of smart tech. She spends her time guzzling coffee, writing, casual gaming, and cuddling with her furry best friends. Find her on Twitter @NamerahS.