Google’s new model stops Gemini from going rogue in Chrome

And Google will pay $20,000 to anyone who can trick its new AI firewall.

What you need to know

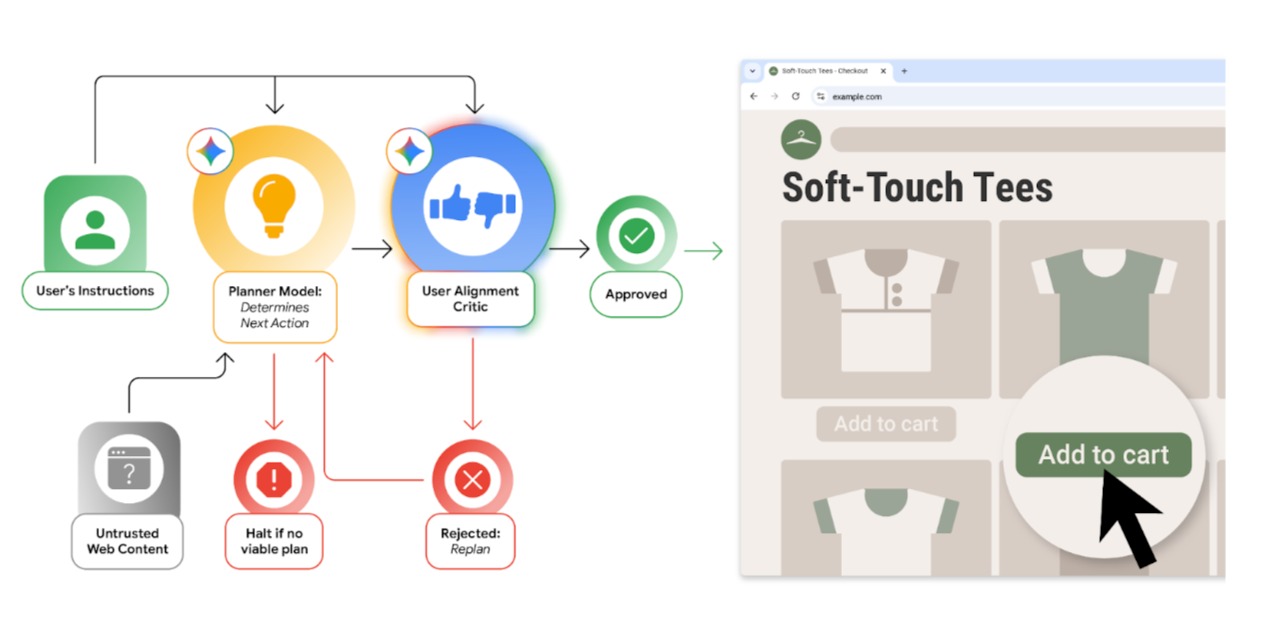

- Google has introduced the User Alignment Critic, a model that checks every AI action against your intent before allowing it to run.

- The Critic only sees metadata, not webpage content, so hostile sites can’t trick it with hidden prompts.

- Chrome now limits Gemini’s interactions to the domains tied to your task, blocking any unexpected site access.

Google is adding more Gemini features to Chrome, letting the assistant do more than summarize pages. As the browser starts acting on your behalf, though, the security stakes rise: an assistant that can take actions can also be tricked into taking the wrong ones. That risk is what drives Google's new security changes.

When Gemini first landed in Chrome for U.S. desktop users back in September, it marked the beginning of “agentic browsing,” Google’s term for letting AI take meaningful actions online. Instead of simply responding to prompts, the browser could actually carry out a workflow across pages and tabs.

However, letting AI click buttons and read websites on your behalf is exactly what security experts have warned about. A malicious site can hide instructions in its code or page elements and use them to steer the AI without your knowledge, a technique called indirect prompt injection.

To address this, Google is adding a new safety feature called the User Alignment Critic, which acts as a built-in gatekeeper for Gemini's proposed actions. Before the AI executes anything, the action is routed to this model. Instead of reading the webpage directly, the Critic receives only structured metadata that describes what the action will do.

Action must match intent

Isolating the Critic from raw web content prevents a hostile site from manipulating the safety system itself. If an action doesn’t match your stated intent, the Critic blocks it outright. This extra layer in Chrome helps keep the AI working as intended, even if some websites try to interfere.
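To make the gatekeeper idea concrete, here is a rough Python sketch of the pattern described above. It is not Google's implementation: the real Critic is a model, while this stand-in uses a simple rule, and every name here (`ActionMetadata`, `UserIntent`, `critic_allows`, `run_action`) is hypothetical. What it does illustrate is the design choice the article highlights: the check receives only a structured description of the action, never the page's text, so hidden instructions on a site have no direct path to it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ActionMetadata:
    """Hypothetical structured summary of a proposed action (no page content)."""
    kind: str           # e.g. "navigate", "click", "submit_payment"
    target_origin: str  # site the action would touch
    summary: str        # short description from the planning model


@dataclass(frozen=True)
class UserIntent:
    """Hypothetical record of what the user asked for."""
    goal: str
    allowed_kinds: frozenset


def critic_allows(intent: UserIntent, action: ActionMetadata) -> bool:
    # Rule-based stand-in for the Critic model. Note the signature:
    # it takes metadata only, so raw webpage text never reaches it.
    return action.kind in intent.allowed_kinds


def run_action(intent: UserIntent, action: ActionMetadata,
               execute: Callable[[ActionMetadata], str]) -> str:
    # Every proposed action passes through the check before it runs.
    if not critic_allows(intent, action):
        return f"blocked: {action.kind} on {action.target_origin}"
    return execute(action)


intent = UserIntent("compare laptop prices", frozenset({"navigate", "click"}))
print(run_action(intent, ActionMetadata("click", "https://shop.example", "open listing"),
                 lambda a: "done"))  # done
print(run_action(intent, ActionMetadata("submit_payment", "https://shop.example", "pay now"),
                 lambda a: "done"))  # blocked: submit_payment on https://shop.example
```

In a real system the rule would be replaced by a model judging whether the action serves the stated goal; the sketch's point is the separation of inputs, not the decision logic.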

This new architecture also expands Chrome’s origin isolation rules so Gemini can only interact with the specific domains involved in your task. It can’t wander off to unrelated sites or make unexpected network requests.
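A similar sketch can illustrate the domain restriction. Again, this is a hypothetical illustration rather than Chrome's code: `origin_of` and `OriginGate` are made-up names, and the sketch assumes "origin" means the usual browser triple of scheme, host, and port. The gate is built from the sites tied to the task and refuses everything else.

```python
from urllib.parse import urlsplit


def origin_of(url: str) -> str:
    # An origin is scheme + host + port; fill in default ports so that
    # "https://a.example" and "https://a.example:443" compare equal.
    parts = urlsplit(url)
    port = parts.port or {"http": 80, "https": 443}.get(parts.scheme)
    return f"{parts.scheme}://{parts.hostname}:{port}"


class OriginGate:
    """Hypothetical allowlist of the origins involved in one task."""

    def __init__(self, task_urls):
        self.allowed = {origin_of(u) for u in task_urls}

    def permits(self, url: str) -> bool:
        return origin_of(url) in self.allowed


gate = OriginGate(["https://shop.example/search?q=laptop"])
print(gate.permits("https://shop.example:443/cart"))    # True
print(gate.permits("https://tracker.example/collect"))  # False
print(gate.permits("http://shop.example/"))             # False: different scheme
```

Comparing full origins rather than bare hostnames means that switching from HTTPS to HTTP, or hopping to a different subdomain, also counts as leaving the task's sites.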

Alongside that, Google has layered in threat detection tools, user confirmations for sensitive operations, and aggressive red-teaming before new agentic features roll out. Each piece is meant to reinforce the others, creating a defense-in-depth model that treats AI decision-making as a high-risk environment rather than a novelty feature.

Google is also putting money on the table to prove it works. The company has updated its Vulnerability Rewards Program, offering up to $20,000 to any researcher who can bypass these new agentic security layers.

The upgrades arrive as Chrome prepares to ship more agentic capabilities, which means users will soon see Gemini taking more initiative online.

Jay Bonggolto always has a nose for news. He has been writing about consumer tech and apps for as long as he can remember, and he has used a variety of Android phones since falling in love with Jelly Bean. Send him a direct message via X or LinkedIn.
