Examining the differences between iPhone X Face ID and Samsung iris scanning

At the September 2017 Apple event, the iPhone X was revealed. It seems like Apple went all out on its "Anniversary" model, and one of the new features is Face ID.

Unlocking your phone with your face isn't exactly new. Android has had the feature for a while, and Samsung has used a special iris scanner since the Galaxy Note 7. But Apple is doing things very differently, as it is wont to do. Rather than use a pattern to create an unlocking token, Apple is using the shape of your face. And it has some pretty specialized hardware in place to do it.

I haven't used the iPhone X just yet, but this is an area where I have a good bit of experience. Acquiring modulated spatial distortion maps and turning that data into something a piece of software can use as a unique identifier has been around for a while, and products you have in your house right now were built, packaged or quality-checked using it. I've been involved in designing and deploying several systems that use depth image acquisition to sort produce (apples, peaches, plums, etc.) by grade, shape, and size, so I understand how the technology used in Face ID will work.

Let's compare.

Android's facial recognition

Unlocking your phone with your face has been part of Android since version 4.0, Ice Cream Sandwich. This is the least complicated and least secure of the three things we're comparing.

Using the front-facing camera, your Android phone grabs an image of your face, and Google's facial-recognition software processes it to build a set of data based on the image. When you hold the phone to your face to unlock it, a new image is collected, processed and compared to the stored data. If the software can match the two, a token is passed to the system so your phone will unlock.
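
To make the enroll-then-compare idea concrete, here is a minimal sketch in Python. It is not Google's algorithm: the feature vectors, the cosine-similarity comparison, the `try_unlock` name and the 0.95 threshold are all assumptions chosen for illustration.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def try_unlock(enrolled, candidate, threshold=0.95):
    """Hypothetical check: unlock only if the new capture is close to the stored data.

    The threshold is an illustrative assumption, not a value any vendor publishes.
    """
    return cosine_similarity(enrolled, candidate) >= threshold

# Made-up feature vectors standing in for processed face images.
enrolled = [0.2, 0.8, 0.5]
same_person = [0.21, 0.79, 0.52]
stranger = [0.9, 0.1, 0.3]

print(try_unlock(enrolled, same_person))
print(try_unlock(enrolled, stranger))
```

A flat photo defeats this kind of check because the comparison only ever sees a 2D image, so a good enough print produces nearly the same features as the real face.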

Face unlocking came to Android in 2011 with Ice Cream Sandwich, and Samsung has made it much better on its newest phones.

The data isn't sent anywhere and is collected and processed all on the phone itself. It is stored securely and encrypted, and no other process is able to read the raw data. Android face unlocking also doesn't need any special lights or sensors or cameras — it uses the same front-facing camera you use to take selfies with.

Samsung has improved the experience on the Galaxy S8 and Note 8 by starting the scan as soon as the screen is tapped, and the processing is faster and more accurate because of the better camera and CPU. Face unlock on the Galaxy S8 is fast and generally works well once you get a feel for how to hold the phone while you're using it.

The biggest problem with face unlock is that it's not secure. It's not advertised as being secure, even by Google or Samsung. It's a convenience feature that was built to showcase (and refine) Google's facial recognition algorithms, and a printed photo of your face will defeat face unlock.

Thankfully, Samsung also offers an alternative way to recognize your face.

Samsung's iris scanning

Samsung first brought iris scanning to the Galaxy line with the Galaxy Note 7. Having a computer scan your eyeballs to authenticate you is something we've all seen in movies, and it is used for secure entry in real government facilities. Samsung is using the same concept with its iris scanning system, just scaled back so it can work faster and work with the limited resources of a smartphone. It's more than secure enough for your phone, even if it's not 100% foolproof.

Every eye has a different pattern, and your right eye is even different than your left.

Every eye has a unique pattern in the iris. Your left eye even has a different pattern than your right. Iris patterns are actually more distinct than a fingerprint. Because every eye is unique, Samsung is able to use your eyes to identify you and act as your credentials. These credentials can be used for anything a fingerprint or even a passcode could. You hold the phone so the special camera can see your eyes and your phone will unlock.

To do this, Samsung is using specialized hardware on the face of the phone. A diode emits near-infrared light and illuminates your eyes. It's a wavelength of light that humans can't see but it's fairly intense and "bright." Near-infrared light is used for two reasons: your pupils won't contract and you'll have no change in vision, and it illuminates anything with a color pattern better than the wavelengths we can see. If you look closely at your iris you'll see that there are hundreds of different colors in a distinct pattern. Under near infrared, there are thousands of colors and they contrast with each other very well. It's just better for grabbing an image of your iris, because even though you don't see any of this, your phone can and uses it to build a dataset.

Samsung uses near-infrared light and a special camera to collect and process data about your eyes.

Once the iris is illuminated, a specially tuned narrow-focus camera grabs an image. The regular front-facing camera on your Galaxy S8 could register color information under infrared illumination, but it wasn't designed for that job. That's why a second camera is needed.

This image is analyzed and a distinct set of data is created and stored securely on your phone. All the processing, analyzing and storage of the data is done locally, and the data is encrypted so only the iris-recognition process has access to it. This data is used to create a token, and if the iris scanner process provides the right token, the security check passes: those are your eyes, so any software that needs your identity is able to proceed.
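
Samsung hasn't published how its matcher works, so treat the following as a sketch of one common textbook approach rather than what the phone actually does: the iris image is reduced to a string of bits, and two scans match when only a small fraction of those bits differ. The 16-bit codes, the function names and the 0.32 cutoff are illustrative assumptions.

```python
def hamming_fraction(code_a: int, code_b: int, bits: int) -> float:
    """Fraction of the lowest `bits` bit positions where the two codes differ."""
    mask = (1 << bits) - 1
    differing = bin((code_a ^ code_b) & mask).count("1")
    return differing / bits

def iris_matches(enrolled: int, candidate: int, bits: int,
                 max_fraction: float = 0.32) -> bool:
    """Hypothetical check: accept when few enough bits differ.

    max_fraction is an illustrative cutoff, not a Samsung parameter.
    """
    return hamming_fraction(enrolled, candidate, bits) <= max_fraction

BITS = 16                                # real iris codes are far longer
enrolled = 0b1011001110001111
rescan = enrolled ^ 0b11                 # same eye, two noisy bits
other_eye = enrolled ^ ((1 << BITS) - 1) # every bit flipped

print(hamming_fraction(enrolled, rescan, BITS))
print(iris_matches(enrolled, rescan, BITS))
print(iris_matches(enrolled, other_eye, BITS))
```

Allowing a few mismatched bits is what lets a slightly different angle or a bit of glare still pass, and it is also why the cutoff has to be chosen carefully.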

Of course, Samsung also collects some data about your face using the normal front-facing camera. Most likely, the facial data is used to help position your face so the iris scanner has a clear view.

There are some inherent drawbacks. Because using iris scanning to unlock your phone needs to be very fast, not as much data is collected about the pattern in your eyes. Samsung had to find the right balance of security versus convenience and since nobody wants to wait five or 10 seconds for each scan, the iris scanning algorithms can be fooled with a high-resolution photo laser printed in color and a regular contact lens to simulate the curvature of an eye. But, honestly, nobody is going to have a photo of your eye that is clear enough to unlock your Galaxy S8 or Note 8. If they do, you have a much bigger problem on your hands.

Samsung's iris scanning works well as long as your eyes are in the 'sweet spot.'

The bigger issue is accuracy. Enough of your irises needs to be analyzed to pass the software check, and because the camera that grabs the image for recognition has a very narrow focus, there's a "sweet spot" your eyes need to be in. You need to stay in that sweet spot long enough to pass the checks. The system is of no use if it doesn't collect enough data to prevent someone else's eyes from being identified as yours, so this is just how it has to work.
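
The balance between security and convenience can be shown with a small sketch. The distance scores below are invented for illustration and don't come from any real scanner; the point is only that loosening the match threshold trades false rejections of the owner for false acceptances of someone else.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_accept_rate, false_reject_rate) for a distance threshold.

    Scores are distances, so lower means more similar. A scan is accepted
    when its score is at or below the threshold.
    """
    false_accepts = sum(1 for s in impostor_scores if s <= threshold)
    false_rejects = sum(1 for s in genuine_scores if s > threshold)
    return (false_accepts / len(impostor_scores),
            false_rejects / len(genuine_scores))

# Invented example scores: the owner's scans vs. other people's scans.
genuine = [0.10, 0.15, 0.22, 0.35]
impostor = [0.30, 0.45, 0.48, 0.50]

print(error_rates(genuine, impostor, threshold=0.25))  # strict setting
print(error_rates(genuine, impostor, threshold=0.40))  # lenient setting
```

With these made-up numbers the strict setting rejects one of the owner's four scans and lets no one else in, while the lenient setting accepts every owner scan but also lets one impostor through.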

It's a good system as far as biometric security goes, and for many it's great. Only your eyes will work (ignoring the off chance some spy agency has photos of your eyeballs) and it's fairly fast. You just have to learn to use it correctly, and yes, that typically means spending a while holding your phone unnaturally high with your eyes wide open.

Apple's Face ID

Apple has entered new territory when it comes to biometric security on a phone. It wasn't so long ago that you needed specialized lighting, multiple cameras with special lenses and a very expensive image processing computer board for each of them to collect enough shape data for unique recognition. Now it's done with some components on the face of the iPhone X, Apple's new A11 chipset, and a separate system to crunch the numbers.

Face ID projects an intense infrared light to illuminate your face. Just like the light used by Samsung's iris scanner, it's a wavelength a human can't see but it's very "bright." It's like a flood light — an equal amount of light across a wide area that washes your face and will fall off quickly at the edges of your head.

Apple is trying something very different with Face ID and how it gathers data about your face.

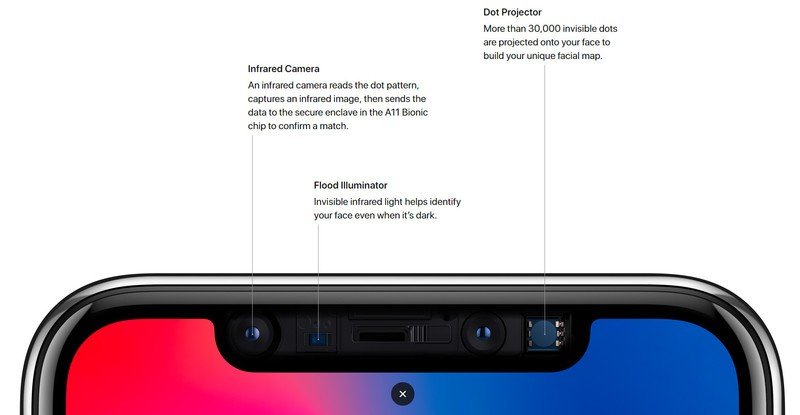

While your face is illuminated, a matrix of infrared LED lasers is projected over your face. These LEDs use a wavelength of light that contrasts with the light used for illumination and thousands of individual points of light cover your face. As you move (and we can never be perfectly still) the points of light reflect the changes.

With your face illuminated by the infrared lamp and a light matrix projected over it, a special camera collects image data. Every point of light is marked, and as you move and the points change, those changes are also logged. This is known as depth image acquisition using modulated pattern projection. It's a great way to collect data that shows shape, edges, and depth while an object is in motion under any lighting conditions. A ton of data can be collected and used to show a distinct shape that can be recreated in 3D.
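
The core geometric idea behind projected-pattern depth sensing can be sketched with simple triangulation. This is a simplified pinhole model, not Apple's pipeline: it assumes the projector and camera sit side by side a known distance apart, and that we can measure how far each dot appears shifted in the image. The focal length, baseline and shift values are made-up numbers.

```python
def depth_from_shift(focal_length_px: float, baseline_m: float,
                     shift_px: float) -> float:
    """Estimate distance to a projected dot using triangulation.

    depth = focal_length * baseline / shift. Nearer surfaces shift the dot
    more, so a larger shift means a smaller depth.
    """
    if shift_px <= 0:
        raise ValueError("shift must be positive for a finite depth")
    return focal_length_px * baseline_m / shift_px

# Illustrative values: 600 px focal length, 2 cm between projector and camera.
FOCAL_PX = 600.0
BASELINE_M = 0.02

# Dots landing on the nose shift more than dots landing on the ears.
for label, shift in [("nose tip", 42.0), ("cheek", 40.0), ("ear", 36.0)]:
    depth = depth_from_shift(FOCAL_PX, BASELINE_M, shift)
    print(f"{label}: {depth:.3f} m")
```

Repeat that calculation for thousands of dots and you get a depth map, which is the raw material for the 3D shape described above.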

The data is then passed to what Apple is calling the A11 Bionic Neural Engine. It's a separate subsystem with its own processor(s) that analyzes the data in real time as it is being collected. The data is used to recreate your face as a digital 3D mask. As your face moves, the mask also moves. It's an almost perfect mimic, and Apple does an excellent job showing it off with its new iMessage animated emojis in iOS 11.

Face ID uses some of the same technology as Android phones with Tango.

For authentication purposes, the data set is also used to calculate a unique identifier. Just like Samsung's iris scanner, Face ID securely stores this data and can compare it against what the special camera is seeing while Face ID is actively running. If the data set matches what the camera can see, the security check is passed and a token that verifies that "you are really you" is given to whatever process is asking for it.

While Apple is also making a few concessions to ensure Face ID is fast and easy, there are some clear advantages from a user perspective. Face ID is actually more secure because you're moving (more data is being analyzed) and there is no "sweet spot" as all of your face is being used and the camera uses a wider field of view. The matrix projected on your face contrasts well against whatever is in the background because a sense of depth is used to isolate your face's shape.

As a bonus, the shape data of your face in real time can be used for other purposes using what Apple calls the TrueDepth Camera system. We saw an example of this with the new portrait mode for selfies, the animated emojis, and Snapchat masks. Apple has built the Bionic Neural Engine in a way that it can share simple shape data with third party software without exposing the data it uses to build a secure identifying token.

Which is better?

We can't say anything is really better until we've tried it.

Better is subjective, especially since we've not yet used Face ID or the iPhone X in the real world. For authentication purposes, the important thing is that the process is accurate and fast. Samsung's iris scanner can be both as long as you point the phone so it can find the data it needs, but on paper, Face ID will be easier to use because it doesn't need to lock on any particular spot to work. And for many of us neither is better and we would prefer a fingerprint sensor, which the Galaxy S8 and Note 8 both still have.

Whichever you prefer, there's little doubt that Apple has outclassed the competition in this regard. Extensive hardware to collect data about your face's shape and features, combined with its own processing system to analyze it all, is more akin to Tango than any previous facial recognition we've seen on a phone. I'm excited to see this level of technology come to mobile devices, and can't wait to see how future products build on what we see from Apple.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.