Google Translate meets Gemini in customized language practice update

The company's bringing its multimodal AI model into the Translate app to help Android users with real-time translations and more.

Get the latest news from Android Central, your trusted companion in the world of Android

What you need to know

- Google announced a new Gemini-backed update for the Translate app on mobile, which brings a customized language practice feature to users.

- Users can tap "practice" to start learning a new language, then tell the AI their current level and their goals for the daily sessions.

- The Translate app is also getting upgraded real-time translation, which can understand what's being said in two languages and switch between them as the conversation goes back and forth.

- The customized language practice is rolling out this week on Android and iOS, while the real-time translation update should begin appearing today (Aug 27).

Two features are rolling out in Google Translate this week, courtesy of Gemini's multimodal capabilities.

This morning (Aug 27), Google showcased the upcoming AI-powered features headed for Translate, which aim to help users with customized language learning and deliver better real-time translation. Beginning with the former, Google states Translate now offers a new "language practice" feature, which has the app create "tailored listening and speaking" practice sessions.

When using Translate, the post states users need only tap "practice" to get going. The app first asks what you believe your current "level" is in the chosen language, with options like Basic, Intermediate, and Advanced. Google says the practice sessions Translate delivers are generated "on-the-fly" and will "adapt" as you improve.

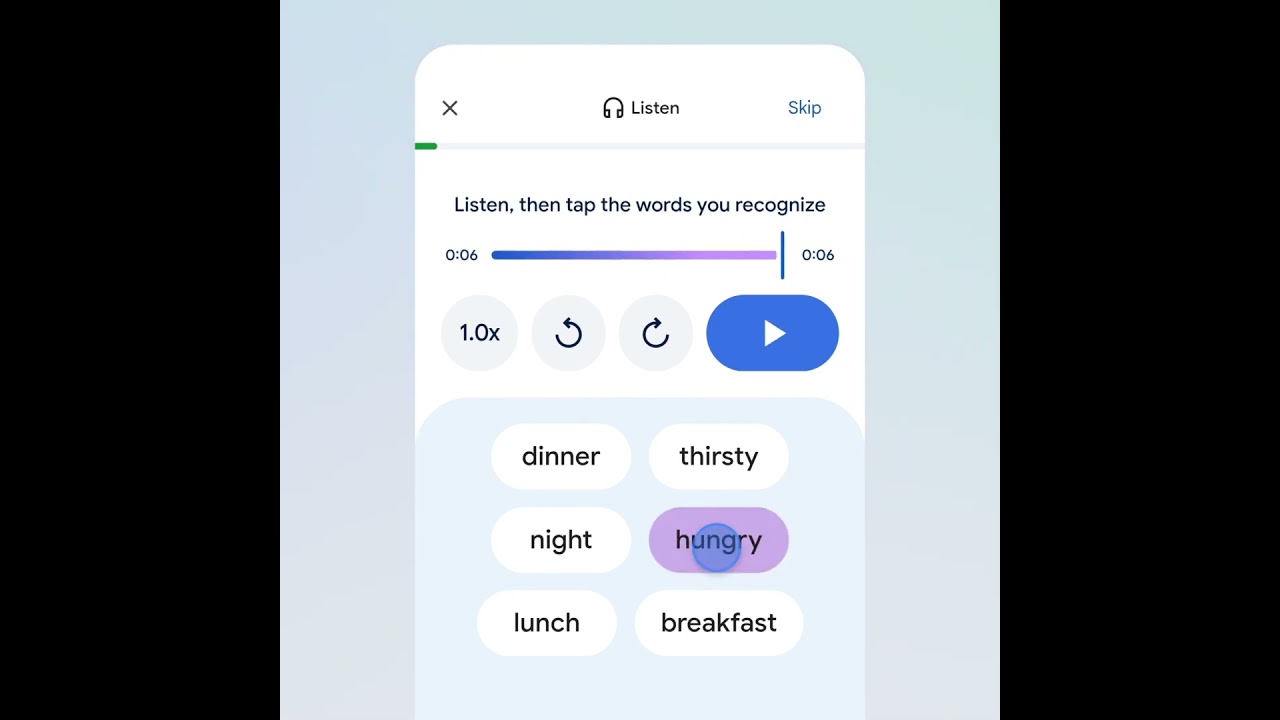

Before the practice session starts, users are asked about their goal for learning the language. Google states each exercise takes one of two forms: listening or speaking. In a listening exercise, you tap the words you recognize from a statement the AI reads aloud. Speaking is pretty straightforward: you say aloud what's in front of you, and hints are there, too, just in case.

Google states this customized learning is rolling out in beta for the Translate app on Android and iOS this week.

Google Translate meets Gemini

The Translate app's real-time translations are being upgraded in this update, and Google credits its latest AI models for the improvement. Once the feature rolls out this week, users can tap "live translate," select the appropriate languages, and begin speaking. After you're done, the app displays the translation and speaks it aloud for you.

Moreover, the AI models help the Translate app "intelligently" switch between the languages spoken by two parties (e.g., English and Spanish).

These enhanced live translate capabilities should begin appearing today (Aug 27) for users in the U.S., Mexico, and India. Alongside the rollout, Google highlights its work to improve the quality and speed of the app's translations.

Gemini's intelligence continues to spread across Google's app ecosystem, and the AI model received a major boost yesterday (Aug 26) for photo editing. Drawing on Google DeepMind's latest software, Gemini's built-in photo editor makes changes based on your prompts. Google states the editor is designed to keep people and subjects looking like themselves when you change their outfits or backgrounds, or blend images.

Nickolas is always excited about tech and getting his hands on it. Writing for him can vary from delivering the latest tech story to scribbling in his journal. When Nickolas isn't hitting a story, he's often grinding away at a game or chilling with a book in his hand.
