Google is bringing its bandwidth-saving RAISR image processing to your Android phone

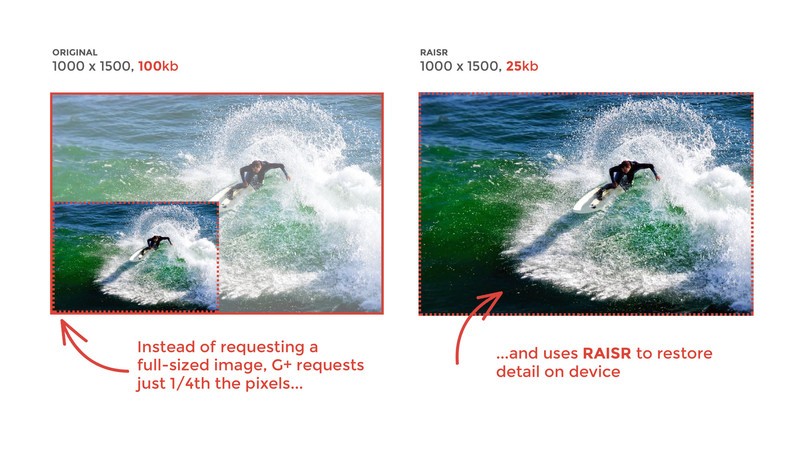

Google is constantly searching for ways to make it easier for us to share and use technology. Back in November 2016, they showcased RAISR, a new method for image processing that takes a low-res upload of a photo and uses machine learning technology to fill in the gaps between the pixels, so that the end-user gets the full resolution of the photo while using a fraction of the bandwidth and download time.

Google has been testing and refining this technology on its own social media platform, Google+. There, it takes a low-res version of a photo, processes it through RAISR, and delivers it to people on a subset of Android devices at near full-resolution quality while keeping file sizes low. This cuts bandwidth use and load times while maintaining the integrity of the original images.

After a soft launch on Google+, it appears Google may be getting ready to unleash this image processing beast across more of its services and devices. In a recent blog post, Google+ Product Manager John Nack states that RAISR is now processing over a billion photos a week, an astonishing number when you consider its limited implementation. But of note is what Nack says in closing:

"In the coming weeks we plan to roll this technology out more broadly — and we're excited to see what further time and data savings we can offer."

The phrasing here is deliciously vague: it's unclear whether RAISR will simply roll out for use on a wider subset of Android devices while staying self-contained within Google+, or spread across the wide spectrum of Google services and products.

Whatever happens, it's great to see Google continually developing and implementing these sorts of processes that allow us to share our favorite photos more efficiently. Once this technology is ubiquitous, we'll all reap the benefits of saving more data and time.

Marc Lagace was an Apps and Games Editor at Android Central between 2016 and 2020. You can reach out to him on Twitter @spacelagace.