Google Lens: Everything you need to know

Get the latest news from Android Central, your trusted companion in the world of Android

Google Lens was one of the major announcements of the Google I/O 2017 keynote, and an important part of Google's Pixel 2 phone plans. For Google, a company with a long history in visual search, Lens is the latest step in an ongoing journey around computer vision. This is an endeavor which can be traced back to Google Image Search years ago, and which is a close relative of the AI powering Google Photos' object and scene recognition.

For the moment, Google is only talking about a "preview" of Lens shipping on Pixel 2 phones. But as a part of Google Assistant, Google Lens has the potential to reach every Android phone or tablet on Marshmallow and up, letting these devices recognize objects, landmarks and other details visually (with a little help from your location data) and conjure up actionable information about them. For example, you might be able to identify a certain flower visually, then bring up info on it from Google's Knowledge Graph. Or it could scan a restaurant in the real world and bring up reviews and photos from Google Maps. Or it could identify a phone number on a flyer, or an SSID and password on the back of a Wi-Fi router.

Whether it's through a camera interface in Google Assistant or after the fact through Google Photos, the strength of Lens, if it works as advertised, will lie in accurate identification and the ability to provide useful info based on it. It's absolutely natural, then, that Lens should come baked into the camera app (and Photos itself) on the new Pixel 2 and Pixel 2 XL smartphones.

Big, BIG data

Like all the best Google solutions, Lens is a product of AI and data.

Google Lens is rooted in big data, which makes it ideally suited to Google, with its vast reserves of visual information and growing cloud AI infrastructure. Doing this instantly on a smartphone is a step beyond running similar recognition patterns on an uploaded image via Google Image Search, but the principles are the same, and you can easily draw a straight line from Image Search, through the now-defunct Google Goggles, to Google Lens.

Back in 2011, Google Goggles was futuristic and, in the right setting, genuinely impressive. Beyond being faster, Google Lens goes a step further: it doesn't just identify what it's looking at, but understands it and connects it to other things that Google knows about. It's easy to see how this might be extended over time, tying visible objects in photos to the information in your Google account.

This same intelligence lies at the heart of Google Clips, the new AI-equipped camera that knows when to take a photo based on composition and on what it's looking at, not unlike a human photographer. That all starts with understanding what you're looking at.

The potential for Google Lens is only going to grow as Google's capabilities in AI and big data increase.

At a more advanced level, Google's VPS (visual positioning system) builds on the foundations of Google Lens on Tango devices to pinpoint specific objects in the device's field of vision, like items on a store shelf. As mainstream phone cameras improve and ARCore becomes more widely adopted, there's every chance VPS could eventually become a standard Lens feature, assuming your device hits a certain baseline for camera hardware.

What can Google Lens do on the Pixel 2?

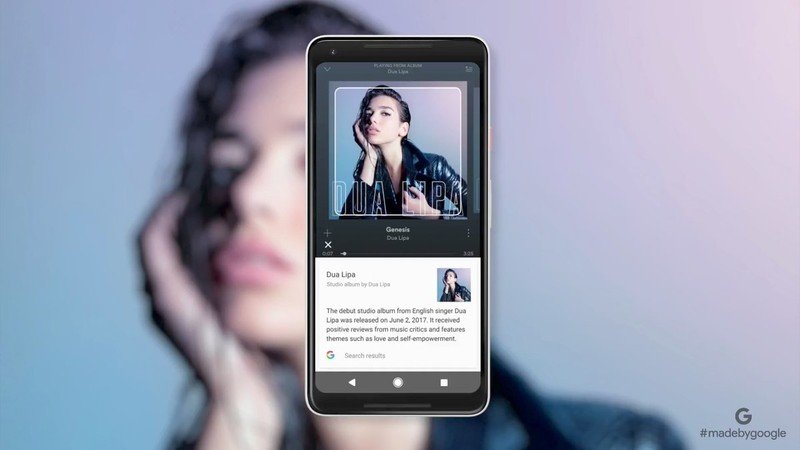

Google is calling the version of Lens on Pixel 2 phones a "preview" for the time being, and it's obvious the company has ambitions for Lens far beyond its current implementation on these handsets. At the October 4, 2017 presentation, Google demonstrated Lens identifying albums, movies and books based on their cover art, and pulling email addresses from a flyer advertisement.

Those are relatively simple tasks, but again, Google surely wants to start small, and avoid the pitfalls experienced by Samsung's Bixby service in its early days.

How is Google Lens different to Bixby Vision?

On the surface, the two products might appear very similar, at least to begin with.

However, the potential for Google Lens is only going to grow as Google's capabilities in AI and computer vision become stronger. And the contrast with one of Samsung's most publicized features is pretty stark. The Korean firm is still a relative newcomer in AI, and that's reflected in the current weakness of Bixby Vision on the Galaxy S8 and Note 8.

Right now, Bixby can help you identify wine (badly) through Vivino; flowers (sometimes) and animals (with varying degrees of success) through Pinterest; and products through Amazon. Samsung doesn't have its own mountain of data to fall back on, so it has to rely on specific partnerships for various types of objects. (The service routinely tells you it's "still learning," as a caveat when you first set it up.)

What's more, while Samsung can (and apparently does plan to) bring Bixby to older phones via software updates, Google could conceivably flip the switch through Assistant and open the floodgates to everything running Android 6.0 and up. Both services are going to require some more work before that happens, though.

Nevertheless, anyone who's used Bixby Vision on a Galaxy phone can attest that it just doesn't work very well, and Google Lens seems like a much more elegant implementation. We don't yet know how well Lens will work in the real world, but if it's anywhere near as competent as Google Photos' image identification skills, it'll be something worth looking forward to.

We'll have more to say on Google Lens when we test it in more detail on the Pixel 2 phones.

Alex was with Android Central for over a decade, producing written and video content for the site, and served as global Executive Editor from 2016 to 2022.