I don't care whether PS5 or Xbox Series X has better graphics, and neither should you

Graphics — specifically as they pertain to resolution — have been a hot topic since the Xbox One and PS4 launched, and will continue to be a hot topic when the PS5 and Xbox Series X launch, much to my chagrin. To put it bluntly, I couldn't care less which is capable of higher resolution graphics. Why? Because no matter what, games on both consoles will look absolutely outstanding. Don't kid yourself otherwise.

I should clarify that I'm not referring to graphics in the broad sense of everything it takes to create a game's visuals. The term "graphics" has unfortunately almost become synonymous with resolution. Anti-aliasing is important. Ray tracing is important. Shadow mapping is important. That's where you'll see real differences. Resolution, though, not so much. And when it comes to those other elements, I have every faith the PS5 and Xbox Series X will be able to deliver if the developers optimize correctly.

As long as I'm not sacrificing major components of a game and developers aren't feeling hindered by one console over another, I'm cool. I'm not talking about "this console can hit 60FPS and this one can't" or "my game runs at checkerboarded 4K on this console but native on this one." I'm talking about drastic differences that completely change how a game functions. Notably, when Middle-earth: Shadow of Mordor came out across both Xbox 360/PS3 and Xbox One/PS4, the Nemesis system had to be dumbed down to its bare bones on the older-generation hardware.

And before any of you start saying, "the damage control has begun" based on rumors regarding either console, here's my history of owning video game consoles between Xbox and PlayStation.

I received an original Xbox in 2002 for Christmas; got an Xbox 360 for Christmas in 2006; upgraded to an Xbox 360 S shortly after it came out; bought an Xbox One with Kinect in 2014; and upgraded to an Xbox One X in 2018. I didn't get my first PlayStation until I bought a PlayStation 4 Slim in 2018. I had used PlayStation consoles prior, all the way back to the PS2, at friends' houses, but Xbox has been my main platform nearly my entire life. I grew up on it.

This isn't me "being a Pony and shilling for Sony" because the PS5 is rumored to be less powerful.

I'm privileged enough now to own both — and cover both for work — and after spending so much time with them, I can honestly say that one isn't inherently better than the other just because of its power. The type of games that they offer, the services they offer, the ecosystems — those are what matter.

I'd also like to remind you that we don't yet know the final specs we're dealing with. I don't even consider teraflops the be-all and end-all of a console. Regardless of what the specs end up as, both will be immensely powerful and showcase phenomenal-looking games. There comes a point when you get diminishing returns on resolution, anyway.

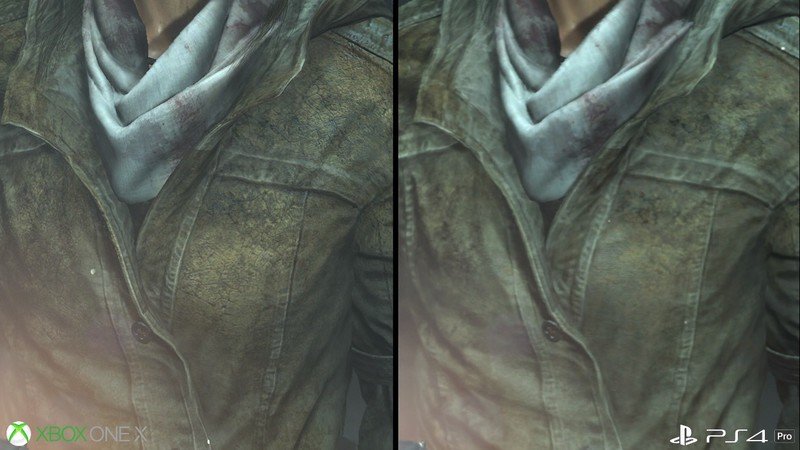

For starters, let's take a look at Digital Foundry's comparison of Rise of the Tomb Raider in 2017.

The Xbox One X screenshots look marginally better, but the difference isn't drastic. It definitely doesn't affect my enjoyment of playing the game.

Now yes, I'm cherry-picking an example. There are thousands of games you could compare on Xbox One X and PS4 Pro, and some may look better on one than the other. Part of the job is also on the developer to optimize it. My main point is this: those both look pretty damn good and even when taking into account YouTube video compression, there really isn't a huge difference between the two. Not one that most people would notice while playing, anyway. It's easy to nitpick when you have a still screenshot to stare at and analyze. And those minor differences in detail will only get smaller and smaller as technology progresses next-gen, whether or not one console is 12TFlops or 9TFlops.

Good luck telling the difference between a game running at 8K resolution and one running at 4K, because you probably can't. Does 8K technically have four times as many pixels as 4K? Yes. Does it translate to visual details that will be immediately apparent and perceptible? Probably not.
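For anyone who wants to check the math on that "four times" figure, here's a quick sketch. It assumes the standard 16:9 consumer dimensions for each label (1600x900, 1920x1080, 3840x2160, 7680x4320); the names `RESOLUTIONS`, `pixel_count`, and `pixel_ratio` are made up for this example.

```python
# Assumed 16:9 consumer resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "900p": (1600, 900),
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixel_count(name):
    """Total pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(higher, lower):
    """How many times more pixels `higher` has than `lower`."""
    return pixel_count(higher) / pixel_count(lower)


if __name__ == "__main__":
    for higher, lower in [("8K", "4K"), ("4K", "1080p"), ("1080p", "900p")]:
        print(f"{higher} has {pixel_ratio(higher, lower):.2f}x the pixels of {lower}")
```

The raw pixel count quadruples going from 4K to 8K, just as it does from 1080p to 4K. Whether your eyes register that at a normal viewing distance is a separate question, and the numbers alone can't answer it.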

Let's not forget Spider-Man's "puddlegate" controversy that Insomniac took a lot of flak for. Were the visuals downgraded? No. But a puddle was taken out and lighting was changed, making the scene look "worse" to a lot of people.

Insomniac even made light of the situation by adding puddle stickers to Spider-Man's in-game photo mode. Because when people are ridiculous, you need to call them out. It's a reference I hope the studio somehow carries over into Spider-Man's inevitable sequel.

When I start playing a game, I honestly can't even tell you if I'm playing in 1080p or 4K unless I know what the game runs at off the top of my head. I'd wager most of the general player base for either console is in the same boat. As long as something isn't incredibly blurry, I'll be having fun. 900p, 1080p, 4K, whatever.

To those who can tell the difference, are you really going to argue that playing a game in 1080p instead of 4K completely ruins the experience? Or are you just trying to get into fights over your favorite pieces of plastic?

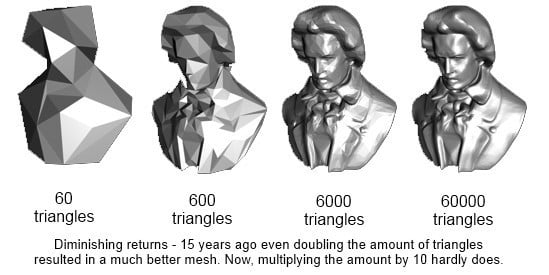

On the subject of diminishing returns, the picture below has popped up time and time again over the past several years when discussing polygon counts.

After one Reddit user went to "debunk" the image six years ago, they were met with this response from another user who claims to have been an artist at Guerrilla Games at the time:

So anyways - about the actual issue at hand; there really is a diminishing returns issue in games. Every time you double the amount of polygons, the subject will only look marginally better than the previous generation did. The difference between PS1 and PS2 was enormous. The difference between PS2 and PS3 was smaller, although still very significant. The difference between PS3 and PS4 is clearly noticeable, but it's not as big a leap as previous generations were. Future generations will no doubt offer smaller changes in graphical fidelity, and put more focus on added features.

There are still great improvements to be made in graphics technology. We're moving away from static pre-baked lighting and that means we want to simulate light bouncing around in real time. That's ridiculously demanding on the hardware. And you wouldn't see the difference in a screenshot, but you will see it when objects and lights move around.

Game graphics can still get loads better, but the improvements won't come in the form of higher poly counts. At least not by much. Look forward to stuff like realtime global illumination, particles, dynamics, fluids, cloth and hair, etc. It's not necessarily stuff that will make screenshots look better, but it will definitely make game worlds feel more alive.

My biggest takeaway from playing on a PS4 Slim and an original Xbox One before moving to each console's respective premium offering is that resolution wasn't a big factor when it came to my enjoyment. What mattered, and what I immediately noticed, were performance differences. How consistent the frame rate was. What loading times were like.

I only started to notice graphical differences between newer and older tech when I started playing around in Assassin's Creed Odyssey's photo mode. Because I'm a photo mode snob, I needed the perfect shot, which meant I'd always over-analyze any screenshots I'd take.

It's not like we'll suddenly lose anti-aliasing, ambient occlusion, anisotropic filtering and all the other good stuff that begins with the letter A. Shadow mapping, draw distance, and volumetric lighting will only get better and better on next-gen systems. However the PS5 or Xbox Series X implement ray tracing, I guarantee it will look stunning on both. Let's not act like either will deliver potato quality visuals.

And while internet mobs squabble over 8K vs 4K and which console has the most teraflops, the Nintendo Switch will be off in the corner swimming in piles of money, despite having by far the worst specs on paper. Graphics certainly play a part, but they aren't everything, folks.

You know what actually interests me? How the CPUs and SSDs can be utilized to craft bigger and better worlds that load seamlessly between areas. How they can run more complex systems within a game. How game worlds can react to your actions. Just imagine the enemy AI you could encounter.

That's what makes me excited about the PS5 and Xbox Series X. Not resolution. Regardless of the nuance there is to the argument and everything that comprises video game graphics and graphical fidelity, this is a hill I'm willing to die on.

Also, we should all continue playing more indie games next generation. We don't always need hyper-realistic 4K graphics where I can see every hair follicle on someone's face. Stylized graphics are important, too.

Sony's most powerful console... for now

Not everyone will be able to make the jump to next-gen when it releases. If you're one of those people, it's still worth it to upgrade to a PlayStation 4 Pro. It offers some amazing games at steadier frame rates than its PS4 Slim counterpart.

Jennifer Locke has been playing video games nearly her entire life. You can find her posting pictures of her dog and obsessing over PlayStation and Xbox, Star Wars, and other geeky things.