Does a digital personal assistant have ethical responsibilities?

Get the latest news from Android Central, your trusted companion in the world of Android

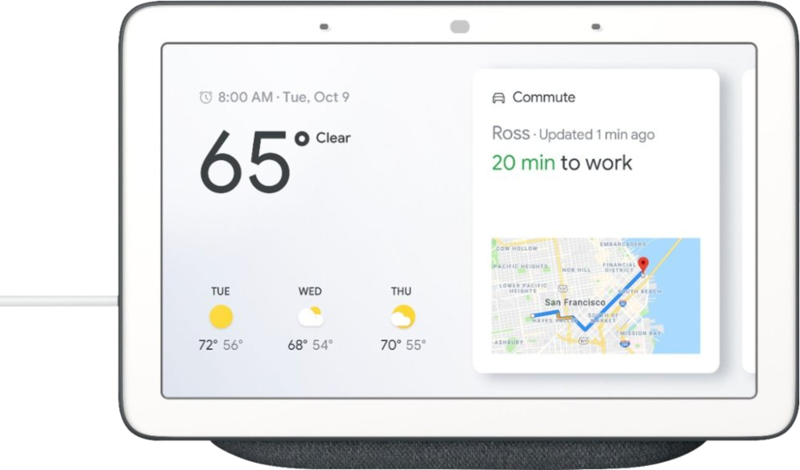

A friend of mine finally joined the ranks and bought a Google Home, largely because they were on sale, but also because she's seen my wife and me use ours on occasion and her curiosity finally got the best of her. In any case, she now has a little robotic AI on her coffee table that can tell her the weather or her schedule for the day, play music on demand, and turn on the porch light by voice command, and she's having fun with it. I assume everyone who owns a Google Home or Amazon Echo went through a similar honeymoon phase before it became routine.

Should we trust an AI to make the right call to the right people?

She also knows I'm pretty savvy when it comes to how Google "stuff" works (her words) and wanted to know if it could call the cops because it "thought" (this time the quotes are from me) a crime was being committed or a dangerous situation was unfolding. I told her it couldn't, and that unless it recognized a hotword it did not process or upload anything it heard, though on principle I would still be careful about asking it stupid questions that might get you in trouble. She seemed ... disappointed.

Why on earth would you want it to be able to report anything suspicious, I asked. Don't you value your privacy more than that? She quickly responded with a "yes, but" and explained that she lives alone and would be perfectly fine with a device that could assess a potentially dangerous situation and call some sort of dispatch. We discussed the pros and cons and listened to each other's arguments (it eventually came around to child safety, as a lot of things do), and I admit her rationale was solid, even if I disagree.

I do not want a little gadget that sits on a table deciding whether it needs to call 911. Period, full stop. I do not trust that it would be right often enough, nor do I want that decision taken away from me, even though I would not make the right call every time myself. I consider myself fairly intelligent and able to make a quick judgment call, even knowing that call may sometimes be wrong. I also think an algorithm could be developed that would recognize the specifics and make the right call most of the time, too. Nevertheless, I still don't want technology that overrides my little bit of privacy in my own home, regardless of the circumstances.

Her arguments, which I will admit once again are sound, touched on unsavory topics like an abusive partner or parent and the idea of a home invasion. The point where I had to pause was when she asked whether I would want that feature for my wife when she was home alone, or for my daughters. I try to be open-minded in all things, but I am still a husband and father and can't help being a bit protective, so I had to admit that I wouldn't hate it as much then.

I value privacy over all else, but I have to pause when it comes to my family members.

I'm not torn, though. I don't think a digital personal assistant should have any ability to listen in other than when a hotword is heard and processed. I understand that someone in trouble may not be able to ask Google or Alexa to call for help, but I still think not having that ability is the lesser evil. But as technology becomes more and more personal, these are the conversations that need to happen. Lawmakers and the companies that make these devices need to hash it out, but we also need to discuss how much any sort of smart electronics should intervene in our lives.

I'm OK with the current state of things and hope the answer to that question is always "none." I'm curious to hear what you think. Should a Google Home be able to call the police and report abuse? What about other situations, like discussions about violence? A bigger question may be whether one should be able to check for and report someone who is wanted, since it knows our identities. I expect plenty of knee-jerk reactions to these questions (I certainly had my own), but hopefully we can have a serious discussion, too.

Hit the comments and tell me what you think.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.