Apple should ditch Siri for Gemini and Google Cloud: here's why

Gemini can be the chatbot, leaving Apple to do what it does best

It’s been a year since Apple unveiled Apple Intelligence, its long-awaited foray into artificial intelligence on phones. The company promised many features, but a year on, many of them have been delayed by up to another year.

Over the same period, Google has continuously developed and released new Gemini features, as well as unique features for individual phone makers through Google Cloud. I like how practical Apple’s approach to AI is, but the best Android phones offer more AI tricks than iOS 26.

Rumors suggest that Apple could add Google Gemini to the iPhone alongside ChatGPT, but could this lead to a partnership like Google’s current deal to be the default search engine in Safari? Given the challenges so far, should Apple ditch Siri entirely in favor of Gemini?

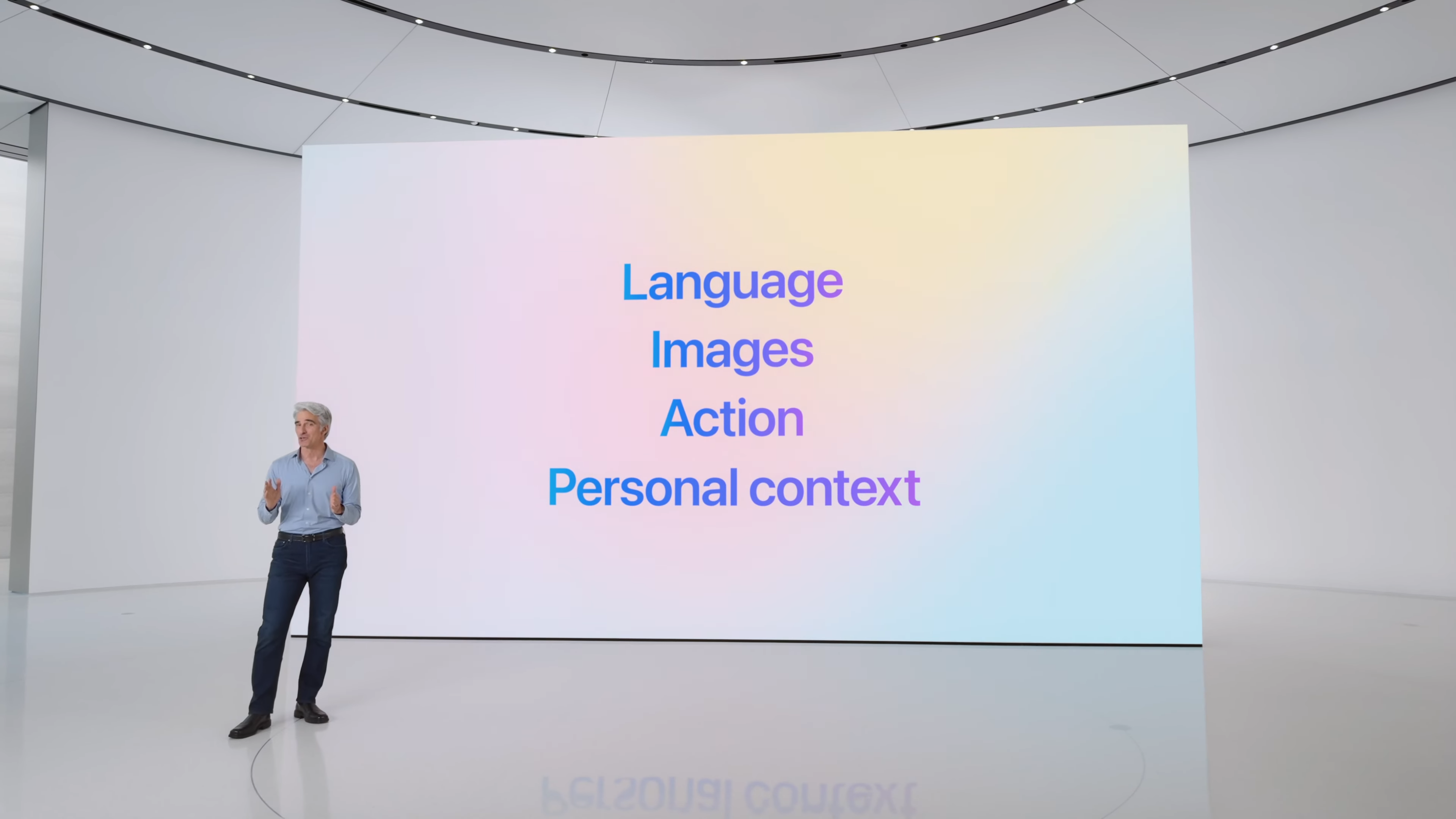

Apple has a strong vision for Apple Intelligence

This may seem like a contradiction given the challenges so far, but I like the direction that Apple Intelligence is headed. Apple chose to focus on building an AI personal assistant that solves everyday problems, and while the idea is sound, the execution has proven challenging.

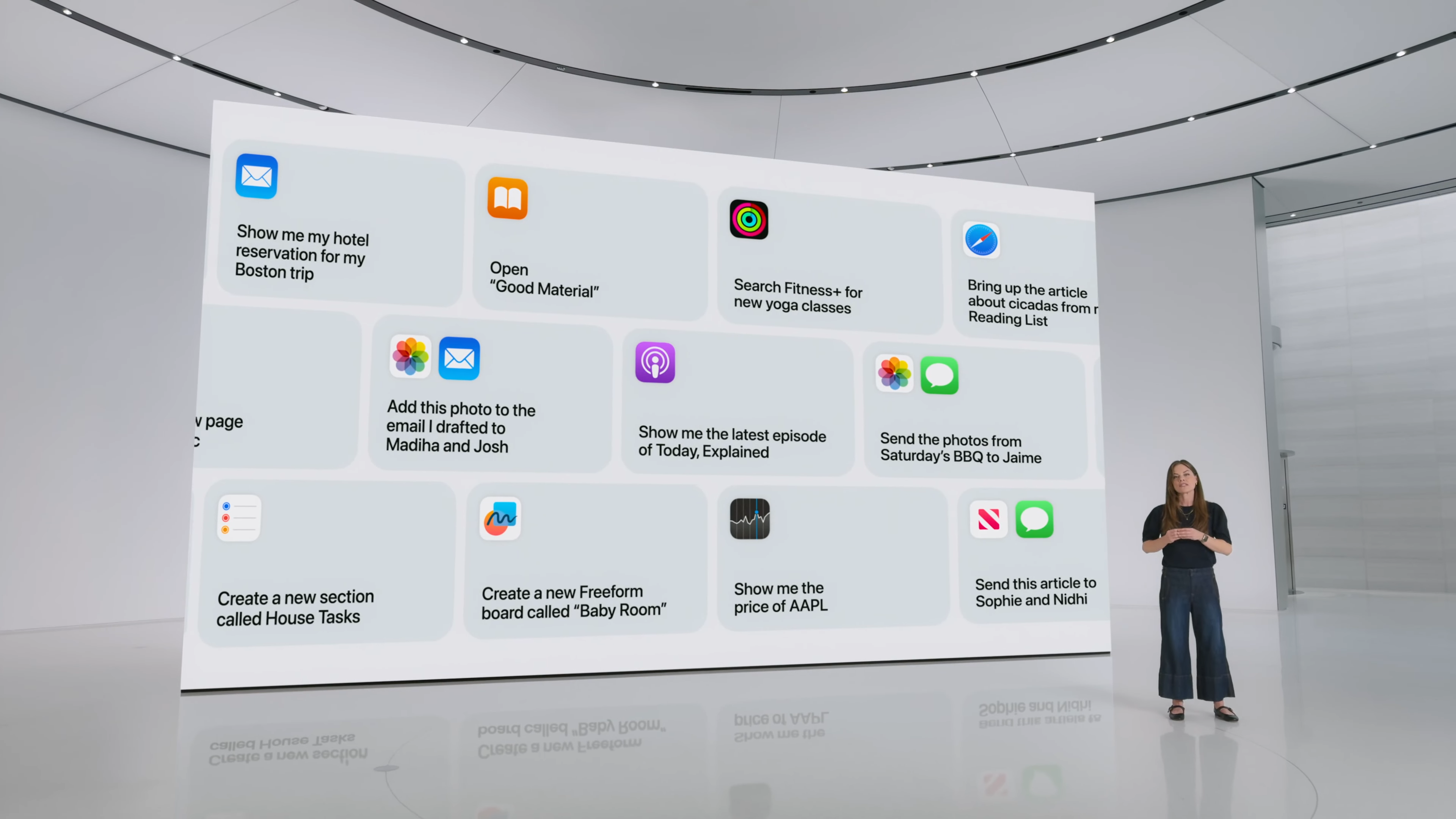

Many of the features are deeply integrated into iOS and solve genuine problems that many users face. It’s the antithesis of most AI applications so far, but it’s the direction in which the industry is heading. Yet many of the features still hadn’t launched by the WWDC 2025 keynote, where Apple announced iOS 26 with far fewer mentions of AI than the year before.

Apple execs Craig Federighi and Greg Joswiak spoke with Joanna Stern from The Wall Street Journal about the challenges faced so far in delivering Siri, the expectations around Apple and AI, and Apple Intelligence as a whole.

This interview is particularly interesting, as it shows that Apple is quite contrite about the state of Siri. Apple has shipped roughly half of the new Siri features it announced last year, and it made the rare misstep of running a commercial for new Siri features before they were ready. Most interesting, however, is that the issues with Siri have overshadowed the broader Apple Intelligence features that have shipped.


One such feature is the partnership with OpenAI and ChatGPT. It allows Siri to hand off requests that fall outside its knowledge base to ChatGPT, which can draw on more up-to-date information. It’s not perfect, but it does show that Apple is open to letting a third party handle the chatbot part of the AI equation.

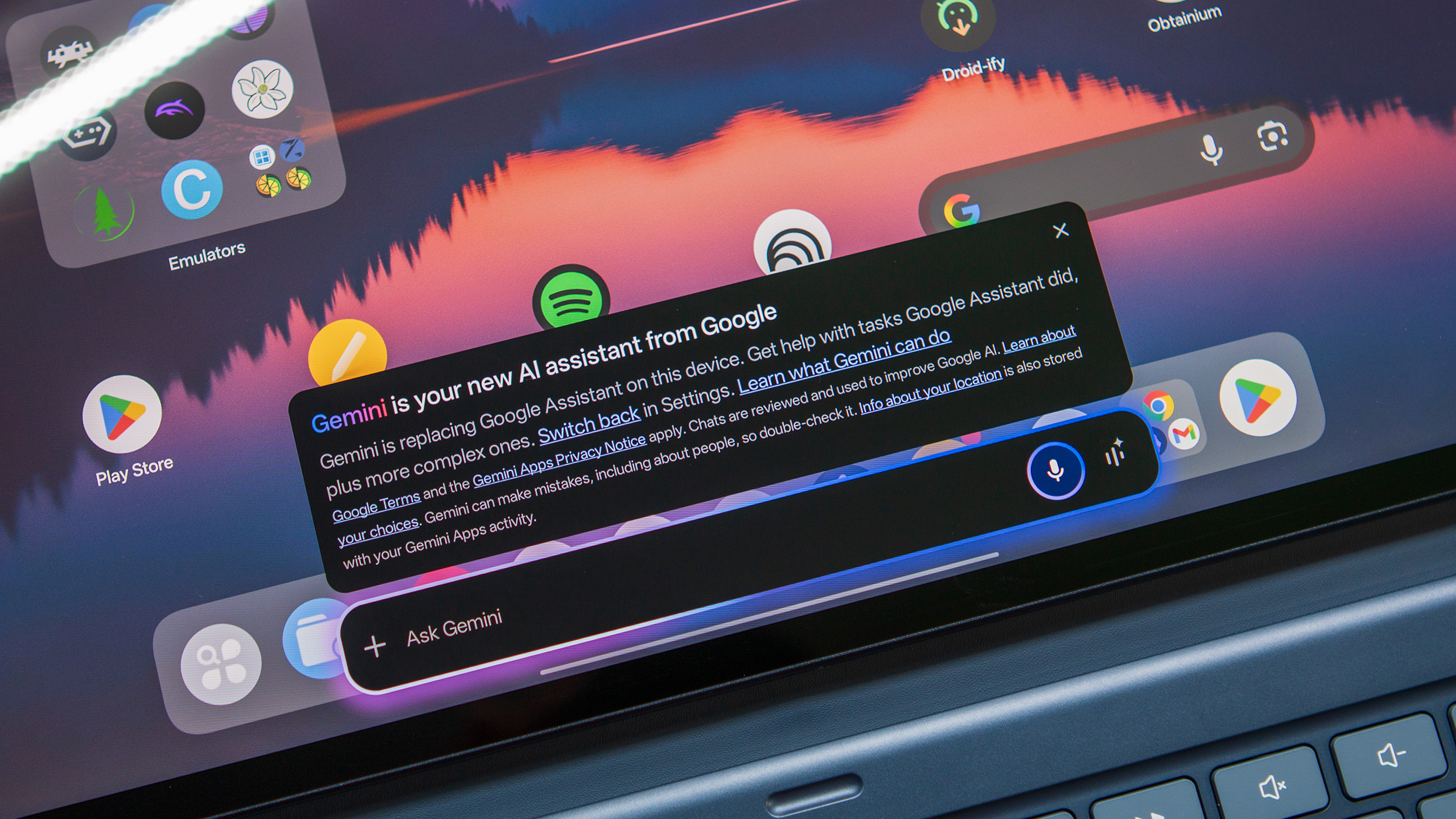

Gemini has incredible AI features across form factors

Google has developed a comprehensive suite of AI features for all Android phones, as well as the iPhone. The iPhone experience is limited, as it relies on the Gemini app and only includes core Gemini features, such as Gemini Live with screen sharing. It lacks OS-level features like Circle to Search, Google Lens, and several AI planners.

Many of Gemini’s early features focused on generative use cases, but this year Google has shown off Gemini Live’s ability to act as a visual assistant, along with the new Veo 3 model, which can create entire videos with sound.

Apple promises features similar to Gemini Live through Visual Intelligence, but it’s unclear when these will launch. Apple’s answer to Circle to Search arrives in iOS 26, letting users capture what’s on screen and then search for specific information or perform tasks across the apps on their phone, rather than just running a web search.

Yet Visual Intelligence and Siri do not match Gemini’s capabilities. While Apple has focused on, and struggled with, building a helpful personal assistant, Google has developed a suite of generative features and has since caught up on productivity features as well.

Then there’s Project Astra and Google’s foray into AR glasses. Apple is expected to eventually launch a pair of multimodal glasses, but Google already has a pair in the works that is set to launch next year. There’s also the Project Moohan headset, developed with Samsung and due to launch this year as a challenger to the Apple Vision Pro.

Google has also proven it can build custom experiences

If Gemini were all Google had to offer, I think there would be a much stronger case for Apple to develop its own suite of AI features. Yet over the past year, Google has proven that it knows exactly how to build features that bring partners’ creative visions to life. The result is that every Android phone has a taste of Gemini, and many now also have unique AI applications powered by Google Cloud.

Take the suite of Moto AI features on the Razr 60 Ultra, which includes Catch Me Up to summarize personal notifications, Remember This to capture a screen and annotate it for future reference, and Pay Attention to start a voice recording complete with transcription.

It’s not just Motorola, as Google has partnered with other phone makers to help enable custom experiences too. Samsung has been a test bed for new Google AI features such as Circle to Search, and Gemini has replaced Bixby as the default personal assistant on its phones.

OnePlus and Nothing have built AI spaces to store, percolate, and iterate on ideas. Realme’s new AI Assistant can automatically create lists and calendar entries by reading what’s on your screen. Then there’s Honor’s Image to Video feature, which uses Google’s Veo 2 model to bring static images to life as short video clips or animated GIFs.

Google I/O 2025 and WWDC 2025 could hardly have been more different when it came to AI. Google mentioned AI more than 100 times during its keynote, more often than Apple mentioned all of its AI features, Apple Intelligence, and Siri combined during its own.

Apple could benefit from Google’s head start

The challenge for Apple is whether it can rebuild faith in Apple Intelligence. It used its upcoming AI features to promote the iPhone 16 series, and a year later, many of them are still not available to customers who bought into the marketing.

Apple has promised to deliver these features next year, but the ChatGPT partnership shows that it isn’t afraid to look externally. A potential collaboration on Gemini would bring immense benefits to iOS users and also allow Apple to focus its efforts on building deeper features, rather than competing directly with Google.

History suggests that Apple would be open to this, but it would only be a temporary agreement until it can refine its own capabilities. I use an iPhone daily alongside one of the best folding phones, usually the Oppo Find N5 or the Razr 60 Ultra. Observing my usage over the past couple of weeks, I’ve realized that when I have a choice of phones, I’ll always turn to an Android phone with Gemini for any AI-powered tasks.

Apple has a strong vision for Apple Intelligence, but based on what’s known publicly, Google seems to be closer to building features that will capture the attention of potential customers. Considering the popularity of the Ray-Ban Meta smart glasses, eyewear is shaping up to be the next battle frontier, and a partnership with Gemini may be the fastest way for Apple to bring a product to market.

The key question: What about privacy?

For all the benefits, there’s likely one area where a partnership could fall apart. Apple has made privacy a significant part of its focus with Apple Intelligence, and the company has built a reputation around data privacy.

Meanwhile, Google offers many similar features designed to protect the privacy of your data, such as Android’s Private Compute Core, but it doesn’t have the same trust among everyday customers when it comes to privacy.

A partnership between the two companies that makes Gemini the default assistant could also lead to further regulatory scrutiny regarding competition. Yet, if Apple can work its way around that, Gemini would make the ideal replacement for Siri, allowing Apple to focus on building the AI experiences that only it can.


Nirave is a veteran tech journalist and creator at House of Tech. He's reviewed over 1,000 phones and other consumer gadgets over the past 20 years. A heart attack at 33 inspired him to consider the impact of technology on our physical, mental, and emotional health. Say hi to him on Twitter or Threads
