Google Lens' new search feature lets you find objects using a mix of text and images

For when you're at a loss for words to describe what you're looking for

What you need to know

- Google has announced a new Lens-powered multisearch feature on mobile devices.

- The new functionality lets you search for objects using both text and images at the same time.

- It is available as a beta feature in English in the U.S.

At its Search On event last year, Google teased a new Lens feature that would power your shopping experiences on the best Android phones and Chromebooks. The search giant is now rolling out that experience with a new smart feature called multisearch.

Google announced today that it's launching a new way to search on mobile devices. The new multisearch function in Lens allows you to do a quick search for objects using a combination of text and images at the same time.

It comes in handy when you don't "have all the words to describe what you were looking for," the search giant noted.

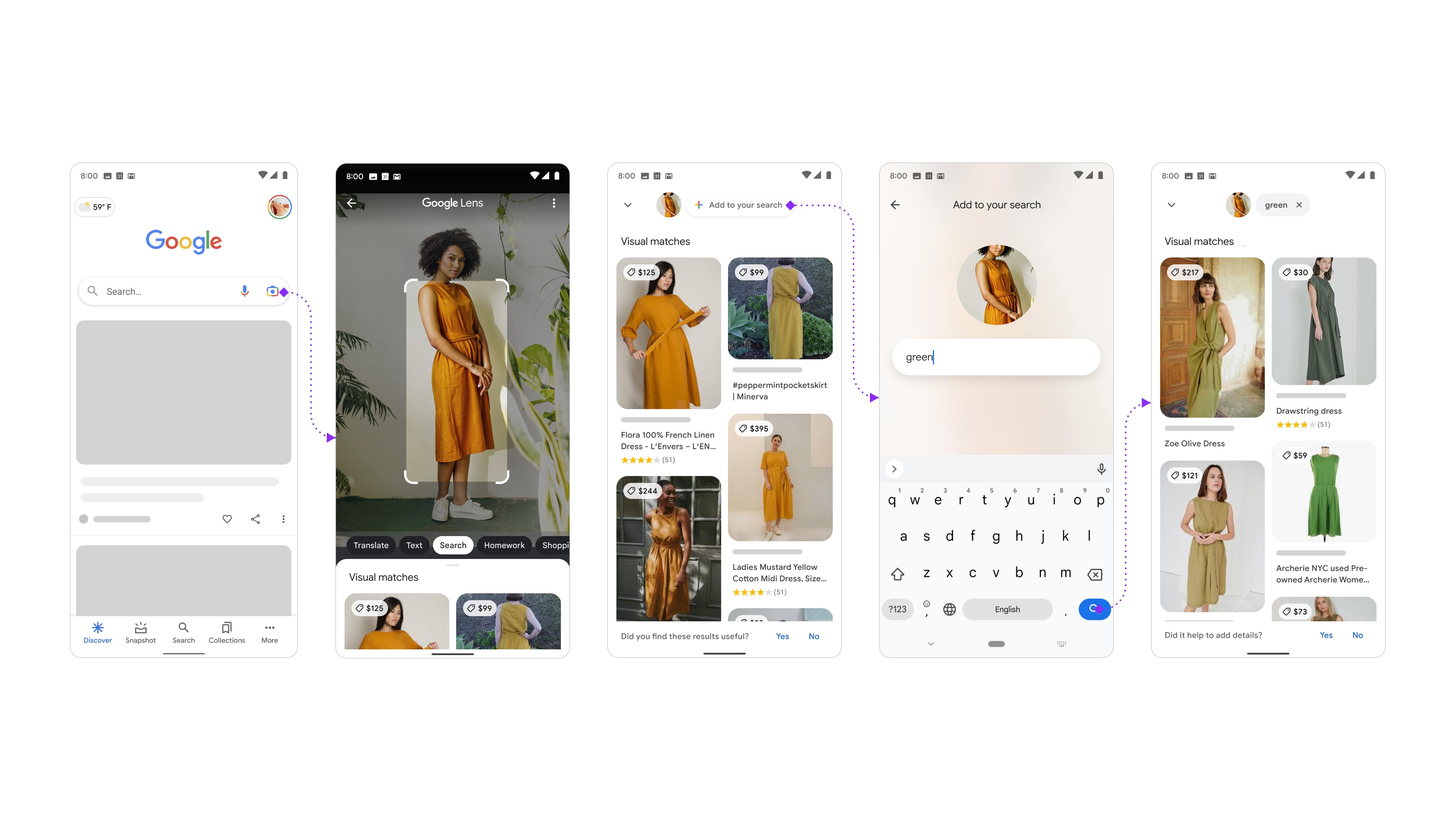

Google provided a couple of screenshots showing the feature in action. In one example, multisearch is used to refine a search for an orange dress by adding the query “green” to find it in another color.

You can also use the new functionality to snap a photo of a rosemary plant and add “care instructions” to your query.

Google noted that multisearch helps you "go beyond the search box and ask questions about what you see." In other words, it helps you search for objects you find hard to describe.

The feature resides in the Google app for Android and iOS, though it's only available to beta testers in the U.S. for now. To get started, tap the Lens camera icon in the Google app, then either pick one of your screenshots or take a photo of an object. Next, swipe up and tap the "+ Add to your search" button to refine the search with text.

"All this is made possible by our latest advancements in artificial intelligence, which is making it easier to understand the world around you in more natural and intuitive ways," Google said in a blog post. "We’re also exploring ways in which this feature might be enhanced by MUM– our latest AI model in Search– to improve results for all the questions you could imagine asking."

Google didn't say whether the feature is coming to the web. But it's a safe bet that it will, given Lens' availability on the web inside Google Photos and the launch of the Lens-powered reverse image search option on Chrome for desktop last year.

For the time being, the feature only works for searches in English. The Mountain View-based company also noted that it returns "the best results for shopping searches," though it can be used for other types of queries as well.

Jay Bonggolto always keeps a nose for news. He has been writing about consumer tech and apps for as long as he can remember, and he has used a variety of Android phones since falling in love with Jelly Bean. Send him a direct message via X or LinkedIn.