Gemini 2.5 Flash-Lite now 'generally available' following Google's month-long preview

Google's "fastest" Gemini model is here, acting as the finale to its 2.5 series.

What you need to know

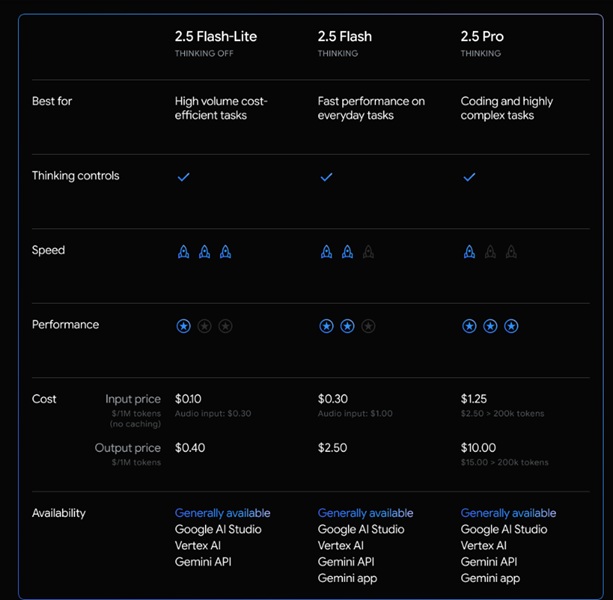

- Google announced the "general availability" of its Gemini 2.5 Flash-Lite model, which it says is the fastest and most cost-efficient model in its 2.5 series.

- Developers will pay $0.10 per 1M input tokens and $0.40 per 1M output tokens, and 2.5 Flash-Lite has already been put through some demanding real-world scenarios.

- Companies like Satlyt have used the model to summarize telemetry and process satellite data far more quickly than before.

- Gemini 2.5 Flash-Lite entered preview in June, when Google made 2.5 Flash and 2.5 Pro publicly available in the Gemini app, Vertex AI, and AI Studio.

The final entry in Google's recent string of 2.5 models has arrived, as the company moves a Gemini variant out of testing.

This afternoon (July 22), in an email to Android Central, Google announced the "general availability" of Gemini 2.5 Flash-Lite, which has exited its preview stage. The company went into more detail in a Developers Blog post, calling this Gemini variant its "fastest" and most "cost-efficient" AI model. The post notes that developers will pay $0.10 per 1M input tokens and $0.40 per 1M output tokens.
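To put those rates in perspective, here is a minimal Python sketch that estimates what a single request might cost from its token counts. It assumes the flat per-1M-token rates quoted above apply; the helper name `estimate_cost_usd` is hypothetical, and actual billing may differ (for example by input type), so treat it as an illustration rather than a billing tool.

```python
# Hypothetical helper: estimates a Gemini 2.5 Flash-Lite request's cost using
# the per-1M-token rates quoted in the article. Assumes flat rates; real
# billing may differ (e.g. by input type), so this is only an illustration.

INPUT_USD_PER_1M = 0.10   # quoted price per 1M input tokens
OUTPUT_USD_PER_1M = 0.40  # quoted price per 1M output tokens


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in US dollars for one request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Scale each token count by its per-1M rate, then convert to dollars.
    return (input_tokens * INPUT_USD_PER_1M
            + output_tokens * OUTPUT_USD_PER_1M) / 1_000_000


if __name__ == "__main__":
    # Example: summarizing a 200,000-token log into a 2,000-token report.
    cost = estimate_cost_usd(200_000, 2_000)
    print(f"${cost:.4f}")
```

For that example the estimate comes to about two cents: 200,000 input tokens at $0.10 per 1M is $0.02, and 2,000 output tokens at $0.40 per 1M is $0.0008, for $0.0208 in total.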

Power is the name of the game for Gemini 2.5 Flash-Lite. While extremely lightweight, this Gemini variant still retains Google's thinking controls. More than that, the company highlights the model's "all-around high quality" capabilities in "coding, math, science, reasoning, and multimodal understanding."

Google showcases the capabilities of Gemini 2.5 Flash-Lite by pointing to its performance in a few real-world scenarios.

From Satellites to Data Analysis

Satlyt has been using 2.5 Flash-Lite to reduce latency for critical onboard diagnostics on its decentralized space computing platform. Gemini's fastest model has also helped cut Satlyt's power consumption by roughly 30% as it processes satellite data and provides real-time summaries of telemetry data. Other companies, such as HeyGen, have reportedly used Gemini 2.5 Flash-Lite to translate their video content into over 180 languages to reach global audiences.

DocsHound and Evertune have also used the 2.5 Flash-Lite model, to speed up long-video processing and analysis/report generation, respectively.

Google adds that developers can begin trying Gemini 2.5 Flash-Lite today (July 22) by specifying the model name "gemini-2.5-flash-lite" in their code. The model is available in Google AI Studio and Vertex AI.

A Month in Preview

We've been on quite a run with Google's Gemini 2.5 models over the past month. In June, the company brought its 2.5 Pro and 2.5 Flash models to the public via the Gemini app, Google AI Studio, and Vertex AI. The most important part of that announcement was the news that 2.5 Flash-Lite was entering preview. That came just a few months after Google made Gemini 2.0 Flash-Lite public earlier this year.

At the time, Google pitched 2.5 Flash-Lite's cost efficiency and speed to developers looking to speed up their workloads. If you need more reasoning power, there's Gemini 2.5 Pro.

Nickolas is always excited about tech and getting his hands on it. His writing ranges from delivering the latest tech story to scribbling in his journal. When Nickolas isn't chasing a story, he's often grinding away at a game or chilling with a book in his hand.