The Pixel 2 camera's secret weapon: A Google-designed SoC, the 'Pixel Visual Core'

We've been using the Pixel 2 and its bigger sibling, the Pixel 2 XL, for a while. Once again, Google's phones have some fantastic photo capabilities. What we're seeing from both the 12.2MP rear camera and the 8MP front-facing camera is just so much better than any other phone we've ever used. And we've used a lot of them.

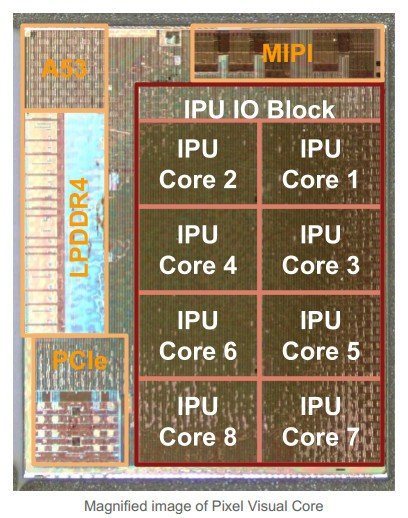

And that's before Google enables its secret weapon. Google has designed a custom imaging-focused SoC (system on chip) in the Pixel 2, and it's called Pixel Visual Core.

We don't have all the details; Google isn't ready to share them, and maybe isn't even aware yet of just what this custom chip is capable of. What we do know is that the Pixel Visual Core is built around a Google-designed eight-core Image Processing Unit. This IPU can perform three trillion operations each second while running off the tiny battery inside a mobile phone.

Interestingly, the Pixel Visual Core wasn't even enabled at launch on the Pixel 2 and 2 XL; we're only now seeing an "early version" of it with the Android 8.1 Developer Preview 2. With the Pixel Visual Core finally enabled, Google's HDR+ routines are processed on this IPU, where they run five times faster and use less than one-tenth of the energy they would on the standard image processor in the Snapdragon 835.
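To put those quoted figures side by side, here is a minimal back-of-the-envelope sketch. It uses only the numbers in this article; the function names are made up for illustration, the even split across the eight cores is our assumption rather than anything Google has stated, and one-tenth is treated as an upper bound on energy.

```python
# Figures as quoted in the article, relative to the Snapdragon 835 baseline (= 1.0).
SPEEDUP = 5.0              # HDR+ runs five times faster on the IPU
ENERGY_FRACTION = 0.1      # upper bound: "less than one-tenth of the energy"
IPU_OPS_PER_SECOND = 3e12  # three trillion operations per second
IPU_CORES = 8              # eight-core Image Processing Unit


def relative_time(speedup: float) -> float:
    """Processing time as a fraction of the baseline time."""
    return 1.0 / speedup


def relative_average_power(speedup: float, energy_fraction: float) -> float:
    """Average power = energy / time, both expressed relative to the baseline."""
    return energy_fraction / relative_time(speedup)


def ops_per_core(total_ops: float, cores: int) -> float:
    """Per-core throughput, ASSUMING an even split (not stated by Google)."""
    return total_ops / cores


if __name__ == "__main__":
    print(f"time vs. baseline:   {relative_time(SPEEDUP):.2f}")
    print(f"power vs. baseline:  at most {relative_average_power(SPEEDUP, ENERGY_FRACTION):.2f}")
    print(f"ops per core:        {ops_per_core(IPU_OPS_PER_SECOND, IPU_CORES):.3g} per second")
```

Taken at face value, the numbers suggest the IPU finishes an HDR+ shot in a fifth of the time while drawing no more than about half the average power of the baseline during that work. This is only arithmetic on the quoted claims, not a measurement.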

Google says this is possible because of how closely the software and hardware have been matched with each other. The software on the Pixel 2 controls "many more" details of the hardware than you would find in a typical processor-to-software arrangement. By handing off control to software, the hardware can become much simpler and more efficient.

Google is a software company first and foremost. It's no wonder that its first custom mobile SoC leverages software the way other companies use hardware.

Of course, this means the software becomes more and more complex. Rather than write code with standard methods, build it into a finished product and then try to manage everything after the work is done, Google has turned to domain-specific languages. Using Halide for the image processing and TensorFlow for the machine learning components, Google has built its own compiler that optimizes the finished code for the specific hardware involved.

The chip wasn't ready at launch and took extra time to enable, and right now the only part of the camera experience using the Pixel Visual Core is the HDR+ feature. That feature is already very good; the exciting part is what comes next.

HDR+ is only the beginning for the Pixel Visual Core.

With the Android 8.1 Developer Preview 2, the Pixel Visual Core will be opened up as a developer option. The goal is to give all third-party apps access through the Android Camera API. This will give every developer a way to use Google's HDR+ and the Pixel Visual Core, and we expect to see some really big things.

And for the "one last thing" we always love to hear about: Google says we should remember that the Pixel Visual Core is programmable, and that it is already building the next set of applications that can harness its power. As Google adds more abilities to its new SoC, the Pixel 2 and 2 XL will continue to get better and be able to do more. New imaging and machine learning applications are coming throughout the life of the Pixel 2, and we're ready for them.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.