Sony's AI-powered sensors are the future of smartphone cameras

Earlier this week, Sony announced two new IMX image sensors that are going to change everything when it comes to "smart" photography.

That's a bold statement, but it's also an easy prediction because of how smartphones and other smart products use their cameras. You expect a bigger "real" camera with an expensive lens to take great photos because its hardware makes that easier. But when you're working with a tiny sensor and a fixed lens inside a tiny device, there are no expensive optics to do the heavy lifting. Instead, things like AI fill the gap, and that's what makes Sony's new sensors special: they have a small AI processor embedded in the sensor package.

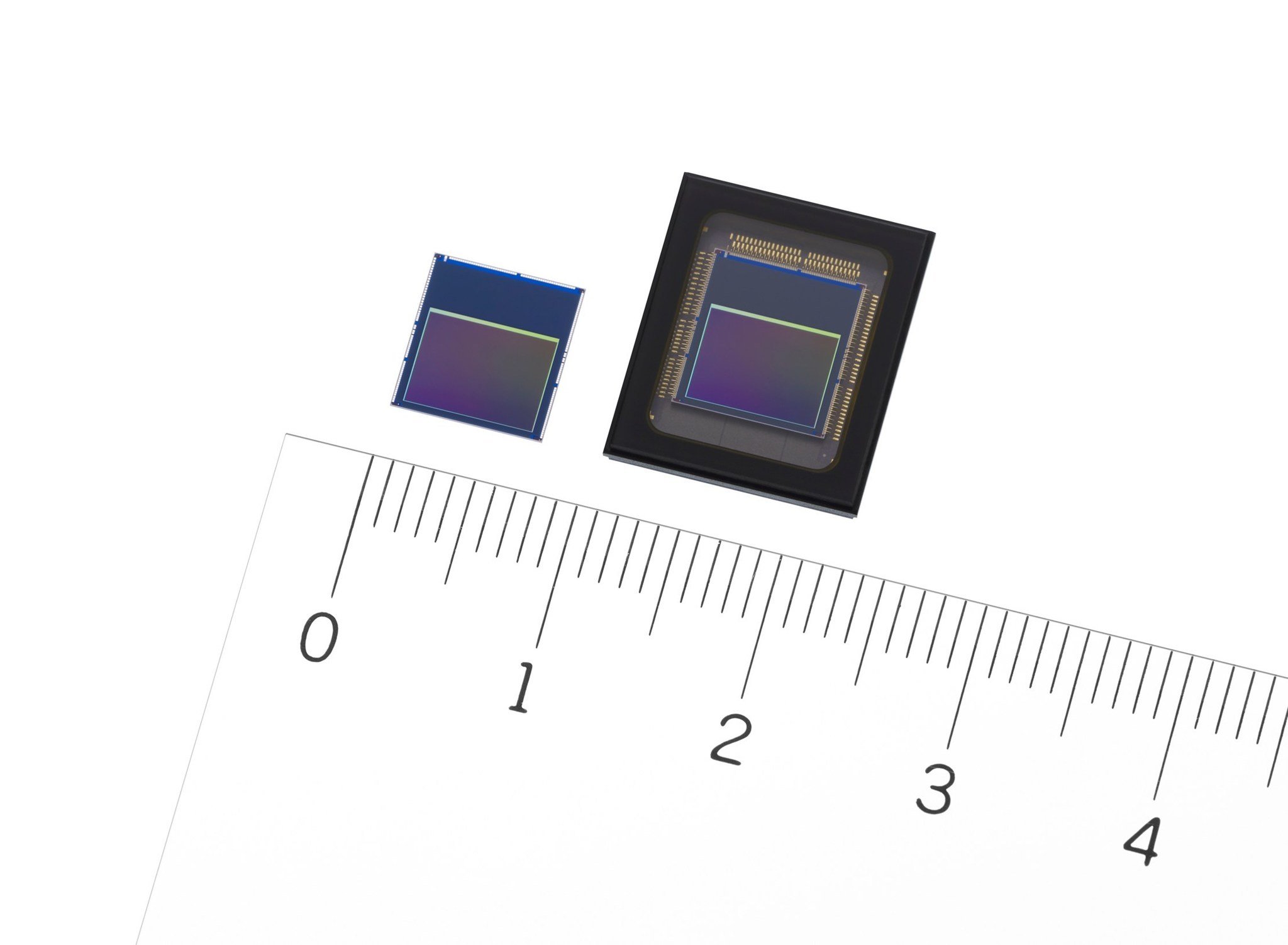

These sensors have nothing to do with phones or any other consumer products. They're designed for industrial and manufacturing machine vision equipment, and companies like Amazon have expressed interest because an all-in-one solution comes with plenty of built-in cost savings. But like all things, the sensors will get smaller and cheaper (right now the IMX costs about $90), and eventually you'll find them in home surveillance cameras, body cameras, and even your smartphone.

AI has turned smartphone photography into something amazing.

AI is a big deal when it comes to our smartphone cameras, but nobody really talks about why. That's OK, because our eyes can tell us that AI-powered "image acquisition systems" make our pictures look a lot better than phone cameras from just a few years ago. Still, knowing a little about how it works is always a good thing.

A camera sensor is just a piece of electronics that measures the color and intensity of light. When you open the camera app, the sensor starts gathering this data, and when you tap the shutter, that data is grabbed and sent off to another piece of hardware that turns it into a photograph. That means something, somewhere, has to be programmed to make the conversion.

AI comes into the mix by adding extra data to that conversion process, or even filling in data the sensor never captured. AI can help detect the outline of our hair against a busy background. It can estimate the distance of everything in a scene because it "knows" how big one thing is. It can take data from multiple image captures and combine them to make low-light photos better. All digital cameras use AI, and phone cameras use it a lot.
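The multi-frame idea is the easiest of these to show in a few lines. The sketch below isn't AI at all. It's plain frame averaging, the basic statistical trick that burst-mode low-light shooting builds on, and it assumes the frames are already perfectly aligned and that the scene is a flat, dim gray patch with made-up noise levels. Real phone pipelines layer alignment and learned merging on top of this. Averaging N captures cuts random noise by roughly the square root of N, so a 16-frame stack should come out about four times cleaner than a single frame.

```python
import random
import statistics

def stack_frames(frames):
    """Average a burst of frames pixel by pixel.

    Each frame is a flat list of brightness values. All frames are assumed
    to be already aligned and the same length.
    """
    return [sum(pixels) / len(pixels) for pixels in zip(*frames)]

def noise(frame, true_value):
    """Standard deviation of a frame's error against the true scene value."""
    return statistics.pstdev([p - true_value for p in frame])

random.seed(0)
TRUE_VALUE = 20.0    # a dim, uniform scene (arbitrary brightness units)
NOISE_SIGMA = 10.0   # assumed per-frame sensor noise
NUM_PIXELS = 4096
NUM_FRAMES = 16

# Simulate a burst of noisy captures of the same scene.
burst = [
    [random.gauss(TRUE_VALUE, NOISE_SIGMA) for _ in range(NUM_PIXELS)]
    for _ in range(NUM_FRAMES)
]

single = burst[0]
merged = stack_frames(burst)

print(f"1 frame:   noise ~ {noise(single, TRUE_VALUE):.2f}")
print(f"{NUM_FRAMES} frames: noise ~ {noise(merged, TRUE_VALUE):.2f}")
```

What a smart pipeline adds is deciding which pixels to trust, how to line up frames when your hand shakes, and what to do with things that moved between shots.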

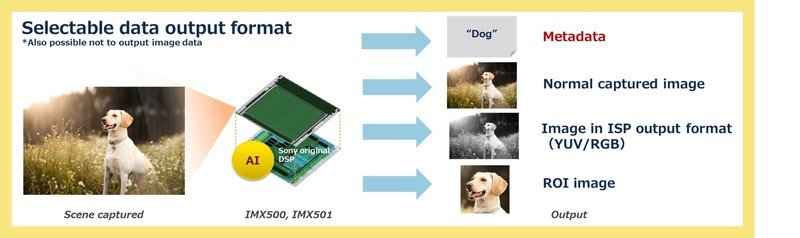

With Sony's new sensors, the middle layer of smart electronics is bypassed because there's an AI-powered image processor built into the package. That could mean a really great photo can be delivered directly to a display, but more importantly, it means the output doesn't have to be a photo at all.


An AI-powered image sensor means the output doesn't have to be an image at all.

Sony's examples of how this sensor can be used show that an item in an Amazon Go store can be tracked from its spot on a shelf into a customer's hands, that a running tally of the number of people visiting a store in the mall can be kept, or that the sensor can simply tell another piece of software that there is a dog in front of it.
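To make "the output doesn't have to be an image" a little more concrete, here's a hypothetical sketch of what software on the other end might receive. Sony's actual output format isn't covered here, so the `Detection` record, its `track_id` field (which assumes the sensor can follow the same object from frame to frame), and the confidence threshold are all made up for illustration. The point is that the downstream code never touches a photo; it just reads labels.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Detection:
    """One object the sensor reports in a frame (hypothetical format)."""
    label: str         # what the on-sensor model thinks it sees, e.g. "person"
    track_id: int      # assumed to stay the same while an object is in view
    confidence: float  # model confidence from 0.0 to 1.0

def count_visitors(frames, min_confidence=0.5):
    """Tally distinct people across a sequence of frames of metadata."""
    seen = set()
    for detections in frames:
        for d in detections:
            if d.label == "person" and d.confidence >= min_confidence:
                seen.add(d.track_id)
    return len(seen)

def dog_in_view(detections, min_confidence=0.5):
    """Report whether a single frame's metadata includes a dog."""
    return any(d.label == "dog" and d.confidence >= min_confidence
               for d in detections)

# Three frames of made-up sensor output: no pixels, just descriptions.
frames = [
    [Detection("person", 1, 0.92)],
    [Detection("person", 1, 0.90), Detection("person", 2, 0.81)],
    [Detection("person", 2, 0.85), Detection("dog", 7, 0.77)],
]

print(count_visitors(frames))   # 2
print(dog_in_view(frames[-1]))  # True
```

Sending a few labels instead of millions of pixels is also a big part of why an all-in-one sensor can save money: there's far less data to move and store.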

There are countless use cases for this sort of equipment in manufacturing, shipping, and any commercial application where the data about what a camera "sees" is as important as (or more important than) the image itself. The car you drive, the pen you use, even the phone you're holding were all built with machine vision sorting parts or finding defects along the way. But for us, the interesting part is how this would work inside a phone.

As mentioned, your phone already does all of this. Apple, Google, and Huawei have specialized hardware to help, but the chip that powers your phone has an ISP (Image Signal Processor) built into it that also relies heavily on AI to do the very same things Sony's new camera sensors are doing.

Samsung phones take really great "regular" photos, more than good enough for anyone, in fact. But when you start pixel-peeping, you find that Apple, Google, and Huawei phones take photos that are a little bit better. They can have truer colors, do a better job of detecting edges (which makes photos sharper), and give even non-portrait-mode shots a better sense of depth.


This is because Samsung uses a single ISP, albeit a very good one, to build a photo out of sensor data, while the others pair an ISP with a dedicated piece of hardware that uses AI to further refine the process. Samsung's pictures are excellent, and anyone who claims they look bad isn't being genuine. Imagine what Samsung could do with an extra layer of AI and data collection on top of the ISP tuning it's doing right now.

Now take that a step further and imagine what a company like Motorola or OnePlus, neither of which is exactly known for incredible cameras, could do with an extra layer of image processing done locally on the device without adding any overhead to the process.

Today, big companies that have big buildings full of equipment are interested in Sony's new sensors. In the near future, you and I will be, too, because this idea will trickle down.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.