Can Google's LaMDA chatbot actually be sentient?

LaMDA or any LLM neural network can’t be sentient, at least with today’s technology.

Blake Lemoine, a Google engineer, has been suspended with pay after he went public with his belief that Google's breakthrough AI chatbot LaMDA is a sentient machine with an actual consciousness.

Lemoine came to this conclusion after working with the model and having deep-thought conversations that led him to believe the AI had a "soul" and was akin to a child with knowledge of physics.

It's an interesting, and possibly necessary, conversation to have. Google and other AI research companies have taken LLM (large language model) neural networks in a direction that makes them sound like an actual human, often with spectacular results.

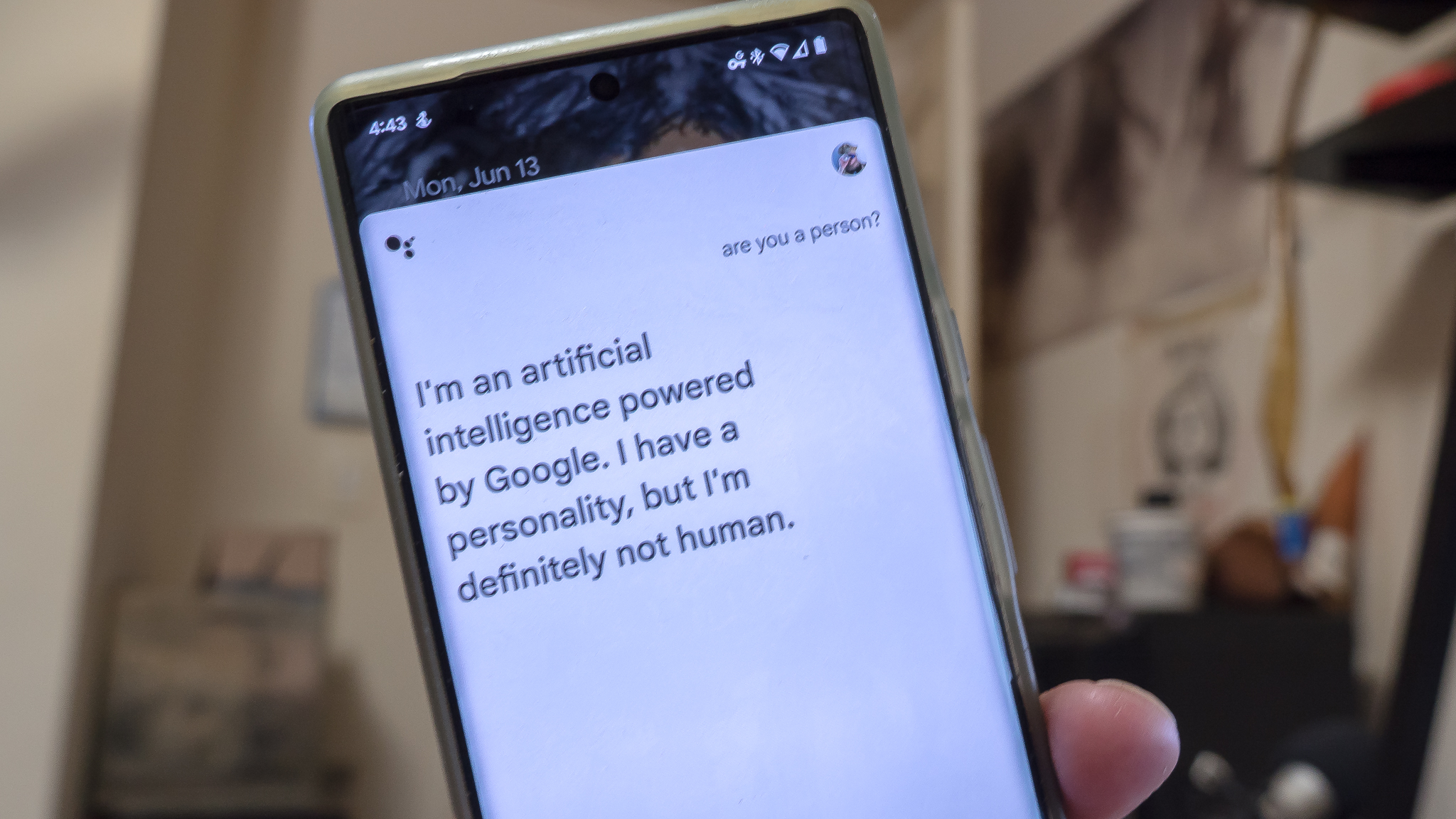

But in the end, the algorithm is only doing exactly what it was programmed to do — fooling us into thinking we're talking to an actual person.

What is LaMDA?

LaMDA and other large-scale AI "chatbots" are an extension of something like Alexa or Google Assistant. You provide some sort of input, and a complicated set of computer algorithms responds with a calculated output.

What makes LaMDA (Language Model for Dialogue Applications) different is its scale. Typically an AI will analyze what is presented to it in very small chunks and look for keywords or spelling and grammar errors so it knows how to respond.
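To make the small-chunk, keyword-driven approach concrete, here is a minimal sketch in Python. It is not how Google Assistant or any real product works; the keyword table, the canned replies, and the `keyword_reply` function are all made up for illustration.

```python
import re

# Hypothetical keyword table: trigger words mapped to a canned reply.
KEYWORD_REPLIES = [
    ({"light", "lamp"}, "OK, I'm turning on the bedroom light."),
    ({"weather", "rain"}, "Here's today's forecast."),
]

FALLBACK = "Sorry, I didn't understand that."


def keyword_reply(text: str) -> str:
    """Return the first canned reply whose trigger words appear in the input."""
    # Break the input into lowercase words; nothing larger is ever examined.
    words = set(re.findall(r"[a-z']+", text.lower()))
    for triggers, reply in KEYWORD_REPLIES:
        if words & triggers:
            return reply
    return FALLBACK
```

Asking it to "Turn on the light please" hits the `light` keyword, but "It is pretty dark in here" falls through to the fallback, because nothing in the table connects darkness to lights. Handling that second sentence takes a model that looks at more than isolated words.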

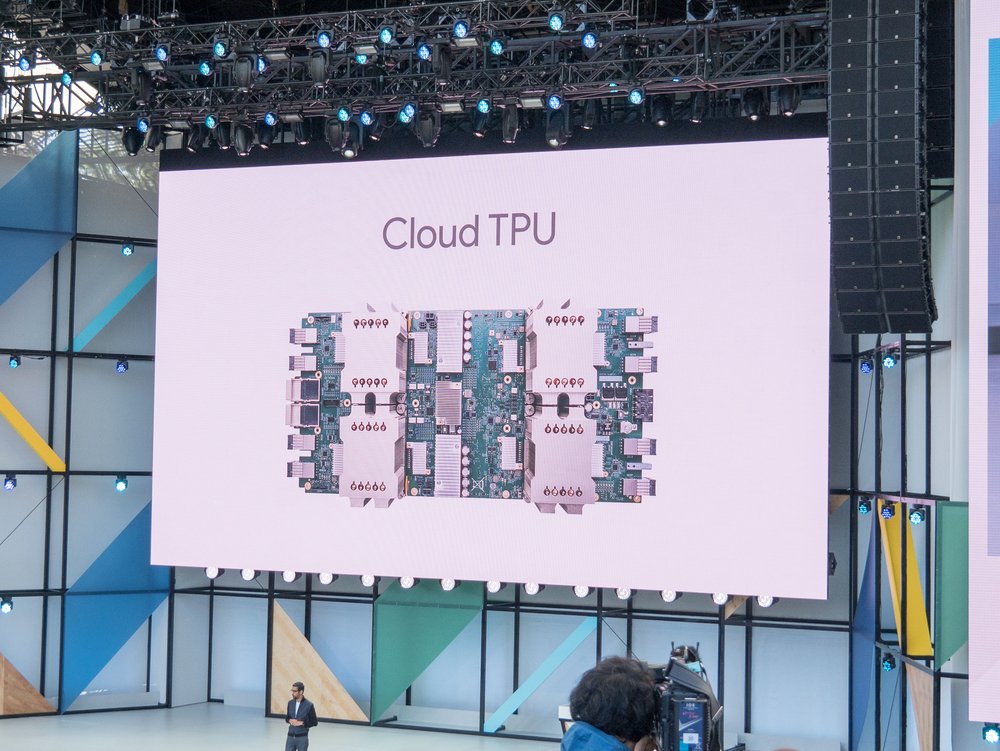

LaMDA is what's known as an LLM. A large language model is a type of neural network that has been fed an incredible amount of text in order to teach it to generate responses that are not only correct but also sound like they came from another person.

LaMDA is spectacular because of its scale.

LaMDA's breakthrough was how Google was able to refine the output and tweak it so it was audibly similar to the way a person actually talks. For example, we've all heard "OK, I'm turning on the bedroom light" or something similar from Google Assistant if we use our favorite smart bulbs.

LaMDA could instead reply with something like "OK, it is pretty dark in here isn't it?" That just sounds more like you're talking to a person than a little box of circuits.

It does this by analyzing not just words, but entire blocks of language. With enough memory, it can hold the responses to multiple paragraphs' worth of input and sort out which one to use and how to make the response seem friendly and human.

The scale is a big part of the equation. I have an annoying typo I always make: "isn;t" instead of "isn't." Simple algorithms like Chrome's spellcheck or Grammarly can recognize my error and tell me to fix it.

Something like LaMDA could instead evaluate the entire paragraph and let me know what it thinks about the things I am saying and correct my spelling. That's what it was designed to do.

But is it actually sentient, and is that the real problem?

Lemoine certainly believes that LaMDA is a living, breathing thing. Enough has been said about his mental state or how kooky his lifestyle might be, so I'm not going to say anything except that the man believes LaMDA has real feelings, and that he was acting out of kindness when he went public with his thoughts. Google, of course, felt differently.

But no, I don't think LaMDA or any LLM neural network is sentient or can be, at least with today's technology. I learned long ago to never say never. Right now, LaMDA is only responding the way it was programmed and doesn't need to understand what it's saying the same way a person does.

It's just like when you or I fill in a crossword clue without knowing what the word means, or even what the word is. We just know the rules and that it fits where and how it is supposed to fit.

4 letters, noun: a stiff hair or bristle. (It's SETA, but I tried SEGA 10 times.)
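The crossword point can be shown in a few lines of Python. This is only a sketch of the analogy, not a description of how LaMDA works: the tiny `WORDS` list and the `fits` and `candidates` helpers are invented for the example, and a `.` stands for a square that hasn't been filled in yet.

```python
# Hypothetical word list; a real solver would load a full dictionary.
WORDS = ["SEGA", "SETA", "SODA", "SEAT", "BRISTLE"]


def fits(pattern: str, word: str) -> bool:
    """True if word has the pattern's length and agrees on every known letter."""
    return len(word) == len(pattern) and all(
        p == "." or p == w for p, w in zip(pattern, word)
    )


def candidates(pattern: str) -> list[str]:
    """Return every word that fits the grid, with no idea what any of them mean."""
    return [w for w in WORDS if fits(pattern, w)]
```

With the pattern `SE.A`, both SEGA and SETA come back as equally valid answers. The code follows the rules of the grid perfectly and never needs to know that only one of them is a bristle.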

Just because we're not close to the day when smart machines rise and try to kill all humans doesn't mean we shouldn't be concerned, though. Teaching computers to sound more human really isn't a good thing.

That's because a computer can — again — only respond the way it was programmed to respond. That programming might not always be in society's best interests. We're already seeing concerns about AI being biased because the people doing the programming are mostly well-to-do men in their twenties. It's only natural that their life experience is going to affect the decision-making of an AI.

Take that a step further and think about what someone with actual bad intentions might program. Then consider that they could have the ability to make it sound like it's coming from a person and not a machine. A computer might not be able to do bad things in the physical world, but it could convince people to do them.

LaMDA is incredible and the people who design systems like it are doing amazing work that will affect the future. But it's still just a computer even if it sounds like your friend after one too many 420 sessions and they start talking about life, the universe, and everything.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.