No, your Galaxy S23 Ultra isn't actually taking pictures of the moon, and it doesn't matter

Over the weekend, a Reddit post from r/Android exploded across the tech-sphere as it attempted to explain how Samsung is "faking" claims that you can take pictures of the moon with your Galaxy S Ultra. And boy oh boy, did it seem to rile up everyone from Apple pundits to the Android faithful, and a lot of people in between.

Here's the quick TLDR:

Samsung's last three Galaxy S Ultra phones are touted as being able to take pictures of the moon. In the post, u/ibreakphotos took a picture of the moon, downsized it, applied a "gaussian blur" and then took a picture of the "moon" on their monitor. The phone's photo came out showing detail that the blurred image on the monitor no longer contained.
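For the curious, here is a rough sketch of what "downsize, then blur" does to an image. This is not u/ibreakphotos's actual workflow, which was presumably done in an image editor. The function names, the checkerboard test image, the downsizing factor, and the blur strength are all assumptions made for illustration.

```python
import math

def downsample(img, factor):
    """Shrink a grayscale image (a list of rows) by averaging factor x factor blocks."""
    h, w = len(img) // factor, len(img[0]) // factor
    return [
        [
            sum(img[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / factor ** 2
            for x in range(w)
        ]
        for y in range(h)
    ]

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian weights covering roughly three sigmas each side."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_1d(row, kernel):
    """Convolve one row with the kernel, clamping indices at the edges."""
    radius = len(kernel) // 2
    n = len(row)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += weight * row[j]
        out.append(acc)
    return out

def gaussian_blur(img, sigma):
    """Separable Gaussian blur: blur every row, then every column."""
    kernel = gaussian_kernel(sigma)
    rows = [blur_1d(r, kernel) for r in img]
    cols = [blur_1d(list(c), kernel) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def detail(img):
    """Crude detail score: mean absolute difference between horizontal neighbours."""
    diffs = [abs(r[i + 1] - r[i]) for r in img for i in range(len(r) - 1)]
    return sum(diffs) / len(diffs)

# Hypothetical stand-in for a moon photo: a 32x32 checkerboard with 4x4 squares.
sharp = [[255.0 if (x // 4 + y // 4) % 2 else 0.0 for x in range(32)]
         for y in range(32)]
small = downsample(sharp, 2)                 # fewer pixels to work with
degraded = gaussian_blur(small, sigma=1.5)   # edges smeared into their neighbours

print("detail before blur:", detail(small))
print("detail after blur: ", detail(degraded))
```

The point of the sketch is that blurring discards fine detail from the file itself, so any crisp texture that shows up in a later photo of the blurred image has to come from somewhere other than the source.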

The problem arises when you look at how Samsung markets this as being an actual capability of the zoom lens, which one (or many) could argue is misleading. But there's much more going on here.

AI and Machine Learning

I'm not well-versed in the ins and outs of how a photo is processed, whether it's from my smartphone or a traditional camera. But no matter whether you own the Galaxy S23 Ultra, iPhone 14 Pro Max, or a cheap Android phone, your images are being processed.

Each phone maker handles the image-capturing process a bit differently from the next, with some going super-heavy on saturation, while others try to give you a result that's more true-to-life. In many cases, again with every phone, you will likely come across a picture that looked amazing in the preview, only to see that the processing absolutely ruined it.

Two different phones with the same camera hardware won't produce the same images simply because of on-device processing.

There's a reason why you see and read about the AI and machine learning capabilities whenever a new processor is launched. Some of these improvements have to do with being able to properly account for the different cameras that each phone is using.


It's only been in the last few years that we've started to see more and more phones provide the ability to capture RAW photos. These are supposed to ditch all of the potential processing while capturing as much information as possible with the tap of a button.

Same argument, different situation

A few years ago, Huawei was "caught in 4K" as it was found to be superimposing images of the moon onto photos taken with the Huawei P30 Pro. That phone, which at the time was considered one of the best for mobile photography, also features a periscope zoom lens.

After many attempts to provide an explanation and boatloads of research done by the community, the overall conclusion was that it really doesn't matter. And on the surface, that's where you might land with Samsung and the Galaxy S23 Ultra.

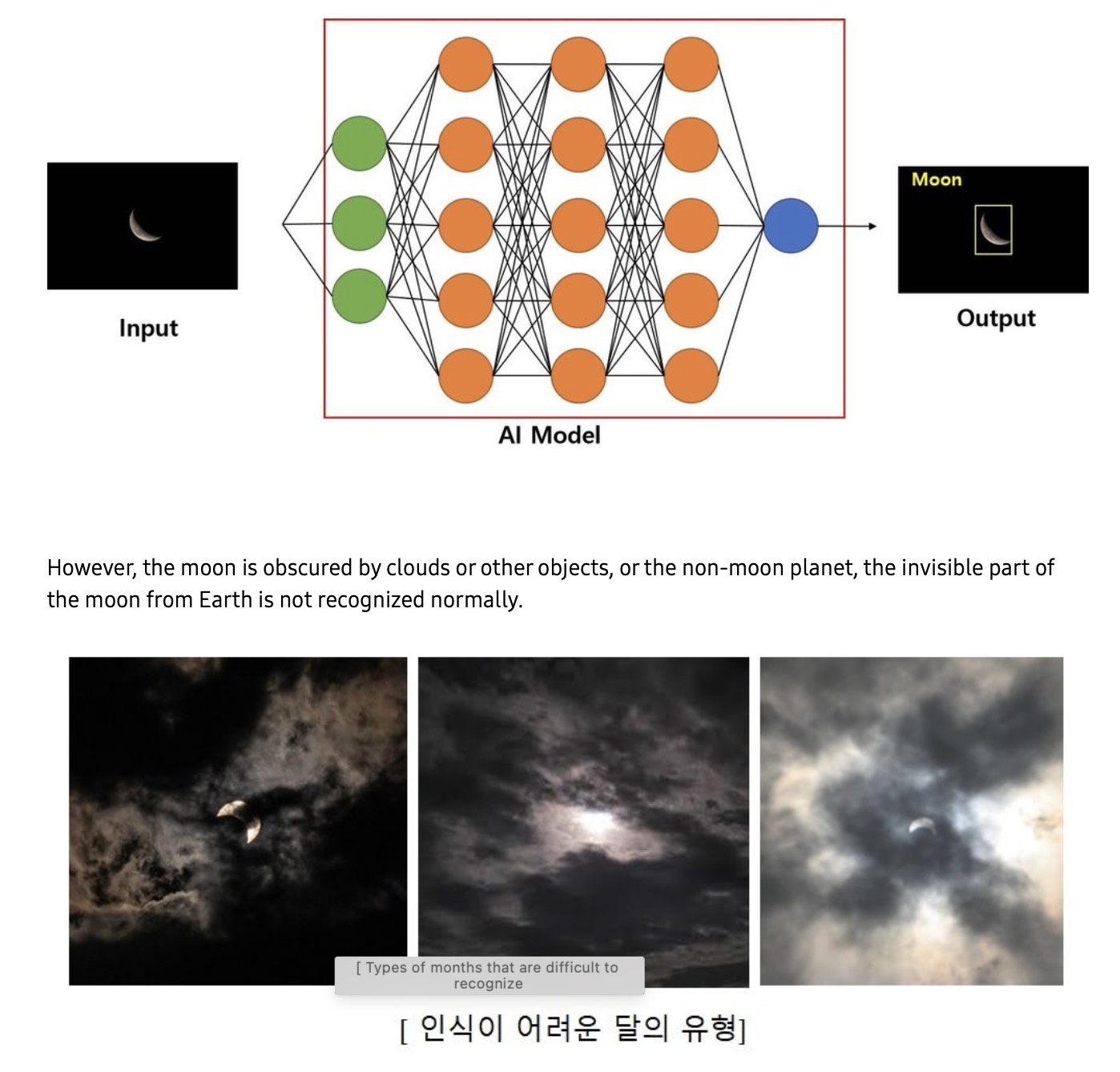

But as Max Weinbach so thoughtfully points out, it's not like Samsung hasn't already explained how a phone with 10MP telephoto cameras, offering 3x and 10x optical zoom, is capturing photos of the moon. According to the Samsung Community post (translated), your phone "uses an AI deep learning model to show the presence and absence of the moon in the image and the area (square box) as a result. AI models that have been trained can detect lunar areas even if other lunar images that have not been used for training are inserted."

The post goes on to confirm that (translated) "if the moon is obscured by clouds or other objects, non-lunar planets, or parts of the moon that are not visible from Earth, it is not normally recognized." It's why if you take a picture of the moon on a clear night, it'll come out looking like a mind-bending feat. But if there's any type of cloud cover or other obstruction, it simply won't be recognized and will just look like a big glaring ball in your picture.

It's not great, but just turn it off

The real question behind all of this is whether it actually matters or not. In terms of the capabilities of the camera itself, no, I don't think it matters. Where it does matter is in how the phone is marketed, as the average consumer isn't going to know why some of their moon photos turn out great (in perfect conditions), while others just look like crap.

It definitely feels a bit misleading, but there hasn't been enough of a fuss for Samsung to stop including it on its phones. At this point, you could just file it along with the myriad of other "gimmicks" that we've seen in recent years.

Just took this picture of Pluto with my Samsung Galaxy S24 Plus Pro Max pic.twitter.com/EbWmMAQH9k (March 12, 2023)

It's not like you have to take a picture of the moon if you don't want to. Samsung even lets you turn this functionality off: just toggle Scene optimizer off. As I was writing this, I noticed Samsung's description of what Scene optimizer does: "Automatically optimize camera settings to make dark scenes look brighter, food look tastier, and landscapes look more vivid."

If you don't want any of that, then just turn it off. And we don't need to burst someone else's bubble just because the Galaxy S23 Ultra isn't actually technology's version of the Elder Wand.

An absolute beast

If you're looking for the best smartphone that money can buy in 2023, look no further than the Galaxy S23 Ultra. This phone has all of the capabilities and features you could want, from the 200MP main camera to true multi-day battery life.

Andrew Myrick is a Senior Editor at Android Central. He enjoys everything to do with technology, including tablets, smartphones, and everything in between. Perhaps his favorite pastime is collecting different headphones, even if they all end up in the same drawer.