Ask Jerry: What happened to laser autofocus?

Let's talk about tech.

Welcome to Ask Jerry, where we talk about any and all the questions you might have about the smart things in your life. I'm Jerry, and I have spent the better part of my life working with tech. I have a background in engineering and R&D and have been covering Android and Google for the past 15 years.

Ask Jerry is a column where we answer your burning Android/tech questions with the help of long-time Android Central editor Jerry Hildenbrand.

I'm also really good at researching data about everything — that's a big part of our job here at Android Central — and I love to help people (another big part of our job!). If you have questions about your tech, I'd love to talk about them.

Email me at askjerryac@gmail.com, and I'll try to get things sorted out. You can remain anonymous if you like, and we promise we're not sharing anything we don't cover here.

I look forward to hearing from you!

What happened to laser autofocus?

Alex writes:

I've been wondering about the importance of laser auto-focus for smartphone cameras.

I'm asking this question because I've noticed this feature has been missing from OnePlus' flagship phones for several years now, after being included previously.

Is OnePlus cutting corners by not including it anymore or does this feature have little benefit to begin with? Thanks.

This is a really cool question that shows how much consumers care about the camera on any new phone. It also shows how manufacturers can't just make a change without people wondering why.

The short answer is that laser autofocus has been replaced with something "better", or at least something phone makers think is better.

There are a lot of ways to try and focus a camera. The simplest, and one that isn't used much by itself any longer, is contrast detection: the camera moves the lens and looks for the position where the image shows the most contrast between neighboring areas. This does work, but it's slow and needs a lot of light to be reliable.
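To show why contrast-based focusing is slow, here's a minimal Python sketch of a contrast sweep. The camera model is hypothetical: a single row of pixels that gets blurred more the further the lens sits from a made-up "true" focus position. The sharpness score (sum of squared differences between neighboring pixels) is one common choice, not what any particular phone uses. The point is that the camera has to capture and score a frame at every lens position it tries.

```python
def box_blur(signal, radius):
    """Average each sample with its neighbors within `radius`, clamping at the edges."""
    n = len(signal)
    blurred = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred


def contrast_score(signal):
    """Sum of squared differences between neighboring pixels: higher means sharper."""
    return sum((b - a) ** 2 for a, b in zip(signal, signal[1:]))


def capture(scene, lens_position, true_focus):
    """Hypothetical camera: blur grows with the distance from the true focus position."""
    return box_blur(scene, abs(lens_position - true_focus))


def contrast_autofocus(scene, true_focus, positions):
    """Try every lens position, keep the sharpest, and count how many frames it took."""
    best_position, best_score = None, float("-inf")
    frames_captured = 0
    for position in positions:
        frame = capture(scene, position, true_focus)
        frames_captured += 1
        score = contrast_score(frame)
        if score > best_score:
            best_position, best_score = position, score
    return best_position, frames_captured


scene = ([0.0] * 5 + [1.0] * 5) * 3  # simple stripes with sharp edges
print(contrast_autofocus(scene, true_focus=6, positions=range(11)))  # (6, 11)
```

It finds the right spot, but only after 11 captures. In dim light each of those frames is noisier, which is why this method struggles there.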

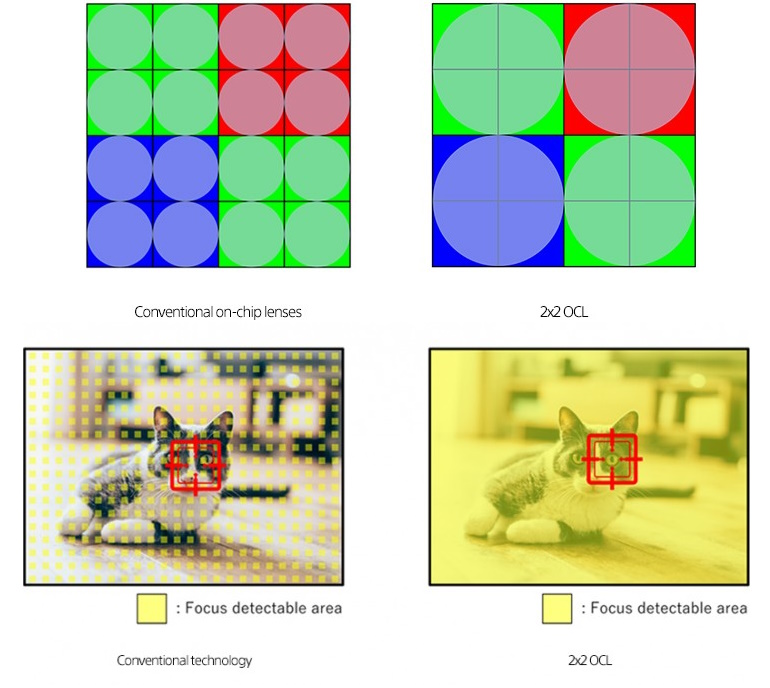

PDAF (Phase Detection Auto Focus) uses a small number of special pixels on the camera sensor, arranged in pairs that see light coming through opposite sides of the lens. When the subject is out of focus, the two views are shifted relative to each other; the size and direction of that shift tell the camera how far, and which way, to move the lens so the views line up and the image comes into focus.
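Phase detection compares two views of the same detail: one from pixels that see light through one side of the lens and one from pixels that see it through the other side. When the image is out of focus, the two views are offset from each other. Finding that offset tells the camera which way, and roughly how far, to move the lens in one step instead of sweeping through positions. Here's a minimal Python sketch of the offset search, assuming one-dimensional views and a simple mean-absolute-difference match. Real sensors, and the calibration from offset to lens movement, are more involved and aren't modeled here.

```python
def estimate_phase_shift(left_view, right_view, max_shift):
    """Find the shift that best lines up the two views (smallest mean absolute difference).

    The sign says which way the views are offset; the size says by how much.
    """
    n = len(left_view)
    best_shift, best_cost = 0, float("inf")
    for shift in range(-max_shift, max_shift + 1):
        # Only compare samples that overlap at this shift.
        pairs = [(left_view[i], right_view[i + shift]) for i in range(n) if 0 <= i + shift < n]
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift


# An edge seen by the "left" pixels, and the same edge offset by 3 pixels in the "right" pixels.
left_view = [0.0] * 8 + [1.0, 2.0, 3.0, 4.0] + [5.0] * 8
right_view = [0.0] * 3 + left_view[:-3]
print(estimate_phase_shift(left_view, right_view, max_shift=5))  # 3
print(estimate_phase_shift(right_view, left_view, max_shift=5))  # -3
```

Swapping the views flips the sign. That is the "which way" information a contrast sweep has to discover by trial and error.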

Laser Autofocus uses a small laser near the camera lens that emits a short burst toward the center of the frame. The camera measures how long it takes for this light to bounce back and uses that measurement to determine how far away the subject is, so the lens knows how to focus. A dedicated ToF (time of flight) depth sensor, which takes this kind of measurement across much of the scene instead of a single spot, can make this even better.
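The arithmetic behind laser focusing is simple. Light travels to the subject and back, so the distance is the speed of light times the round-trip time, divided by two. Turning that distance into a lens position can be sketched with the thin-lens equation 1/f = 1/d_o + 1/d_i. That's a textbook simplification of a real phone lens, and the 6 mm focal length below is a hypothetical value chosen only for illustration.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def distance_from_round_trip(seconds):
    """The light goes out and comes back, so halve the total path length."""
    return SPEED_OF_LIGHT * seconds / 2


def lens_extension(subject_distance, focal_length):
    """Thin-lens equation solved for the image distance d_i.

    Returns how far the lens must move out from its infinity-focus position (d_i - f),
    in the same units as the inputs.
    """
    image_distance = 1 / (1 / focal_length - 1 / subject_distance)
    return image_distance - focal_length


round_trip = 13.3e-9  # a reflection arriving about 13.3 nanoseconds later
distance = distance_from_round_trip(round_trip)  # roughly 2 meters
extension = lens_extension(distance, focal_length=0.006)  # hypothetical 6 mm lens
print(f"{distance:.2f} m away, move the lens out {extension * 1e6:.1f} micrometers")
```

Nearer subjects need the lens moved further out, and the timing involved is on the order of nanoseconds, which is why this job goes to dedicated hardware.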

We're going to talk a bit more about PDAF because it has mostly replaced laser focus. Improvements to the tech, like DPAF (Dual Pixel Auto Focus), make it fast and accurate: DPAF splits every pixel on the image sensor into two photodiodes, so every pixel can report focus information instead of just a few dedicated ones. You'll find it on expensive DSLR cameras as well as phones.

The OnePlus 12 takes this idea and extends it even further. Officially, the OnePlus 12 uses "multi-directional PDAF" to focus its camera sensors. To figure out what this is, you need to look toward Oppo, OnePlus' parent/sister company (depending on who you ask), which also uses the tech on phones like the Find X2.

With a custom Sony sensor, the pattern change (what PDAF uses to make focal adjustments) can be measured along both the vertical and horizontal axes instead of only vertically. This gives twice the data of standard PDAF, for faster focusing and better focus in low light.
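Why does a second direction help? A sensor that only compares one pair of pixel halves, say left against right, can only see an offset if the detail in front of it changes from left to right. Point it at horizontal stripes and there's nothing to line up. Here's a minimal Python sketch of that idea. The direction labels and the "any change at all counts" check are simplifications for this sketch; a real sensor needs enough signal above the noise.

```python
def detail_energy(patch):
    """Return (left_to_right, top_to_bottom) brightness change for a 2D patch (list of rows)."""
    left_to_right = sum((row[x + 1] - row[x]) ** 2
                        for row in patch for x in range(len(row) - 1))
    top_to_bottom = sum((patch[y + 1][x] - patch[y][x]) ** 2
                        for y in range(len(patch) - 1) for x in range(len(patch[0])))
    return left_to_right, top_to_bottom


def can_measure_phase(patch, comparisons):
    """Can a sensor comparing the given pixel halves see a focus offset in this patch?

    "left_right" pairs need detail that changes from left to right;
    "top_bottom" pairs need detail that changes from top to bottom.
    """
    left_to_right, top_to_bottom = detail_energy(patch)
    usable = {"left_right": left_to_right > 0, "top_bottom": top_to_bottom > 0}
    return any(usable[c] for c in comparisons)


horizontal_stripes = [[0, 0, 0, 0], [1, 1, 1, 1], [0, 0, 0, 0], [1, 1, 1, 1]]
print(can_measure_phase(horizontal_stripes, ["left_right"]))                # False
print(can_measure_phase(horizontal_stripes, ["left_right", "top_bottom"]))  # True
```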

Instead of each pixel having its own microlens, four adjacent pixels under the same single-color filter share one microlens on the sensor. This enlarges the focusing area and means the pixels used for focusing are also part of the image itself and don't have to be cropped out.
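Here's a minimal Python sketch of one such four-pixel group, assuming the four readings under a shared microlens can be read separately. The class and method names are hypothetical, not anything from Sony, Oppo, or OnePlus. The same four numbers give the pixel used in the photo, a left-versus-right pair, and a top-versus-bottom pair, which is how one group can serve the image and focus in two directions at once.

```python
from dataclasses import dataclass


@dataclass
class QuadPixel:
    """Four same-color pixels sharing one microlens (hypothetical readout layout)."""
    top_left: float
    top_right: float
    bottom_left: float
    bottom_right: float

    def image_value(self):
        # All four readings are summed into the photo, so nothing has to be cropped out.
        return self.top_left + self.top_right + self.bottom_left + self.bottom_right

    def left_right(self):
        # Left half vs right half: one sample for a horizontal phase comparison.
        return self.top_left + self.bottom_left, self.top_right + self.bottom_right

    def top_bottom(self):
        # Top half vs bottom half: one sample for a vertical phase comparison.
        return self.top_left + self.top_right, self.bottom_left + self.bottom_right


group = QuadPixel(top_left=1, top_right=2, bottom_left=3, bottom_right=4)
print(group.image_value())  # 10
print(group.left_right())   # (4, 6)
print(group.top_bottom())   # (3, 7)
```

A row of these groups would supply the two views for a phase comparison along each axis, while the summed values make up the ordinary image.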

Using every pixel both for focusing and for taking the actual photo means there's no need for extras like a laser or ToF sensor.

So far it seems to work really well. I expect we'll see all high-end phones doing something similar in the next few years until something better comes along!

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.
