Google unveils next-generation TPU for cloud computing

Get the latest news from Android Central, your trusted companion in the world of Android

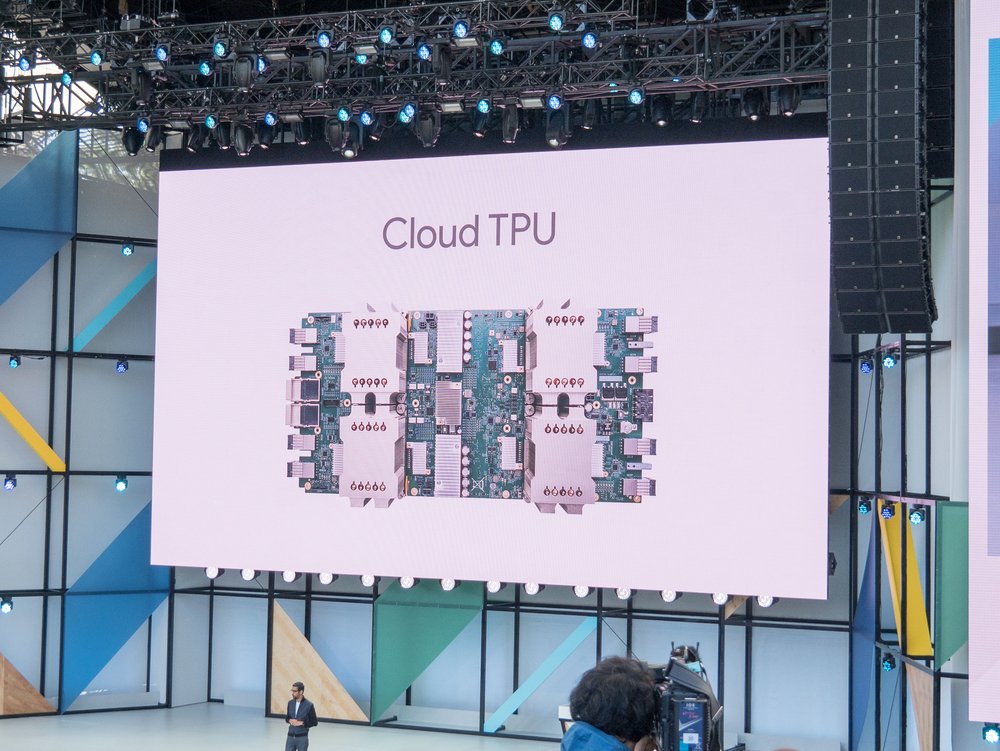

At Google I/O today, Google CEO Sundar Pichai provided an update on the next generation of the custom-made Tensor Processing Units (TPUs) that Google uses to power Google Compute Engine.

These new Cloud TPUs feature four chips on a single board and can deliver 180 teraflops (180 trillion floating-point operations per second; by comparison, NVIDIA's top-end GTX Titan X GPU runs at just 11 teraflops). Google has also linked 64 of these TPUs into a single TPU Pod supercomputer, for a combined processing power of 11.5 petaflops. Pichai says this new technology "lays the foundation for significant progress".
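The pod figure follows from simple multiplication. This rough check assumes that 180 teraflops is a per-board peak rating and that peak throughput adds linearly across all 64 boards, which real workloads would not fully achieve. The per-chip number is only what the four-chip board implies, not a figure Google quoted:

$$
\begin{aligned}
64 \times 180\ \text{TFLOPS} &= 11{,}520\ \text{TFLOPS} \approx 11.5\ \text{PFLOPS} \\
180\ \text{TFLOPS} \div 4\ \text{chips} &= 45\ \text{TFLOPS per chip}
\end{aligned}
$$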

Google first announced TPUs at 2016's Google I/O.

Marc Lagace was an Apps and Games Editor at Android Central between 2016 and 2020. You can reach out to him on Twitter @spacelagace.